How to use Python While with Assignment [4 Examples]

In this Python tutorial, you will learn how to use a Python while loop with assignment, with multiple examples and different approaches.

While working on a project, I was asked to optimise the code. During my research, I found that we can assign variables within a while loop in Python, which can save a few lines of code.

Generally, we use the “=” assignment operator to assign a variable in Python. We will also try the walrus operator “:=” to assign a variable within the while loop condition itself.

Let’s understand every example one by one with some practical scenarios.

Python while loop with the assignment using the “=” operator

First, we will use the “=” operator, the assignment operator in Python. It assigns a value to a variable, and here we use it to assign variables inside the body of a while loop.

Let’s see how a Python while loop with assignment works.

In this example, the outer loop starts with a condition that is always true: “while True:” keeps iterating until something inside the loop stops it. Inside the loop, we assign the variable line = "Hello", a default string value.

Then we assign i = 0 and start a nested while loop that runs print(line[i], '-', ord(line[i])) for each index, printing every character one by one along with its ASCII value, which the built-in ord() function returns.
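The code for this example is not shown in the text, so here is a minimal sketch of what the description implies. The final break and the pairs list are assumptions added so that the outer loop ends and the result can be inspected.

```python
# Sketch of the "=" assignment pattern described above.
# Assumption: a break is added, otherwise "while True" never ends.
pairs = []  # hypothetical helper list that records what gets printed

while True:
    line = "Hello"            # plain assignment inside the loop body
    i = 0
    while i < len(line):      # nested loop visits each character in turn
        print(line[i], '-', ord(line[i]))
        pairs.append((line[i], ord(line[i])))
        i += 1
    break                     # stop after one pass over the string
```

Without the break, the outer loop would print the same five lines forever.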

Python assign variables in the while condition using the walrus operator

We can also use the walrus operator “:=”, the assignment expression operator introduced in Python 3.8. It assigns a value to a variable as part of an expression, creating the variable if it does not already exist, and the expression evaluates to the assigned value.

Let’s see how we can use it in a while loop:

In this example, we have a list named capitals. The while loop condition creates current_capital and gives it the value capitals.pop(0), which removes and returns the first element of the list on every iteration. Using the walrus operator, the condition is written as (current_capital := capitals.pop(0)) != "Austin".

When the popped value is “Austin”, the comparison "Austin" != "Austin" evaluates to False, and the loop stops iterating.
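Here is a small sketch of this loop. The contents of the capitals list and the visited list are assumptions made for illustration; only the loop condition comes from the description above.

```python
# Hypothetical list contents; "Austin" must be present for the loop to end cleanly.
capitals = ["Sacramento", "Denver", "Austin", "Boston"]
visited = []

# pop(0) removes and returns the first element; the walrus operator binds it
# to current_capital before the comparison with "Austin" is made.
while (current_capital := capitals.pop(0)) != "Austin":
    print(current_capital)
    visited.append(current_capital)
```

Note that if “Austin” were not in the list, pop(0) would eventually be called on an empty list and raise IndexError.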

Python while with assignment by taking user input

Here, we will see how a Python while loop with assignment works when we take user input in the loop condition using the walrus operator.

In this example, the while condition assigns the user’s input, converted to an integer, to a variable. The loop body then checks whether the number is even or odd using the % operator.

Notice that the program asks the user to enter a number again and again: there is no break statement anywhere in the loop, so it runs indefinitely.
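The sketch below shows one way to write such a loop. Unlike the example described above, it stops on a blank entry; that stopping rule, the function name classify_numbers, and the read parameter (which lets the demo run without a keyboard) are all assumptions.

```python
def classify_numbers(read=input):
    """Read integers until a blank entry, labelling each as even or odd."""
    results = []
    # The walrus operator keeps the raw text so the loop body can reuse it.
    while (text := read("Enter a number (blank to stop): ")) != "":
        number = int(text)
        label = "even" if number % 2 == 0 else "odd"
        print(number, "is", label)
        results.append((number, label))
    return results


# Non-interactive demonstration: canned answers stand in for typed input.
answers = iter(["4", "7", ""])
demo = classify_numbers(lambda prompt: next(answers))
```

Calling classify_numbers() with no argument uses the real input() function instead.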

Python While with Assignment by calling the function

Now, we will see how to assign a variable inside a while loop in Python by calling a function. We will create a user-defined function so you can see how it works.

Let’s see how a Python while loop with assignment can call a user-defined function.

In this example, we create a function called get_ascii_value(). It takes a string as a parameter and returns the ASCII value of each character.

Then, we start a while loop that takes user_input as a string. The result variable stores the return value of the function: a dictionary mapping each character to its ASCII value.
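A possible version of this example is sketched below. The names get_ascii_value, user_input and result come from the description; the "exit" keyword, the ascii_session wrapper and its read parameter are assumptions added so the sketch can end and run without a keyboard.

```python
def get_ascii_value(text):
    """Return a dictionary mapping each character of text to its ASCII value."""
    return {char: ord(char) for char in text}


def ascii_session(read=input):
    tables = []
    # Assumption: typing "exit" ends the loop.
    while (user_input := read("Enter a string (or 'exit'): ")) != "exit":
        result = get_ascii_value(user_input)
        print(result)
        tables.append(result)
    return tables


# Non-interactive demonstration with canned answers.
answers = iter(["Hi", "exit"])
tables = ascii_session(lambda prompt: next(answers))
```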

In this Python article, you learned how to use a Python while loop with assignment through different approaches and examples. We covered several scenarios, such as assigning within a while loop, using the walrus operator in the loop condition, taking user input, and calling user-defined functions.

I am Bijay Kumar, a Microsoft MVP in SharePoint. Apart from SharePoint, I have been working on Python, machine learning, and artificial intelligence for the last 5 years. During this time I have gained expertise in various Python libraries such as Tkinter, Pandas, NumPy, Turtle, Django, Matplotlib, TensorFlow, SciPy, and Scikit-Learn, working for clients in the United States, Canada, the United Kingdom, Australia, New Zealand, and elsewhere.

Python Enhancement Proposals

PEP 572 – Assignment Expressions

This is a proposal for creating a way to assign to variables within an expression using the notation NAME := expr.

As part of this change, there is also an update to dictionary comprehension evaluation order to ensure key expressions are executed before value expressions (allowing the key to be bound to a name and then re-used as part of calculating the corresponding value).

During discussion of this PEP, the operator became informally known as “the walrus operator”. The construct’s formal name is “Assignment Expressions” (as per the PEP title), but they may also be referred to as “Named Expressions” (e.g. the CPython reference implementation uses that name internally).

Naming the result of an expression is an important part of programming, allowing a descriptive name to be used in place of a longer expression, and permitting reuse. Currently, this feature is available only in statement form, making it unavailable in list comprehensions and other expression contexts.

Additionally, naming sub-parts of a large expression can assist an interactive debugger, providing useful display hooks and partial results. Without a way to capture sub-expressions inline, this would require refactoring of the original code; with assignment expressions, this merely requires the insertion of a few name := markers. Removing the need to refactor reduces the likelihood that the code be inadvertently changed as part of debugging (a common cause of Heisenbugs), and is easier to dictate to another programmer.

During the development of this PEP many people (supporters and critics both) have had a tendency to focus on toy examples on the one hand, and on overly complex examples on the other.

The danger of toy examples is twofold: they are often too abstract to make anyone go “ooh, that’s compelling”, and they are easily refuted with “I would never write it that way anyway”.

The danger of overly complex examples is that they provide a convenient strawman for critics of the proposal to shoot down (“that’s obfuscated”).

Yet there is some use for both extremely simple and extremely complex examples: they are helpful to clarify the intended semantics. Therefore, there will be some of each below.

However, in order to be compelling, examples should be rooted in real code, i.e. code that was written without any thought of this PEP, as part of a useful application, however large or small. Tim Peters has been extremely helpful by going over his own personal code repository and picking examples of code he had written that (in his view) would have been clearer if rewritten with (sparing) use of assignment expressions. His conclusion: the current proposal would have allowed a modest but clear improvement in quite a few bits of code.

Another use of real code is to observe indirectly how much value programmers place on compactness. Guido van Rossum searched through a Dropbox code base and discovered some evidence that programmers value writing fewer lines over shorter lines.

Case in point: Guido found several examples where a programmer repeated a subexpression, slowing down the program, in order to save one line of code, e.g. instead of writing:

they would write:

Another example illustrates that programmers sometimes do more work to save an extra level of indentation:

This code tries to match pattern2 even if pattern1 has a match (in which case the match on pattern2 is never used). The more efficient rewrite would have been:
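The trade-off described here can be sketched as follows. The patterns, the function names and the sample data are hypothetical and chosen only for illustration; the second function shows how an assignment expression avoids the wasted match without adding a level of indentation.

```python
import re

# Hypothetical patterns, not taken from any real code base.
pattern1 = re.compile(r"id=(\d+)")
pattern2 = re.compile(r"(name)=(\w+)")


def extract_eager(data):
    # Both patterns are always tried, even when the first one matches.
    match1 = pattern1.match(data)
    match2 = pattern2.match(data)
    if match1:
        return match1.group(1)
    elif match2:
        return match2.group(2)
    return None


def extract_lazy(data):
    # pattern2 is tried only when pattern1 fails, with no extra nesting.
    if match1 := pattern1.match(data):
        return match1.group(1)
    elif match2 := pattern2.match(data):
        return match2.group(2)
    return None
```

Both functions return the same results; the second simply skips the second match attempt when the first succeeds.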

Syntax and semantics

In most contexts where arbitrary Python expressions can be used, a named expression can appear. This is of the form NAME := expr where expr is any valid Python expression other than an unparenthesized tuple, and NAME is an identifier.

The value of such a named expression is the same as the incorporated expression, with the additional side-effect that the target is assigned that value:
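A minimal illustration of this rule, using a hypothetical function f:

```python
def f(x):
    # Hypothetical function, used only to have something to call.
    return x * 2


# The parenthesized named expression evaluates to f(10) and also binds y.
value = (y := f(10)) + 1
print(value, y)
```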

There are a few places where assignment expressions are not allowed, in order to avoid ambiguities or user confusion:

- Unparenthesized assignment expressions are prohibited at the top level of an expression statement. This rule is included to simplify the choice for the user between an assignment statement and an assignment expression – there is no syntactic position where both are valid.

- Unparenthesized assignment expressions are prohibited at the top level of the right-hand side of an assignment statement. Again, this rule is included to avoid two visually similar ways of saying the same thing.

- Unparenthesized assignment expressions are prohibited for the value of a keyword argument in a call. This rule is included to disallow excessively confusing code, and because parsing keyword arguments is complex enough already.

- Unparenthesized assignment expressions are prohibited at the top level of a function default value. This rule is included to discourage side effects in a position whose exact semantics are already confusing to many users (cf. the common style recommendation against mutable default values), and also to echo the similar prohibition in calls (the previous bullet).

- Unparenthesized assignment expressions are prohibited as annotations for arguments, return values and assignments. The reasoning here is similar to the two previous cases; this ungrouped assortment of symbols and operators composed of : and = is hard to read correctly.

- Unparenthesized assignment expressions are prohibited in lambda functions. This allows lambda to always bind less tightly than :=; having a name binding at the top level inside a lambda function is unlikely to be of value, as there is no way to make use of it. In cases where the name will be used more than once, the expression is likely to need parenthesizing anyway, so this prohibition will rarely affect code.

- Assignment expressions inside f-strings require parentheses. What looks like an assignment operator in an f-string is not always an assignment operator: the f-string parser uses : to indicate formatting options. To preserve backwards compatibility, assignment operator usage inside of f-strings must be parenthesized. As noted above, this usage of the assignment operator is not recommended.
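These restrictions can be checked with the built-in compile() function, which raises SyntaxError without running the code. The sketch below assumes Python 3.8 or later; the helper name compiles and the sample snippets are illustrative choices, not part of the proposal.

```python
def compiles(source):
    """Return True if source compiles, False if it raises SyntaxError."""
    try:
        compile(source, "<sketch>", "exec")
    except SyntaxError:
        return False
    return True


# Each pair is (rejected unparenthesized form, accepted parenthesized form).
pairs = [
    ("y := f(x)", "(y := f(x))"),
    ("y0 = y1 := f(x)", "y0 = (y1 := f(x))"),
    ("foo(x = y := f(x))", "foo(x=(y := f(x)))"),
    ("def foo(answer = p := 42): pass", "def foo(answer=(p := 42)): pass"),
    ("def foo(answer: p := 42 = 5): pass", "def foo(answer: (p := 42) = 5): pass"),
    ("(lambda: x := 1)", "lambda: (x := 1)"),
]
for rejected, accepted in pairs:
    print(f"{rejected!r} -> {compiles(rejected)}, {accepted!r} -> {compiles(accepted)}")

# Inside an f-string, ":" starts a format spec unless the walrus is parenthesized.
x = 5
as_format_spec = f"{x:=10}"     # formats 5 with the spec "=10"; x is not rebound
x_after_spec = x
as_assignment = f"{(x := 10)}"  # binds x to 10, then formats it
```

That the parenthesized forms compile does not make them good style; most of them remain hard to read.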

An assignment expression does not introduce a new scope. In most cases the scope in which the target will be bound is self-explanatory: it is the current scope. If this scope contains a nonlocal or global declaration for the target, the assignment expression honors that. A lambda (being an explicit, if anonymous, function definition) counts as a scope for this purpose.

There is one special case: an assignment expression occurring in a list, set or dict comprehension or in a generator expression (below collectively referred to as “comprehensions”) binds the target in the containing scope, honoring a nonlocal or global declaration for the target in that scope, if one exists. For the purpose of this rule the containing scope of a nested comprehension is the scope that contains the outermost comprehension. A lambda counts as a containing scope.

The motivation for this special case is twofold. First, it allows us to conveniently capture a “witness” for an any() expression, or a counterexample for all(), for example:

Second, it allows a compact way of updating mutable state from a comprehension, for example:
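Both motivations can be sketched in a few lines. The function names and sample data below are assumptions; the point is that the target is bound in the enclosing function, not inside the comprehension or generator expression.

```python
def first_comment(lines):
    # any() stops at the first truthy item; comment keeps the "witness".
    if any((comment := line).startswith("#") for line in lines):
        return comment
    return None


def running_totals(values):
    total = 0
    # total lives in the function scope, so each step sees the previous update.
    partial_sums = [total := total + v for v in values]
    return partial_sums, total
```

For instance, running_totals([1, 2, 3, 4]) returns the partial sums together with the final total.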

However, an assignment expression target name cannot be the same as a for-target name appearing in any comprehension containing the assignment expression. The latter names are local to the comprehension in which they appear, so it would be contradictory for a contained use of the same name to refer to the scope containing the outermost comprehension instead.

For example, [i := i+1 for i in range(5)] is invalid: the for i part establishes that i is local to the comprehension, but the i := part insists that i is not local to the comprehension. The same reason makes these examples invalid too:

While it’s technically possible to assign consistent semantics to these cases, it’s difficult to determine whether those semantics actually make sense in the absence of real use cases. Accordingly, the reference implementation [1] will ensure that such cases raise SyntaxError, rather than executing with implementation defined behaviour.

This restriction applies even if the assignment expression is never executed:
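A sketch of how this restriction shows up in practice, assuming CPython 3.8 or later. The snippets are compiled rather than executed, so the undefined name stuff is harmless; the helper name compiles is an illustrative choice.

```python
def compiles(source):
    """Return True if source compiles, False if it raises SyntaxError."""
    try:
        compile(source, "<sketch>", "exec")
    except SyntaxError:
        return False
    return True


rebinding = [
    "[i := i + 1 for i in range(5)]",
    "[[(j := j) for i in range(5)] for j in range(5)]",
    "[i := 0 for i, j in stuff]",
    # The walrus below can never run, yet it is still rejected at compile time.
    "[False and (i := 0) for i, j in stuff]",
    "[i for i, j in stuff if True or (j := 1)]",
]
for source in rebinding:
    print(source, "->", compiles(source))

# Binding a name that is not an iteration variable is accepted.
allowed = "[(k := i + 1) for i in range(5)]"
print(allowed, "->", compiles(allowed))
```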

For the comprehension body (the part before the first “for” keyword) and the filter expression (the part after “if” and before any nested “for”), this restriction applies solely to target names that are also used as iteration variables in the comprehension. Lambda expressions appearing in these positions introduce a new explicit function scope, and hence may use assignment expressions with no additional restrictions.

Due to design constraints in the reference implementation (the symbol table analyser cannot easily detect when names are re-used between the leftmost comprehension iterable expression and the rest of the comprehension), named expressions are disallowed entirely as part of comprehension iterable expressions (the part after each “in”, and before any subsequent “if” or “for” keyword):

A further exception applies when an assignment expression occurs in a comprehension whose containing scope is a class scope. If the rules above were to result in the target being assigned in that class’s scope, the assignment expression is expressly invalid. This case also raises SyntaxError:

(The reason for the latter exception is the implicit function scope created for comprehensions – there is currently no runtime mechanism for a function to refer to a variable in the containing class scope, and we do not want to add such a mechanism. If this issue ever gets resolved this special case may be removed from the specification of assignment expressions. Note that the problem already exists for using a variable defined in the class scope from a comprehension.)
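The two exceptions above (comprehension iterable expressions and class scope) can be observed the same way. This is a sketch for CPython 3.8 or later; the snippets and the helper name compiles are illustrative.

```python
def compiles(source):
    """Return True if source compiles, False if it raises SyntaxError."""
    try:
        compile(source, "<sketch>", "exec")
    except SyntaxError:
        return False
    return True


# Named expressions in the iterable part (after "in") are rejected outright.
iterable_cases = [
    "[i + 1 for i in (j := stuff)]",
    "[i + 1 for i in range(2) for j in (k := stuff)]",
]

# The same comprehension is rejected in a class body but accepted in a function.
class_case = "class Example:\n    [(j := i) for i in range(5)]\n"
function_case = "def example():\n    return [(j := i) for i in range(5)]\n"

for source in iterable_cases + [class_case, function_case]:
    print(repr(source), "->", compiles(source))
```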

See Appendix B for some examples of how the rules for targets in comprehensions translate to equivalent code.

The := operator groups more tightly than a comma in all syntactic positions where it is legal, but less tightly than all other operators, including or, and, not, and conditional expressions (A if C else B). As follows from section “Exceptional cases” above, it is never allowed at the same level as =. In case a different grouping is desired, parentheses should be used.

The := operator may be used directly in a positional function call argument; however it is invalid directly in a keyword argument.

Some examples to clarify what’s technically valid or invalid:
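As a hedged sketch of these precedence rules (not the PEP's own example list), the following runs on Python 3.8 or later; foo and the variable names are hypothetical.

```python
def foo(value, cat=None):
    # Hypothetical function, used only to show argument positions.
    return value, cat


positional = foo(x := 3, cat="vector")        # allowed directly as a positional argument
keyword = foo(3, cat=(category := "vector"))  # a keyword value needs parentheses

# := binds less tightly than "or" and conditional expressions, so the whole
# right-hand side is evaluated before the name is bound.
(y := 0 or 5)
(z := 1 if False else 2)
print(x, category, y, z)
```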

Most of the “valid” examples above are not recommended, since human readers of Python source code who are quickly glancing at some code may miss the distinction. But simple cases are not objectionable:

This PEP recommends always putting spaces around :=, similar to PEP 8’s recommendation for = when used for assignment, whereas the latter disallows spaces around = used for keyword arguments.

In order to have precisely defined semantics, the proposal requires evaluation order to be well-defined. This is technically not a new requirement, as function calls may already have side effects. Python already has a rule that subexpressions are generally evaluated from left to right. However, assignment expressions make these side effects more visible, and we propose a single change to the current evaluation order:

- In a dict comprehension {X: Y for ...}, Y is currently evaluated before X. We propose to change this so that X is evaluated before Y. (In a dict display like {X: Y} this is already the case, and also in dict((X, Y) for ...) which should clearly be equivalent to the dict comprehension.)
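The effect of this change can be observed on Python 3.8 or later with a small sketch. The helper note and both function names are assumptions made for illustration.

```python
def evaluation_order():
    order = []

    def note(label, value):
        # Record which side of the "key: value" pair is being evaluated.
        order.append(label)
        return value

    table = {note("key", k): note("value", k * 2) for k in range(2)}
    return order, table


def lengths(names):
    # The key is bound first, so the value expression can reuse it.
    return {(key := name.lower()): len(key) for name in names}
```

The second function only works because the key expression runs before the value expression.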

Most importantly, since := is an expression, it can be used in contexts where statements are illegal, including lambda functions and comprehensions.

Conversely, assignment expressions don’t support the advanced features found in assignment statements:

- Multiple targets are not directly supported:

      x = y = z = 0  # Equivalent: (z := (y := (x := 0)))

- Single assignment targets other than a single NAME are not supported:

      # No equivalent
      a[i] = x
      self.rest = []

- Priority around commas is different:

      x = 1, 2  # Sets x to (1, 2)
      (x := 1, 2)  # Sets x to 1

- Iterable packing and unpacking (both regular or extended forms) are not supported:

      # Equivalent needs extra parentheses
      loc = x, y  # Use (loc := (x, y))
      info = name, phone, *rest  # Use (info := (name, phone, *rest))

      # No equivalent
      px, py, pz = position
      name, phone, email, *other_info = contact

- Inline type annotations are not supported:

      # Closest equivalent is "p: Optional[int]" as a separate declaration
      p: Optional[int] = None

- Augmented assignment is not supported:

      total += tax  # Equivalent: (total := total + tax)
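A few of these differences can be tried directly. The sketch below assumes Python 3.8 or later; the variable names and the compiles helper are illustrative.

```python
def compiles(source):
    """Return True if source compiles, False if it raises SyntaxError."""
    try:
        compile(source, "<sketch>", "exec")
    except SyntaxError:
        return False
    return True


# Chained assignment statement versus nested assignment expressions.
x = y = z = 0
(c := (b := (a := 0)))

# Augmented assignment has no := form; spell out the operation instead.
total, tax = 100, 8
(total := total + tax)

# Subscript and attribute targets are rejected at compile time.
subscript_ok = compiles("(items[0] := 1)")
attribute_ok = compiles("(obj.attr := 1)")
print(total, subscript_ok, attribute_ok)
```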

The following changes have been made based on implementation experience and additional review after the PEP was first accepted and before Python 3.8 was released:

- for consistency with other similar exceptions, and to avoid locking in an exception name that is not necessarily going to improve clarity for end users, the originally proposed TargetScopeError subclass of SyntaxError was dropped in favour of just raising SyntaxError directly. [3]

- due to a limitation in CPython’s symbol table analysis process, the reference implementation raises SyntaxError for all uses of named expressions inside comprehension iterable expressions, rather than only raising them when the named expression target conflicts with one of the iteration variables in the comprehension. This could be revisited given sufficiently compelling examples, but the extra complexity needed to implement the more selective restriction doesn’t seem worthwhile for purely hypothetical use cases.

Examples from the Python standard library

env_base is only used on these lines, putting its assignment on the if moves it as the “header” of the block.

- Current:

      env_base = os.environ.get("PYTHONUSERBASE", None)
      if env_base:
          return env_base

- Improved:

      if env_base := os.environ.get("PYTHONUSERBASE", None):
          return env_base

Avoid nested if and remove one indentation level.

- Current:

      if self._is_special:
          ans = self._check_nans(context=context)
          if ans:
              return ans

- Improved:

      if self._is_special and (ans := self._check_nans(context=context)):
          return ans

Code looks more regular and avoids multiple nested ifs. (See Appendix A for the origin of this example.)

- Current:

      reductor = dispatch_table.get(cls)
      if reductor:
          rv = reductor(x)
      else:
          reductor = getattr(x, "__reduce_ex__", None)
          if reductor:
              rv = reductor(4)
          else:
              reductor = getattr(x, "__reduce__", None)
              if reductor:
                  rv = reductor()
              else:
                  raise Error(
                      "un(deep)copyable object of type %s" % cls)

- Improved:

      if reductor := dispatch_table.get(cls):
          rv = reductor(x)
      elif reductor := getattr(x, "__reduce_ex__", None):
          rv = reductor(4)
      elif reductor := getattr(x, "__reduce__", None):
          rv = reductor()
      else:
          raise Error("un(deep)copyable object of type %s" % cls)

tz is only used for s += tz, moving its assignment inside the if helps to show its scope.

- Current:

      s = _format_time(self._hour, self._minute,
                       self._second, self._microsecond,
                       timespec)
      tz = self._tzstr()
      if tz:
          s += tz
      return s

- Improved:

      s = _format_time(self._hour, self._minute,
                       self._second, self._microsecond,
                       timespec)
      if tz := self._tzstr():
          s += tz
      return s

Calling fp.readline() in the while condition and calling .match() on the if lines make the code more compact without making it harder to understand.

- Current:

      while True:
          line = fp.readline()
          if not line:
              break
          m = define_rx.match(line)
          if m:
              n, v = m.group(1, 2)
              try:
                  v = int(v)
              except ValueError:
                  pass
              vars[n] = v
          else:
              m = undef_rx.match(line)
              if m:
                  vars[m.group(1)] = 0

- Improved:

      while line := fp.readline():
          if m := define_rx.match(line):
              n, v = m.group(1, 2)
              try:
                  v = int(v)
              except ValueError:
                  pass
              vars[n] = v
          elif m := undef_rx.match(line):
              vars[m.group(1)] = 0

A list comprehension can map and filter efficiently by capturing the condition:

Similarly, a subexpression can be reused within the main expression, by giving it a name on first use:

Note that in both cases the variable y is bound in the containing scope (i.e. at the same level as results or stuff).
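Both uses can be sketched as follows. The function f, the wrapper functions and the sample inputs are hypothetical; only the shape of the comprehensions matters.

```python
def f(x):
    # Hypothetical function, used only to have something to filter on.
    return x - 2


def positive_ratios(input_data):
    # f(x) is computed once per item and reused in the result tuple.
    return [(x, y, x / y) for x in input_data if (y := f(x)) > 0]


def value_and_square(n):
    # y is named on first use and reused later in the same list display.
    return [[y := f(x), y * y] for x in range(n)]
```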

Assignment expressions can be used to good effect in the header of an if or while statement:

Particularly with the while loop, this can remove the need to have an infinite loop, an assignment, and a condition. It also creates a smooth parallel between a loop which simply uses a function call as its condition, and one which uses that as its condition but also uses the actual value.
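A sketch of the while case, using an in-memory stream so it runs anywhere. The function name chunk_sizes and the chunk size are assumptions.

```python
import io


def chunk_sizes(stream, size):
    sizes = []
    # read() returns b"" at end of stream, which is falsy and ends the loop.
    while chunk := stream.read(size):
        sizes.append(len(chunk))
    return sizes


sizes = chunk_sizes(io.BytesIO(b"abcdefghij"), 4)
print(sizes)
```

The same loop written without an assignment expression needs a while True, a separate assignment, and an explicit break.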

An example from the low-level UNIX world:

Rejected alternative proposals

Proposals broadly similar to this one have come up frequently on python-ideas. Below are a number of alternative syntaxes, some of them specific to comprehensions, which have been rejected in favour of the one given above.

A previous version of this PEP proposed subtle changes to the scope rules for comprehensions, to make them more usable in class scope and to unify the scope of the “outermost iterable” and the rest of the comprehension. However, this part of the proposal would have caused backwards incompatibilities, and has been withdrawn so the PEP can focus on assignment expressions.

Broadly the same semantics as the current proposal, but spelled differently.

Since EXPR as NAME already has meaning in import , except and with statements (with different semantics), this would create unnecessary confusion or require special-casing (e.g. to forbid assignment within the headers of these statements).

(Note that with EXPR as VAR does not simply assign the value of EXPR to VAR – it calls EXPR.__enter__() and assigns the result of that to VAR.)

Additional reasons to prefer := over this spelling include:

- In if f(x) as y the assignment target doesn’t jump out at you – it just reads like if f x blah blah and it is too similar visually to if f(x) and y .

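- In all other situations where an as clause is allowed (import foo as bar, except Exc as var, with ctxmgr() as var), the keyword that starts the line leads readers to anticipate the clause, and the grammar ties that keyword closely to the as clause. To the contrary, the assignment expression does not belong to the if or while that starts the line, and we intentionally allow assignment expressions in other contexts as well.

- The parallel cadence between NAME = EXPR and if NAME := EXPR reinforces the visual recognition of assignment expressions.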

This syntax is inspired by languages such as R and Haskell, and some programmable calculators. (Note that a left-facing arrow y <- f(x) is not possible in Python, as it would be interpreted as less-than and unary minus.) This syntax has a slight advantage over ‘as’ in that it does not conflict with with, except and import, but otherwise is equivalent. But it is entirely unrelated to Python’s other use of -> (function return type annotations), and compared to := (which dates back to Algol-58) it has a much weaker tradition.

This has the advantage that leaked usage can be readily detected, removing some forms of syntactic ambiguity. However, this would be the only place in Python where a variable’s scope is encoded into its name, making refactoring harder.

Execution order is inverted (the indented body is performed first, followed by the “header”). This requires a new keyword, unless an existing keyword is repurposed (most likely with:). See PEP 3150 for prior discussion on this subject (with the proposed keyword being given:).

This syntax has fewer conflicts than as does (conflicting only with the raise Exc from Exc notation), but is otherwise comparable to it. Instead of paralleling with expr as target: (which can be useful but can also be confusing), this has no parallels, but is evocative.

One of the most popular use-cases is if and while statements. Instead of a more general solution, this proposal enhances the syntax of these two statements to add a means of capturing the compared value:

This works beautifully if and ONLY if the desired condition is based on the truthiness of the captured value. It is thus effective for specific use-cases (regex matches, socket reads that return '' when done), and completely useless in more complicated cases (e.g. where the condition is f(x) < 0 and you want to capture the value of f(x) ). It also has no benefit to list comprehensions.

Advantages: No syntactic ambiguities. Disadvantages: Answers only a fraction of possible use-cases, even in if / while statements.

Another common use-case is comprehensions (list/set/dict, and genexps). As above, proposals have been made for comprehension-specific solutions.

This brings the subexpression to a location in between the ‘for’ loop and the expression. It introduces an additional language keyword, which creates conflicts. Of the three, where reads the most cleanly, but also has the greatest potential for conflict (e.g. SQLAlchemy and numpy have where methods, as does tkinter.dnd.Icon in the standard library).

As above, but reusing the with keyword. Doesn’t read too badly, and needs no additional language keyword. Is restricted to comprehensions, though, and cannot as easily be transformed into “longhand” for-loop syntax. Has the C problem that an equals sign in an expression can now create a name binding, rather than performing a comparison. Would raise the question of why “with NAME = EXPR:” cannot be used as a statement on its own.

As per option 2, but using as rather than an equals sign. Aligns syntactically with other uses of as for name binding, but a simple transformation to for-loop longhand would create drastically different semantics; the meaning of with inside a comprehension would be completely different from the meaning as a stand-alone statement, while retaining identical syntax.

Regardless of the spelling chosen, this introduces a stark difference between comprehensions and the equivalent unrolled long-hand form of the loop. It is no longer possible to unwrap the loop into statement form without reworking any name bindings. The only keyword that can be repurposed to this task is with , thus giving it sneakily different semantics in a comprehension than in a statement; alternatively, a new keyword is needed, with all the costs therein.

There are two logical precedences for the := operator. Either it should bind as loosely as possible, as does statement-assignment; or it should bind more tightly than comparison operators. Placing its precedence between the comparison and arithmetic operators (to be precise: just lower than bitwise OR) allows most uses inside while and if conditions to be spelled without parentheses, as it is most likely that you wish to capture the value of something, then perform a comparison on it:

Once find() returns -1, the loop terminates. If := binds as loosely as = does, this would capture the result of the comparison (generally either True or False ), which is less useful.

While this behaviour would be convenient in many situations, it is also harder to explain than “the := operator behaves just like the assignment statement”, and as such, the precedence for := has been made as close as possible to that of = (with the exception that it binds tighter than comma).
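Under the precedence that was actually adopted, a find() loop of this kind needs parentheses around the assignment expression. A minimal sketch, with a hypothetical function name and sample data:

```python
def find_all(buffer, search_term):
    positions = []
    pos = -1
    # Parentheses are required: without them pos would be bound to the
    # result of the comparison (True or False), not to the index.
    while (pos := buffer.find(search_term, pos + 1)) >= 0:
        positions.append(pos)
    return positions


print(find_all("abcabcabc", "bc"))
```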

Some critics have claimed that the assignment expressions should allow unparenthesized tuples on the right, so that these two would be equivalent:

(With the current version of the proposal, the latter would be equivalent to ((point := x), y) .)

However, adopting this stance would logically lead to the conclusion that when used in a function call, assignment expressions also bind less tight than comma, so we’d have the following confusing equivalence:

The less confusing option is to make := bind more tightly than comma.
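The adopted grouping can be seen in a short sketch (the variable names are illustrative):

```python
x, y = 3, 4

pair = (point := x, y)      # groups as ((point := x), y)
first = point               # point holds only x at this stage

whole = (point := (x, y))   # parentheses capture the whole tuple
print(pair, first, whole)
```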

It’s been proposed to just always require parentheses around an assignment expression. This would resolve many ambiguities, and indeed parentheses will frequently be needed to extract the desired subexpression. But in the following cases the extra parentheses feel redundant:

Frequently Raised Objections

C and its derivatives define the = operator as an expression, rather than a statement as is Python’s way. This allows assignments in more contexts, including contexts where comparisons are more common. The syntactic similarity between if (x == y) and if (x = y) belies their drastically different semantics. Thus this proposal uses := to clarify the distinction.

The two forms have different flexibilities. The := operator can be used inside a larger expression; the = statement can be augmented to += and its friends, can be chained, and can assign to attributes and subscripts.

Previous revisions of this proposal involved sublocal scope (restricted to a single statement), preventing name leakage and namespace pollution. While a definite advantage in a number of situations, this increases complexity in many others, and the costs are not justified by the benefits. In the interests of language simplicity, the name bindings created here are exactly equivalent to any other name bindings, including that usage at class or module scope will create externally-visible names. This is no different from for loops or other constructs, and can be solved the same way: del the name once it is no longer needed, or prefix it with an underscore.

(The author wishes to thank Guido van Rossum and Christoph Groth for their suggestions to move the proposal in this direction. [2] )

As expression assignments can sometimes be used equivalently to statement assignments, the question of which should be preferred will arise. For the benefit of style guides such as PEP 8 , two recommendations are suggested.

- If either assignment statements or assignment expressions can be used, prefer statements; they are a clear declaration of intent.

- If using assignment expressions would lead to ambiguity about execution order, restructure it to use statements instead.

The authors wish to thank Alyssa Coghlan and Steven D’Aprano for their considerable contributions to this proposal, and members of the core-mentorship mailing list for assistance with implementation.

Appendix A: Tim Peters’s findings

Here’s a brief essay Tim Peters wrote on the topic.

I dislike “busy” lines of code, and also dislike putting conceptually unrelated logic on a single line. So, for example, instead of:

instead. So I suspected I’d find few places I’d want to use assignment expressions. I didn’t even consider them for lines already stretching halfway across the screen. In other cases, “unrelated” ruled:

is a vast improvement over the briefer:

The original two statements are doing entirely different conceptual things, and slamming them together is conceptually insane.

In other cases, combining related logic made it harder to understand, such as rewriting:

as the briefer:

The while test there is too subtle, crucially relying on strict left-to-right evaluation in a non-short-circuiting or method-chaining context. My brain isn’t wired that way.

But cases like that were rare. Name binding is very frequent, and “sparse is better than dense” does not mean “almost empty is better than sparse”. For example, I have many functions that return None or 0 to communicate “I have nothing useful to return in this case, but since that’s expected often I’m not going to annoy you with an exception”. This is essentially the same as regular expression search functions returning None when there is no match. So there was lots of code of the form:

I find that clearer, and certainly a bit less typing and pattern-matching reading, as:

It’s also nice to trade away a small amount of horizontal whitespace to get another _line_ of surrounding code on screen. I didn’t give much weight to this at first, but it was so very frequent it added up, and I soon enough became annoyed that I couldn’t actually run the briefer code. That surprised me!
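The "returns None when there is nothing useful" pattern can be sketched with `re.search`, which the essay itself mentions. The helper name `first_number` is an assumption made for illustration:

```python
import re


def first_number(text):
    # re.search returns None when nothing matches, so the bound value
    # doubles as the condition of the if statement.
    if match := re.search(r"\d+", text):
        return int(match.group())
    return None


print(first_number("order 42 shipped"))
print(first_number("no digits here"))
```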

There are other cases where assignment expressions really shine. Rather than pick another from my code, Kirill Balunov gave a lovely example from the standard library’s copy() function in copy.py:

The ever-increasing indentation is semantically misleading: the logic is conceptually flat, “the first test that succeeds wins”:

Using easy assignment expressions allows the visual structure of the code to emphasize the conceptual flatness of the logic; ever-increasing indentation obscured it.
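A flat "first test that succeeds wins" chain might look like the sketch below. It is not the copy.py code; `find_setting` and its three dictionaries are hypothetical names chosen for illustration:

```python
def find_setting(name, cli_args, env, defaults):
    """Look a setting up in three places; the first truthy hit wins."""
    # Note: falsy values (0, "", None) are treated as "not found" here.
    if value := cli_args.get(name):
        source = "command line"
    elif value := env.get(name):
        source = "environment"
    elif value := defaults.get(name):
        source = "default"
    else:
        raise KeyError(name)
    return value, source


print(find_setting("port", {}, {"port": "8080"}, {"port": "80"}))
```

Without assignment expressions, each fallback would need its own nested `else:` block, adding one indentation level per lookup.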

A smaller example from my code delighted me, both allowing to put inherently related logic in a single line, and allowing to remove an annoying “artificial” indentation level:

That if is about as long as I want my lines to get, but remains easy to follow.
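A sketch of a single-line test of this shape, assuming a hypothetical helper `shared_factor` whose parameter names are chosen only for illustration:

```python
from math import gcd


def shared_factor(x, x_base, n):
    # `and` short-circuits, so gcd() is only called when diff is non-zero.
    if (diff := x - x_base) and (g := gcd(diff, n)) > 1:
        return g
    return None


print(shared_factor(21, 6, 35))  # diff is 15, gcd(15, 35) is 5
```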

So, in all, in most lines binding a name, I wouldn’t use assignment expressions, but because that construct is so very frequent, that leaves many places I would. In most of the latter, I found a small win that adds up due to how often it occurs, and in the rest I found a moderate to major win. I’d certainly use it more often than ternary if, but significantly less often than augmented assignment.

I have another example that quite impressed me at the time.

Where all variables are positive integers, and a is at least as large as the n’th root of x, this algorithm returns the floor of the n’th root of x (and roughly doubling the number of accurate bits per iteration):

It’s not obvious why that works, but is no more obvious in the “loop and a half” form. It’s hard to prove correctness without building on the right insight (the “arithmetic mean - geometric mean inequality”), and knowing some non-trivial things about how nested floor functions behave. That is, the challenges are in the math, not really in the coding.

If you do know all that, then the assignment-expression form is easily read as “while the current guess is too large, get a smaller guess”, where the “too large?” test and the new guess share an expensive sub-expression.

To my eyes, the original form is harder to understand:
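The two forms being compared can be sketched as follows, based on the description above (all values positive integers, `a` at least as large as the n'th root of `x`). The function names are assumptions added for illustration:

```python
def iroot_walrus(x, n, a):
    """Floor of the n'th root of x, starting from a guess a >= the root."""
    # "While the current guess is too large, get a smaller guess."
    while a > (d := x // a**(n - 1)):
        a = ((n - 1) * a + d) // n
    return a


def iroot_loop_and_a_half(x, n, a):
    """Same algorithm in the "loop and a half" form."""
    while True:
        d = x // a**(n - 1)
        if a <= d:
            break
        a = ((n - 1) * a + d) // n
    return a


print(iroot_walrus(30, 3, 30), iroot_loop_and_a_half(30, 3, 30))
```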

Appendix B: Rough code translations for comprehensions

This appendix attempts to clarify (though not specify) the rules when a target occurs in a comprehension or in a generator expression. For a number of illustrative examples we show the original code, containing a comprehension, and the translation, where the comprehension has been replaced by an equivalent generator function plus some scaffolding.

Since [x for ...] is equivalent to list(x for ...) these examples all use list comprehensions without loss of generality. And since these examples are meant to clarify edge cases of the rules, they aren’t trying to look like real code.

Note: comprehensions are already implemented via synthesizing nested generator functions like those in this appendix. The new part is adding appropriate declarations to establish the intended scope of assignment expression targets (the same scope they resolve to as if the assignment were performed in the block containing the outermost comprehension). For type inference purposes, these illustrative expansions do not imply that assignment expression targets are always Optional (but they do indicate the target binding scope).

Let’s start with a reminder of what code is generated for a generator expression without assignment expression.

- Original code (EXPR usually references VAR):

```python
def f():
    a = [EXPR for VAR in ITERABLE]
```

- Translation (let’s not worry about name conflicts):

```python
def f():
    def genexpr(iterator):
        for VAR in iterator:
            yield EXPR
    a = list(genexpr(iter(ITERABLE)))
```

Let’s add a simple assignment expression.

- Original code:

```python
def f():
    a = [TARGET := EXPR for VAR in ITERABLE]
```

- Translation:

```python
def f():
    if False:
        TARGET = None  # Dead code to ensure TARGET is a local variable
    def genexpr(iterator):
        nonlocal TARGET
        for VAR in iterator:
            TARGET = EXPR
            yield TARGET
    a = list(genexpr(iter(ITERABLE)))
```

Let’s add a global TARGET declaration in f().

- Original code:

```python
def f():
    global TARGET
    a = [TARGET := EXPR for VAR in ITERABLE]
```

- Translation:

```python
def f():
    global TARGET
    def genexpr(iterator):
        global TARGET
        for VAR in iterator:
            TARGET = EXPR
            yield TARGET
    a = list(genexpr(iter(ITERABLE)))
```

Or instead let’s add a nonlocal TARGET declaration in f().

- Original code:

```python
def g():
    TARGET = ...
    def f():
        nonlocal TARGET
        a = [TARGET := EXPR for VAR in ITERABLE]
```

- Translation:

```python
def g():
    TARGET = ...
    def f():
        nonlocal TARGET
        def genexpr(iterator):
            nonlocal TARGET
            for VAR in iterator:
                TARGET = EXPR
                yield TARGET
        a = list(genexpr(iter(ITERABLE)))
```

Finally, let’s nest two comprehensions.

- Original code:

```python
def f():
    a = [[TARGET := i for i in range(3)] for j in range(2)]
    # I.e., a = [[0, 1, 2], [0, 1, 2]]
    print(TARGET)  # prints 2
```

- Translation:

```python
def f():
    if False:
        TARGET = None
    def outer_genexpr(outer_iterator):
        nonlocal TARGET
        def inner_generator(inner_iterator):
            nonlocal TARGET
            for i in inner_iterator:
                TARGET = i
                yield i
        for j in outer_iterator:
            yield list(inner_generator(range(3)))
    a = list(outer_genexpr(range(2)))
    print(TARGET)
```
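To check that the nested-comprehension translation behaves like the original, both can be wrapped in functions that return their results instead of printing them. The function names below are assumptions, and the walrus is parenthesized for readability:

```python
def with_comprehension():
    a = [[(TARGET := i) for i in range(3)] for j in range(2)]
    return a, TARGET


def with_generators():
    if False:
        TARGET = None  # dead code: only makes TARGET local to this function

    def outer_genexpr(outer_iterator):
        nonlocal TARGET

        def inner_generator(inner_iterator):
            nonlocal TARGET
            for i in inner_iterator:
                TARGET = i
                yield i

        for j in outer_iterator:
            yield list(inner_generator(range(3)))

    a = list(outer_genexpr(range(2)))
    return a, TARGET


print(with_comprehension())  # ([[0, 1, 2], [0, 1, 2]], 2)
print(with_generators())     # ([[0, 1, 2], [0, 1, 2]], 2)
```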

Because it has been a point of confusion, note that nothing about Python’s scoping semantics is changed. Function-local scopes continue to be resolved at compile time, and to have indefinite temporal extent at run time (“full closures”). Example:
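A small sketch of both points, compile-time resolution and closures that outlive the call. This is a simplified illustration with assumed names, not the PEP's own listing:

```python
a = 42  # module-level name; f() never reads or rebinds it


def f():
    try:
        probe = a  # `a` is local to f (it is a := target below) but not bound yet
    except UnboundLocalError:
        probe = "unbound"
    values = [(a := i) for i in range(3)]  # binds f's local `a`
    return probe, a, (lambda: a + 100)     # the lambda closes over f's `a`


probe, last, add_100 = f()
print(probe)      # unbound
print(last)       # 2
print(add_100())  # 102
print(a)          # 42
```

The name `a` inside `f` is classified as local when the function is compiled, which is why the early read fails even though a global `a` exists; the returned lambda keeps that local alive after `f` has returned.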

This document has been placed in the public domain.

Source: https://github.com/python/peps/blob/main/peps/pep-0572.rst

Last modified: 2023-10-11 12:05:51 GMT

8 Python while Loop Examples for Beginners


What is a while loop in Python? These eight Python while loop examples will show you how it works and how to use it properly.

In programming, looping refers to repeating the same operation or task multiple times. In Python, there are two different loop types, the while loop and the for loop. The main difference between them is the way they define the execution cycle or the stopping criteria.

The for loop goes through a collection of items and executes the code inside the loop for every item in the collection. There are different object types in Python that can be used as this collection, such as lists , sets , ranges, and strings .

On the other hand, the while loop takes in a condition and continues the execution as long as the condition is met. In other words, it stops the execution when the condition becomes false (i.e. is not met).

The for loop requires a collection of items to loop over. The while loop is more general and does not require a collection; we can use it by providing a condition only.

In this article, we will examine 8 examples to help you obtain a comprehensive understanding of while loops in Python.

Example 1: Basic Python While Loop

Let’s go over a simple Python while loop example to understand its structure and functionality:

The condition in this while loop example is that the variable i must be less than 5. The initial value of the variable i is set to 0 before the while loop. The while loop first checks the condition. Since 0 is less than 5, the code inside the while loop is executed: it prints the value of i and then increments i’s value by 1. Now the value of i is 1. Then the code returns to the beginning of the while loop. Since 1 is less than 5, the code inside the while loop is executed again.

This looping is repeated until the i variable becomes 5. When the i variable becomes 5, the condition i < 5 stops being true and the execution of the while loop stops. This Python while loop can be translated to plain English as “while i is less than 5, print i and increment its value by 1”.
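The loop described above can be sketched as:

```python
i = 0
while i < 5:
    print(i)  # prints 0, 1, 2, 3, 4 on separate lines
    i += 1
```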

There are several use cases for while loops. Let’s discover them by solving some Python while loop examples. Make sure to visit our Python Basics: Part 1 course to learn more about while loops and other basic concepts.

Example 2: Using a Counter in a while Loop

We don’t always know beforehand how many times the code inside a while loop will be executed. Hence, it’s a good practice to set up a counter inside the loop. In the following Python while loop example, you can see how a counter can be used:

We start by creating the counter variable by setting its value to 0. In this case, we ask the user to input a number between 0 and 10. The condition in the while loop checks if the user input is equal to 5. As long as the input is not equal to 5, the code inside the loop will be executed so the user will be prompted to enter a number.

When the user guesses the correct number (i.e. the input is equal to 5), the code exits the loop. Then we tell the user how many guesses it took to guess the correct number by printing the value of the counter. Feel free to test it yourself in your coding environment to see how the counter works in this example.
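A sketch of the counter idea follows. To keep it runnable without a keyboard, the guesses come from an iterable rather than from `input()`; the function name `count_guesses` and that substitution are assumptions:

```python
def count_guesses(guesses, secret=5):
    """Return how many guesses were needed to hit `secret`."""
    guesses = iter(guesses)
    counter = 1
    guess = next(guesses)  # interactive version: int(input("Enter a number: "))
    while guess != secret:
        guess = next(guesses)
        counter += 1
    return counter


print(count_guesses([3, 7, 5]))  # 3
```

If the iterable runs out before the secret number appears, `next()` raises `StopIteration`; an interactive version would simply keep prompting.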

Example 3: A while Loop with a List

Although for loops are preferred when you want to loop over a list , we can also use a while loop for this task. If we do, we need to set the while loop condition carefully. The following code snippet loops over a list called cities and prints the name of each city in the list:

There are two things to understand about how this while loop works. The len() function gives us the length of the cities list, which is equal to the number of items in the list. The variable i is used in two places. The first one is in the while loop condition, where we compare it to the length of the list. The second one is in the list index to select the item to be printed from the list. The variable is initialized with the value of 0. The expression cities[0] selects the first item in the list, which is London.

After the print() function is executed inside the while loop, the value of i is incremented by 1 and cities[1] selects the second item from the list, and so on. The code exits the loop when i becomes 4. By then, all the items in the list will have been printed out.
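A minimal sketch of this loop. Only "London" as the first item and the length of 4 come from the description; the other city names are assumed:

```python
cities = ["London", "Istanbul", "Houston", "Rome"]  # last three names are assumed

i = 0
while i < len(cities):
    print(cities[i])
    i += 1
```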

Lists are one of the most frequently used data structures in Python. To write efficient programs, you need to have a comprehensive understanding of lists and how to interact with them. You can find beginner-level Python list exercises in this article .

Example 4: A while Loop with a Dictionary

Constructing a while loop with a dictionary is similar to constructing a while loop with a list. However, we need a couple of extra operations because a dictionary is a more complex data structure.

If you want to learn more about Python data structures, we offer the Python Data Structures in Practice course that uses interactive exercises to explain the said data structures.

A dictionary in Python consists of key-value pairs. What do we mean by key-value pairs? This describes a two-part data structure where the value is associated with the key. In the following dictionary, names are the keys and the corresponding numeric scores are the dictionary values:

There are different ways of iterating over a dictionary. We’ll create a Python while loop that goes over the scores dictionary and prints the names and their scores:

The keys() method returns the dictionary keys, which are then converted to a list using the list() constructor. Thus, the keys variable created before the while loop is ['Jane', 'John', 'Emily']. The while loop condition is similar to the previous example. The code inside the loop is executed until the value of i reaches the number of items in the dictionary (i.e. the length of the dictionary). The expression keys[i] gives us the item at index i in the keys list. The expression scores[keys[i]] gives us the value of the key given by keys[i]. For example, the first item in the keys list is Jane and scores['Jane'] returns the value associated with Jane in the scores dictionary, which is 88.
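The dictionary loop can be sketched like this. Jane's score of 88 comes from the text; the scores for John and Emily are made-up values:

```python
scores = {"Jane": 88, "John": 95, "Emily": 72}  # John's and Emily's scores are assumed

keys = list(scores.keys())
i = 0
while i < len(scores):
    print(keys[i], scores[keys[i]])
    i += 1
```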

Example 5: Nested while Loops

A nested while loop is simply a while loop inside another while loop. We provide separate conditions for each while loop. We also need to make sure to do the increments properly so that the code does not go into an infinite loop.

In the following Python while loop example, we have a while loop nested in another while loop:

The result output has been shortened for demonstration purposes.

The first loop goes over integers from 1 to 9. For each iteration of the first while loop (i.e. for each number from 1 to 9), the while loop inside (i.e. the nested loop) is executed. The nested loop in our example also goes over integers from 1 to 9.

The execution inside the second while loop is multiplying the values of i and j . The i is the value from the outer while loop and j is the value from the nested while loop. Thus, the code iterates integers from 1 to 9 for every integer from 1 to 9.

In the result, we have a multiplication table. The important point here is to do the increments accurately. The variables i and j must each be incremented inside their own while loop.
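A sketch of the nested loops that produce the multiplication table. The exact output format is an assumption:

```python
i = 1
while i < 10:
    j = 1  # reset the inner counter for every outer iteration
    while j < 10:
        print(i, "*", j, "=", i * j)
        j += 1  # inner increment belongs to the inner loop
    i += 1      # outer increment belongs to the outer loop
```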

Example 6: Infinite Loops and the break Statement

Since while loops continue execution until the given condition is no longer met, we can end up in an infinite loop if the condition is not set properly.

The following is an example of an infinite while loop. The initial value of i is 10 and the while loop checks if the value of i is greater than 5, which is true. Inside the loop, we print the value of i and increment it by 1 so it’ll always be more than 5 and the while loop will continue execution forever.

Another way to create infinite while loops is by giving the condition as while True .

The break statement provides a way to exit the loop. Even if the condition is still true, when the code sees the break , it’ll exit the loop. Let’s do a Python while loop example to show how break works:

The while loop goes over the scores list and prints the items in it. Before it prints the item, there is an if statement that checks if the item is an integer. If not, the code exits the while loop because of the break statement. If the item is an integer, the loop execution continues and the item is printed.
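A sketch of the break example. The list contents are assumed, apart from the string "fifty" being followed by 65:

```python
scores = [88, 92, "fifty", 65, 71]  # assumed values

printed = []
i = 0
while i < len(scores):
    if not isinstance(scores[i], int):
        break  # leave the loop at the first non-integer item
    print(scores[i])
    printed.append(scores[i])
    i += 1

print(printed)  # [88, 92]
```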

Example 7: Using continue in a while Loop

The continue statement is quite similar to the break statement. The break command exits the while loop so the code continues executing the next piece of code if there is any. On the other hand, continue returns the control to the beginning of the while loop so the execution of the while loop continues using the next value. Let’s repeat the previous while loop example, this time using continue instead of break to understand the difference:

When there’s a break statement and the condition is not met for the value "fifty", the code exits the while loop. However, when we use the continue statement, the execution of the while loop continues from the next item, which is 65.
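The same loop with continue might look like the sketch below (list values assumed as before). Note that the index is advanced before `continue`; otherwise the loop would retry the same item forever:

```python
scores = [88, 92, "fifty", 65, 71]  # assumed values

printed = []
i = 0
while i < len(scores):
    item = scores[i]
    i += 1  # advance first so that `continue` moves on to the next item
    if not isinstance(item, int):
        continue
    print(item)
    printed.append(item)

print(printed)  # [88, 92, 65, 71]
```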

Example 8: A while Loop with the else Clause

In Python, a while loop can be used with an else clause. When the condition in the while loop becomes false, the else block is executed. However, if there is a break statement inside the while loop block, the code exits the loop without executing the else block.

Let’s do an example to better understand this case:

This code searches for a name in the given names list. When the name is found, it prints “Name found!” and exits the loop. If the name is not found after going through all the names in the names list, the else block is executed, which prints “Name not found!”.

If we did not have the break statement inside the while loop, the code block would print both “Name found!” and “Name not found!” if the name exists in the list. Feel free to test it yourself.
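A sketch of the search with an else clause, wrapped in a hypothetical function `search` so both outcomes can be tried. The names in the list are assumed:

```python
def search(names, target):
    i = 0
    while i < len(names):
        if names[i] == target:
            message = "Name found!"
            break  # skipping the else block
        i += 1
    else:
        # runs only when the loop ended because the condition became false
        message = "Name not found!"
    return message


names = ["Max", "Ellie", "Joe"]  # assumed names
print(search(names, "Ellie"))  # Name found!
print(search(names, "Anna"))   # Name not found!
```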

Common while Mistakes and Tips

Python while loops are very useful for writing robust and efficient programs. However, there are some important points to keep in mind if you want to use them properly.

The biggest mistake is to forget about incrementing the loop variable – or not incrementing it correctly. Both may result in infinite loops or loops that do not function as expected. This might need extra attention, especially when working with nested loops.

Another tip: Do not overuse the break statement. Keep in mind that it exits the while loop entirely. If you place a break statement with an if condition, the while loop does not execute the remaining part. Recall the examples we have done on the break and continue statements. It’s better to use the continue statement if you want to complete the entire loop.

Need More Python while Loop Examples?

We learned Python while loops by solving 8 examples. Each example focused on a specific use case or particular component of the while loop. We have also learned the break and continue statements and how they improve the functionality of while loops.

To build your knowledge of while loops, keep practicing and solving more exercises. LearnPython.com offers several interactive online courses that will help you improve your Python skills. You can start with our three-course Python Basics track, which offers the opportunity to practice while learning Python programming from scratch. It contains 259 coding challenges.

If you want to practice working with Python data structures like lists, dictionaries, tuples, or sets, I recommend our course Python Data Structures in Practice . Happy learning!


Python For Loops

A for loop is used for iterating over a sequence (that is either a list, a tuple, a dictionary, a set, or a string).

This is less like the for keyword in other programming languages, and works more like an iterator method as found in other object-oriented programming languages.

With the for loop we can execute a set of statements, once for each item in a list, tuple, set etc.

Print each fruit in a fruit list:

The for loop does not require an indexing variable to be set beforehand.
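A minimal sketch of printing each fruit; the list contents are assumed, apart from "banana", which later examples mention:

```python
fruits = ["apple", "banana", "cherry"]  # assumed list
for x in fruits:
    print(x)
```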

Looping Through a String

Even strings are iterable objects; they contain a sequence of characters:

Loop through the letters in the word "banana":
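For instance, as a short sketch (the `letters` list is added only to record what the loop visited):

```python
letters = []
for x in "banana":
    print(x)
    letters.append(x)
```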

The break Statement

With the break statement we can stop the loop before it has looped through all the items:

Exit the loop when x is "banana":

Exit the loop when x is "banana", but this time the break comes before the print:
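Both placements of break can be sketched side by side. The fruit list is assumed, and the items are collected in lists instead of printed so the difference is easy to see:

```python
fruits = ["apple", "banana", "cherry"]  # assumed list

handled_then_break = []
for x in fruits:
    handled_then_break.append(x)  # the item is handled first ...
    if x == "banana":
        break                     # ... then the loop stops

break_then_handled = []
for x in fruits:
    if x == "banana":
        break                     # the loop stops before "banana" is handled
    break_then_handled.append(x)

print(handled_then_break)  # ['apple', 'banana']
print(break_then_handled)  # ['apple']
```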


The continue Statement

With the continue statement we can stop the current iteration of the loop, and continue with the next:

Do not print banana:
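A sketch with an assumed fruit list:

```python
fruits = ["apple", "banana", "cherry"]  # assumed list

kept = []
for x in fruits:
    if x == "banana":
        continue  # skip the rest of this iteration only
    print(x)
    kept.append(x)
```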

The range() Function

The range() function returns a sequence of numbers, starting from 0 by default, and increments by 1 (by default), and ends at a specified number.

Using the range() function:

Note that range(6) is not the values of 0 to 6, but the values 0 to 5.

The range() function defaults to 0 as a starting value, however it is possible to specify the starting value by adding a parameter: range(2, 6), which means values from 2 to 6 (but not including 6):

Using the start parameter:

The range() function defaults to increment the sequence by 1, however it is possible to specify the increment value by adding a third parameter: range(2, 30, 3):

Increment the sequence by 3 (default is 1):
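The three forms of range() discussed above can be compared directly by converting each to a list:

```python
stop_only = list(range(6))
with_start = list(range(2, 6))
with_step = list(range(2, 30, 3))

print(stop_only)   # [0, 1, 2, 3, 4, 5]
print(with_start)  # [2, 3, 4, 5]
print(with_step)   # [2, 5, 8, 11, 14, 17, 20, 23, 26, 29]
```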

Else in For Loop

The else keyword in a for loop specifies a block of code to be executed when the loop is finished:

Print all numbers from 0 to 5, and print a message when the loop has ended:

Note: The else block will NOT be executed if the loop is stopped by a break statement.

Break the loop when x is 3, and see what happens with the else block:
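Both cases can be sketched with one hypothetical helper, `run`, where the optional `stop_at` argument triggers the break. The message text is assumed:

```python
def run(stop_at=None):
    out = []
    for x in range(6):
        if x == stop_at:
            break
        out.append(x)
    else:
        # reached only when the loop was not stopped by break
        out.append("Finally finished!")
    return out


print(run())   # [0, 1, 2, 3, 4, 5, 'Finally finished!']
print(run(3))  # [0, 1, 2]
```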

Nested Loops

A nested loop is a loop inside a loop.

The "inner loop" will be executed one time for each iteration of the "outer loop":

Print each adjective for every fruit:
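A sketch with assumed adjective and fruit lists:

```python
adjectives = ["red", "big", "tasty"]    # assumed list
fruits = ["apple", "banana", "cherry"]  # assumed list

pairs = []
for x in adjectives:
    for y in fruits:  # runs in full for every adjective
        print(x, y)
        pairs.append((x, y))
```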

The pass Statement

for loops cannot be empty, but if you for some reason have a for loop with no content, put in the pass statement to avoid getting an error.
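For example, a loop body that is intentionally left empty:

```python
for x in [0, 1, 2]:
    pass  # placeholder: without it, the empty body is a syntax error
```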


In Python, we use a while loop to repeat a block of code as long as a certain condition is met. For example,

In the above example, we have used a while loop to print the numbers from 1 to 3. The loop runs as long as the condition number <= 3 is True.
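A loop matching that description can be sketched as:

```python
number = 1
while number <= 3:
    print(number)  # prints 1, 2, 3 on separate lines
    number = number + 1
```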

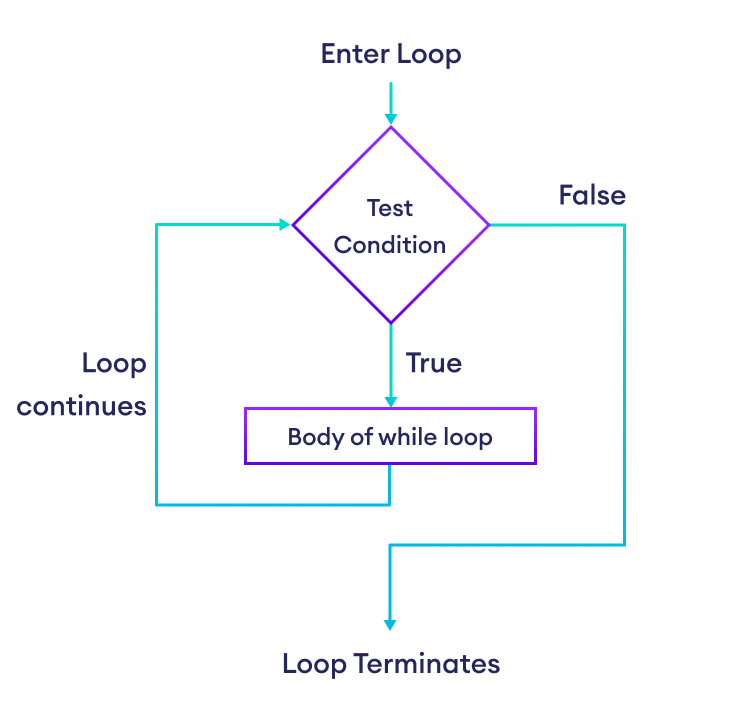

while Loop Syntax

- The while loop evaluates condition, which is a boolean expression.

- If the condition is True, the body of the while loop is executed. The condition is then evaluated again.

- This process continues until the condition is False.

- Once the condition evaluates to False, the loop terminates.

Tip: We should update the variables used in condition inside the loop so that it eventually evaluates to False. Otherwise, the loop keeps running, creating an infinite loop.


Example: Python while Loop

Here is how the above program works:

- It asks the user to enter a number.

- If the user enters a number other than 0, it is printed.

- If the user enters 0, the loop terminates.
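The steps above can be sketched as follows. To keep the example runnable without a keyboard, the entries come from an iterable instead of `input()`; the function name `echo_until_zero` is an assumption:

```python
def echo_until_zero(entries):
    """Collect numbers until a 0 is entered."""
    entries = iter(entries)
    printed = []
    number = next(entries)  # interactive version: int(input("Enter a number: "))
    while number != 0:
        print(f"You entered {number}.")
        printed.append(number)
        number = next(entries)
    return printed


echo_until_zero([3, 7, 0, 9])
```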

Infinite while Loop

If the condition of a while loop always evaluates to True, the loop runs continuously, forming an infinite while loop. For example,

The above program is equivalent to:

More on Python while Loop

We can use a break statement inside a while loop to terminate the loop immediately without checking the test condition. For example,

Here, the condition of the while loop is always True. However, if the user enters end, the loop terminates because of the break statement.
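A sketch of such a loop, again reading from an iterable rather than `input()` so it can run unattended; the function name is hypothetical:

```python
def collect_until_end(words):
    """Collect words until the word 'end' is seen."""
    words = iter(words)
    seen = []
    while True:  # the condition never becomes False on its own
        word = next(words)  # interactive version: input("Enter some text: ")
        if word == "end":
            break  # the only way out of the loop
        seen.append(word)
    return seen


print(collect_until_end(["hello", "world", "end", "ignored"]))  # ['hello', 'world']
```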

Here, on the third iteration, the counter becomes 2 which terminates the loop. It then executes the else block and prints This is inside else block.

Note: The else block will not execute if the while loop is terminated by a break statement.
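A sketch of a while loop with an else clause that matches this description. The loop-body message is an assumption; only the else message appears in the text:

```python
counter = 0
log = []
while counter < 2:
    log.append("This is inside loop")  # assumed message
    counter += 1
else:
    # runs once the condition counter < 2 evaluates to False
    log.append("This is inside else block")

print("\n".join(log))
```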

The for loop is usually used when the number of iterations is known. For example,

The while loop is usually used when the number of iterations is unknown. For example,


Write a function to get the Fibonacci sequence less than a given number.

- The Fibonacci sequence starts with 0 and 1 . Each subsequent number is the sum of the previous two.

- For input 22 , the return value should be [0, 1, 1, 2, 3, 5, 8, 13, 21]
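One possible solution, as a sketch; the function name `fibonacci_below` is an assumption. A while loop fits here because the number of terms is not known in advance:

```python
def fibonacci_below(limit):
    """Return the Fibonacci numbers that are strictly less than `limit`."""
    sequence = []
    a, b = 0, 1
    while a < limit:
        sequence.append(a)
        a, b = b, a + b
    return sequence


print(fibonacci_below(22))  # [0, 1, 1, 2, 3, 5, 8, 13, 21]
```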


Assignment Operators in Python

Python operators are used to perform operations on values and variables. They are special symbols that carry out arithmetic, logical, and bitwise computations. The value an operator acts on is known as the operand. Here, we will cover the different assignment operators in Python.

| Operator | Description | Syntax |
|---|---|---|
| = | Assign the value of the right side of the expression to the left side operand | c = a + b |
| += | Add right side operand with left side operand and then assign the result to left operand | a += b |
| -= | Subtract right side operand from left side operand and then assign the result to left operand | a -= b |
| *= | Multiply right operand with left operand and then assign the result to the left operand | a *= b |
| /= | Divide left operand with right operand and then assign the result to the left operand | a /= b |
| %= | Divide the left operand with the right operand and then assign the remainder to the left operand | a %= b |
| //= | Divide left operand with right operand and then assign the value (floor) to left operand | a //= b |
| **= | Calculate exponent (raise power) value using operands and then assign the result to left operand | a **= b |
| &= | Perform Bitwise AND on operands and assign the result to left operand | a &= b |
| \|= | Perform Bitwise OR on operands and assign the value to left operand | a \|= b |
| ^= | Perform Bitwise XOR on operands and assign the value to left operand | a ^= b |
| >>= | Perform Bitwise right shift on operands and assign the result to left operand | a >>= b |
| <<= | Perform Bitwise left shift on operands and assign the result to left operand | a <<= b |
| := | Assign a value to a variable within an expression | a := exp |

Here are the Assignment Operators in Python with examples.

Assignment Operator

Assignment Operators are used to assign values to variables. This operator is used to assign the value of the right side of the expression to the left side operand.
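As a small sketch, with values assumed for illustration:

```python
a = 3
b = 5
c = a + b  # the right side is evaluated first, then bound to c
print(c)   # 8
```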

Addition Assignment Operator

The Addition Assignment Operator adds the right-hand side operand to the left-hand side operand and then assigns the result to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the addition assignment operator which will first perform the addition operation and then assign the result to the variable on the left-hand side.
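A sketch of such code, assuming the values 3 and 5:

```python
a = 3
b = 5
a += b  # same as a = a + b
print(a)  # 8
```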

Subtraction Assignment Operator

The Subtraction Assignment Operator subtracts the right-hand side operand from the left-hand side operand and then assigns the result to the left-hand side operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the subtraction assignment operator which will first perform the subtraction operation and then assign the result to the variable on the left-hand side.
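A sketch of such code, assuming the values 3 and 5:

```python
a = 3
b = 5
a -= b  # same as a = a - b
print(a)  # -2
```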

Multiplication Assignment Operator

The Multiplication Assignment Operator multiplies the left-hand side operand by the right-hand side operand and then assigns the result to the left-hand side operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the multiplication assignment operator which will first perform the multiplication operation and then assign the result to the variable on the left-hand side.
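A sketch of such code, assuming the values 3 and 5:

```python
a = 3
b = 5
a *= b  # same as a = a * b
print(a)  # 15
```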

Division Assignment Operator

The Division Assignment Operator divides the left-hand side operand by the right-hand side operand and then assigns the result to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the division assignment operator which will first perform the division operation and then assign the result to the variable on the left-hand side.
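A sketch of such code, assuming the values 3 and 5. Note that `/=` performs true division, so the result is a float even for integer operands:

```python
a = 3
b = 5
a /= b  # same as a = a / b
print(a)  # 0.6
```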

Modulus Assignment Operator

The Modulus Assignment Operator divides the left operand by the right operand and assigns the remainder to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the modulus assignment operator which will first perform the modulus operation and then assign the result to the variable on the left-hand side.
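A sketch of such code, assuming the values 3 and 5:

```python
a = 3
b = 5
a %= b  # same as a = a % b
print(a)  # 3
```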

Floor Division Assignment Operator

The Floor Division Assignment Operator divides the left operand by the right operand and then assigns the result (floor value) to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the floor division assignment operator which will first perform the floor division operation and then assign the result to the variable on the left-hand side.
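A sketch of such code, assuming the values 3 and 5:

```python
a = 3
b = 5
a //= b  # same as a = a // b
print(a)  # 0
```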

Exponentiation Assignment Operator

The Exponentiation Assignment Operator raises the left operand to the power of the right operand and then assigns the result to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the exponentiation assignment operator which will first perform exponent operation and then assign the result to the variable on the left-hand side.
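A sketch of such code, assuming the values 3 and 5:

```python
a = 3
b = 5
a **= b  # same as a = a ** b
print(a)  # 243
```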

Bitwise AND Assignment Operator

The Bitwise AND Assignment Operator performs a Bitwise AND operation on both operands and then assigns the result to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the bitwise AND assignment operator which will first perform Bitwise AND operation and then assign the result to the variable on the left-hand side.
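A sketch of such code, assuming the values 3 and 5:

```python
a = 3   # binary 011
b = 5   # binary 101
a &= b  # same as a = a & b, binary 001
print(a)  # 1
```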

Bitwise OR Assignment Operator

The Bitwise OR Assignment Operator performs a Bitwise OR operation on the operands and then assigns the result to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the bitwise OR assignment operator which will first perform bitwise OR operation and then assign the result to the variable on the left-hand side.
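A sketch of such code, assuming the values 3 and 5:

```python
a = 3   # binary 011
b = 5   # binary 101
a |= b  # same as a = a | b, binary 111
print(a)  # 7
```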

Bitwise XOR Assignment Operator

The Bitwise XOR Assignment Operator performs a Bitwise XOR operation on the operands and then assigns the result to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the bitwise XOR assignment operator which will first perform bitwise XOR operation and then assign the result to the variable on the left-hand side.
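A sketch of such code, assuming the values 3 and 5:

```python
a = 3   # binary 011
b = 5   # binary 101
a ^= b  # same as a = a ^ b, binary 110
print(a)  # 6
```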

Bitwise Right Shift Assignment Operator

The Bitwise Right Shift Assignment Operator shifts the bits of the left operand to the right by the number of positions given by the right operand and then assigns the result to the left operand.

Example: In this code we have two variables ‘a’ and ‘b’ and assigned them with some integer value. Then we have used the bitwise right shift assignment operator which will first perform bitwise right shift operation and then assign the result to the variable on the left-hand side.
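A minimal sketch of the right shift assignment operator. The values are assumptions chosen for illustration. For non-negative integers, shifting right by `b` positions gives the same result as floor division by `2 ** b`.

```python
# Illustrative values (assumed, not from the original article)
a = 40  # 0b101000
b = 2

# Right shift assignment: same as a = a >> b
a >>= b

print(a)       # 10
print(bin(a))  # 0b1010
```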

Bitwise Left Shift Assignment Operator

The Bitwise Left Shift Assignment Operator shifts the bits of the left operand to the left by the number of positions given by the right operand and then assigns the result to the left operand.

Example: In this code, we have two variables, ‘a’ and ‘b’, each assigned an integer value. We then use the bitwise left shift assignment operator, which first performs the bitwise left shift operation and then assigns the result to the variable on the left-hand side.
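A minimal sketch of the left shift assignment operator, with assumed values. Shifting an integer left by `b` positions gives the same result as multiplying it by `2 ** b`.

```python
# Illustrative values (assumed, not from the original article)
a = 5  # 0b101
b = 3

# Left shift assignment: same as a = a << b
a <<= b

print(a)       # 40
print(bin(a))  # 0b101000
```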

Walrus Operator

The Walrus Operator is an assignment operator introduced in Python 3.8. It assigns a value to a variable as part of an expression.

Example: In this code, we have a Python list of integers and use the walrus operator in the condition of a Python while loop. The operator evaluates the expression on the right-hand side, assigns the result to the left-hand side operand ‘x’, and then the remaining code runs with that value.
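A sketch of the kind of loop described above. The list contents, the use of `len()` in the condition, and the `seen_lengths` helper list are assumptions made for illustration. Only the variable name `x` comes from the text.

```python
# Illustrative list of integers (assumed contents)
numbers = [1, 2, 3, 4, 5]
seen_lengths = []  # hypothetical helper, records each value of x

# x is assigned the current length and compared with 2 in one expression
while (x := len(numbers)) > 2:
    numbers.pop()            # remove the last element
    seen_lengths.append(x)
    print(x)                 # prints 5, then 4, then 3

print(numbers)  # [1, 2]
```

The parentheses around `x := len(numbers)` matter: without them, `x` would be assigned the result of the comparison (a bool) rather than the length.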

Assignment Operators in Python – FAQs

What are Assignment Operators in Python?

Assignment operators in Python are used to assign values to variables. These operators can also perform additional operations during the assignment. The basic assignment operator is =, which simply assigns the value of the right-hand operand to the left-hand operand. Other common assignment operators include +=, -=, *=, /=, and %=, among others, which perform an operation on the variable and then assign the result back to the variable.

What is the := Operator in Python?

The := operator, introduced in Python 3.8, is known as the “walrus operator”. It is an assignment expression, which means that it assigns values to variables as part of a larger expression. Its main benefit is that it allows you to assign values to variables within expressions, including within conditions of loops and if statements, thereby reducing the need for additional lines of code. Here’s an example:

    # Example of using the walrus operator in a while loop
    while (n := int(input("Enter a number (0 to stop): "))) != 0:
        print(f"You entered: {n}")

This loop continues to prompt the user for input and immediately uses that input in both the condition check and the loop body.

What is the Assignment Operator in Structure?

In programming languages that use structures (like C or C++), the assignment operator = is used to copy values from one structure variable to another. Each member of the structure is copied from the source structure to the destination structure. Python, however, does not have a built-in concept of ‘structures’ as in C or C++; instead, similar functionality is achieved through classes or dictionaries.

What is the Assignment Operator in Python Dictionary?

In Python dictionaries, the assignment operator = is used to assign a new key-value pair to the dictionary or update the value of an existing key. Here’s how you might use it:

    my_dict = {}  # Create an empty dictionary
    my_dict['key1'] = 'value1'  # Assign a new key-value pair
    my_dict['key1'] = 'updated value'  # Update the value of an existing key
    print(my_dict)  # Output: {'key1': 'updated value'}

What is += and -= in Python?

The += and -= operators in Python are compound assignment operators. += adds the right-hand operand to the left-hand operand and assigns the result to the left-hand operand. Conversely, -= subtracts the right-hand operand from the left-hand operand and assigns the result to the left-hand operand. Here are examples of both:

    # Example of using +=
    a = 5
    a += 3  # Equivalent to a = a + 3
    print(a)  # Output: 8

    # Example of using -=
    b = 10
    b -= 4  # Equivalent to b = b - 4
    print(b)  # Output: 6

These operators make code more concise and are commonly used in loops and iterative data processing.

Assignment Expressions: The Walrus Operator

In this lesson, you’ll learn about the biggest change in Python 3.8: the introduction of assignment expressions. Assignment expressions are written with a new notation (:=). This operator is often called the walrus operator as it resembles the eyes and tusks of a walrus on its side.

Assignment expressions allow you to assign and return a value in the same expression. For example, if you want to assign to a variable and print its value, then you typically do something like this:
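A minimal sketch of the two-statement version, following the names and values used in the video transcript:

```python
# Assign first, then print in a separate statement
walrus = False
print(walrus)  # False
```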

In Python 3.8, you’re allowed to combine these two statements into one, using the walrus operator:
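A minimal sketch of the combined form, again following the transcript (requires Python 3.8 or later):

```python
# Assign True to walrus and pass the same value to print()
print(walrus := True)  # True

# The name is still bound after the call
print(type(walrus))  # <class 'bool'>
```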

The assignment expression allows you to assign True to walrus, and immediately print the value. But keep in mind that the walrus operator does not do anything that isn’t possible without it. It only makes certain constructs more convenient, and can sometimes communicate the intent of your code more clearly.

One pattern that shows some of the strengths of the walrus operator is while loops where you need to initialize and update a variable. For example, the following code asks the user for input until they type quit:
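A sketch of this first version, reconstructed from the transcript's description of write_something.py. Wrapping the loop in a function named `collect_inputs` with a `read` parameter is an assumption made here so the loop can be exercised without a keyboard. The lesson's script calls input() directly at module level.

```python
def collect_inputs(read=input):
    """Collect lines until the user types quit (hypothetical wrapper)."""
    inputs = list()
    current = read("Write something: ")
    while current != "quit":
        inputs.append(current)
        current = read("Write something: ")  # the repeated input call
    return inputs


# Scripted answers stand in for a user typing at the prompt
answers = iter(["Hello", "Welcome", "quit"])
result = collect_inputs(lambda prompt: next(answers))
print(result)  # ['Hello', 'Welcome']
```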

This code is less than ideal. You’re repeating the input() statement, and somehow you need to add current to the list before asking the user for it. A better solution is to set up an infinite while loop, and use break to stop the loop:
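A sketch of the infinite-loop version with break, based on the transcript. As before, the `collect_inputs` wrapper and its `read` parameter are assumptions added so the loop can run without interactive input.

```python
def collect_inputs(read=input):
    """Collect lines until the user types quit (hypothetical wrapper)."""
    inputs = list()
    while True:
        current = read("Write something: ")  # input is requested in one place only
        if current == "quit":
            break
        inputs.append(current)
    return inputs


# Scripted answers stand in for a user typing at the prompt
answers = iter(["Hello", "Welcome", "quit"])
result = collect_inputs(lambda prompt: next(answers))
print(result)  # ['Hello', 'Welcome']
```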

This code is equivalent to the code above, but avoids the repetition and somehow keeps the lines in a more logical order. If you use an assignment expression, then you can simplify this loop further:
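A sketch of the assignment-expression version, based on the transcript (requires Python 3.8 or later). The `collect_inputs` wrapper and its `read` parameter are again assumptions for illustration.

```python
def collect_inputs(read=input):
    """Collect lines until the user types quit (hypothetical wrapper)."""
    inputs = list()
    # Assign the new input to current and test it in the same expression
    while (current := read("Write something: ")) != "quit":
        inputs.append(current)
    return inputs


# Scripted answers stand in for a user typing at the prompt
answers = iter(["Hello", "Welcome", "quit"])
result = collect_inputs(lambda prompt: next(answers))
print(result)  # ['Hello', 'Welcome']
```

The parentheses around the assignment expression are required here. Without them, := would bind more loosely than !=, and current would hold the result of the comparison instead of the text that was read.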

This moves the test back to the while line, where it should be. However, there are now several things happening at that line, so it takes a bit more effort to read it properly. Use your best judgement about when the walrus operator helps make your code more readable.

PEP 572 describes all the details of assignment expressions, including some of the rationale for introducing them into the language, as well as several examples of how the walrus operator can be used. The Python 3.8 documentation also includes some good examples of assignment expressions.

Here are a few resources for more info on using bpython, the REPL (Read–Eval–Print Loop) tool used in most of these videos:

- Discover bpython: A Python REPL With IDE-Like Features

- A better Python REPL: bpython vs python

- bpython Homepage

- bpython Docs

00:00 In this video, you’ll learn about what’s being called the walrus operator. One of the biggest changes in Python 3.8 is the introduction of these assignment expressions. So, what does it do?

00:12 Well, it allows the assignment and the return of a value in the same expression, using a new notation. On the left side, you’d have the name of the object that you’re assigning, and then you have the operator, a colon and an equal sign ( := ), affectionately known as the walrus operator as it resembles the eyes and tusks of a walrus on its side.

00:32 And it’s assigning this expression on the right side, so it’s assigning and returning the value in the same expression. Let me have you practice with this operator with some code.

00:44 Throughout this tutorial, when I use a REPL, I’m going to be using this custom REPL called bpython . I’ll include links on how to install bpython below this video.

00:53 So, how do you use this assignment operator? Let me have you start with a small example. You could have an object named walrus and assign it the value of False , and then you could print it. In Python 3.8, you can combine those two statements and do a single statement using the walrus operator. So inside of print() , you could say walrus , the new object, and use the operator, the assignment expression := , and a space, and then say True . That’s going to do two things. Most notably, in reverse order, it returned the value True . And then it also assigned the value to walrus , and of course the type of 'bool' .

01:38 Keep in mind, the walrus operator doesn’t do anything that isn’t possible without it. It only makes certain constructs a bit more convenient, and can sometimes communicate the intent of your code more clearly.

01:48 Let me show you another example. It’s a pattern that shows some of the strengths of the walrus operator inside of while loops, where you need to initialize and update a variable. For example, create a new file, and name it write_something.py . Here’s write_something.py .

02:09 It starts with inputs , which will be a list. So create a list called inputs .

02:16 Into an object named current , use an input() statement. The input() statement is going to provide a prompt and read a string in from standard input. The prompt will be this, "Write something: " .

02:28 So when the user inputs that, that’ll go into current . So while current != "quit" — if the person has not typed quit yet— you’re going to take inputs and append the current value.

02:44 And then here, you’re asking to "Write something: " again.

02:50 Down here at my terminal, after saving—let’s see, make sure you’re saved. Okay. Now that’s saved.

03:00 So here, I could say, Hello , Welcome , and then finally quit , which then would quit it. So, this code isn’t ideal.

03:08 You’re repeating the input() statement twice, and somehow you need to add current to the list before asking the user for it. So a better solution is going to be to set up maybe an infinite while loop, and then use a break to stop the loop. How would that look?

03:22 You’re going to rearrange this a little bit. Move the while loop up, and say while True:

03:35 and here say if current == "quit": then break . Otherwise, go ahead and append it. So, a little different here, but this is a while loop that’s going to continue as long as it doesn’t get broken out of by someone typing quit . Okay.

03:53 Running it again. And there, you can see it breaking out. Nice. So, that code avoids the repetition and kind of keeps things in a more logical order, but there’s a way to simplify this to use that new assignment expression, the walrus operator. In that case, you’re going to modify this quite a bit.

04:17 Here you’re going to say while , current and then use that assignment operator ( := ) to create current .

04:23 But also, while doing that, check to see that it’s not equal to "quit" . So here, each time that assigns the value to current and it’s returned, so the value can be checked.

04:35 So while , current , assigning the value from the input() , and then if it’s not equal to "quit" , you’re going to append current . Make sure to save.

04:42 Run the code one more time.

04:47 And it works the same. This moves that test all the way back to the while line, where it should be. However, there’s a couple of things now happening all in one line, and that might take a little more effort to read what’s happening and to understand it properly.

05:00 There are a handful of other examples that you could look into to learn a little more about assignment expressions. I’ll include a link to PEP 572, and also a link to the Python docs for version 3.8, both of which include more code examples.

05:14 So you need to use your best judgment as to when this operator’s going to make your code more readable and more useful. In the next video, you’ll learn about the new feature of positional-only arguments.

rajeshboyalla on Dec. 4, 2019

Why do you use list() to initialize a list rather than using [] ?

Geir Arne Hjelle RP Team on Dec. 4, 2019

My two cents about list() vs [] (I wrote the original article this video series is based on):

- I find spelling out list() to be more readable and easier to notice and interpret than []

- [] is several times faster than list() , but we’re still talking nanoseconds. On my computer [] takes about 15ns, while list() runs in 60ns. Typically, lists are initiated once, so this does not cause any meaningful slowdown of code.

That said, if I’m initializing a list with existing elements, I usually use [elem1, elem2, ...] , since list(...) has different–and sometimes surprising–semantics.

Jason on April 3, 2020

Sorry for my ignorance, did the standard assignment = operator work in this way? I don’t understand what has been gained from adding the := operator. If anything I think it will allow people to write more obfuscated code. But minds better than mine have been working on this, so I’ll have to take their word it is an improvement.

As for the discussion on whether [] is more readable than list(). I’d never seen list() before, so to me [] is better. I’ve only just come over from the dark 2.7 side so maybe it’s an old python programmer thing?

Oh I checked the operation on the assignment operator. I was obviously wrong. lol Still I think the existing operator could’ve been tweaked to do the same thing as := … I’m still on the fence about that one.

gedece on April 3, 2020