
What Is a Case Study? | Definition, Examples & Methods

Published on May 8, 2019 by Shona McCombes. Revised on November 20, 2023.

A case study is a detailed study of a specific subject, such as a person, group, place, event, organization, or phenomenon. Case studies are commonly used in social, educational, clinical, and business research.

A case study research design usually involves qualitative methods, but quantitative methods are sometimes also used. Case studies are good for describing, comparing, evaluating, and understanding different aspects of a research problem.

Table of contents

- When to do a case study

- Step 1: Select a case

- Step 2: Build a theoretical framework

- Step 3: Collect your data

- Step 4: Describe and analyze the case

When to do a case study

A case study is an appropriate research design when you want to gain concrete, contextual, in-depth knowledge about a specific real-world subject. It allows you to explore the key characteristics, meanings, and implications of the case.

Case studies are often a good choice in a thesis or dissertation. They keep your project focused and manageable when you don’t have the time or resources to do large-scale research.

You might use just one complex case study where you explore a single subject in depth, or conduct multiple case studies to compare and illuminate different aspects of your research problem.

| Research question | Case study |
|---|---|
| What are the ecological effects of wolf reintroduction? | Case study of wolf reintroduction in Yellowstone National Park |
| How do populist politicians use narratives about history to gain support? | Case studies of Hungarian prime minister Viktor Orbán and US president Donald Trump |
| How can teachers implement active learning strategies in mixed-level classrooms? | Case study of a local school that promotes active learning |
| What are the main advantages and disadvantages of wind farms for rural communities? | Case studies of three rural wind farm development projects in different parts of the country |
| How are viral marketing strategies changing the relationship between companies and consumers? | Case study of the iPhone X marketing campaign |
| How do experiences of work in the gig economy differ by gender, race and age? | Case studies of Deliveroo and Uber drivers in London |


Step 1: Select a case

Once you have developed your problem statement and research questions, you should be ready to choose the specific case that you want to focus on. A good case study should have the potential to:

- Provide new or unexpected insights into the subject

- Challenge or complicate existing assumptions and theories

- Propose practical courses of action to resolve a problem

- Open up new directions for future research

Tip: If your research is more practical in nature and aims to simultaneously investigate an issue as you solve it, consider conducting action research instead.

Unlike quantitative or experimental research, a strong case study does not require a random or representative sample. In fact, case studies often deliberately focus on unusual, neglected, or outlying cases that may shed new light on the research problem.

Example of an outlying case study: In the 1960s, the town of Roseto, Pennsylvania, was discovered to have extremely low rates of heart disease compared to the US average. It became an important case study for understanding previously neglected causes of heart disease.

However, you can also choose a more common or representative case to exemplify a particular category, experience or phenomenon.

Example of a representative case study: In the 1920s, two sociologists used Muncie, Indiana, as a case study of a typical American city that supposedly exemplified the changing culture of the US at the time.

Step 2: Build a theoretical framework

While case studies focus more on concrete details than general theories, they should usually have some connection with theory in the field. This way the case study is not just an isolated description, but is integrated into existing knowledge about the topic. It might aim to:

- Exemplify a theory by showing how it explains the case under investigation

- Expand on a theory by uncovering new concepts and ideas that need to be incorporated

- Challenge a theory by exploring an outlier case that doesn’t fit with established assumptions

To ensure that your analysis of the case has a solid academic grounding, you should conduct a literature review of sources related to the topic and develop a theoretical framework. This means identifying key concepts and theories to guide your analysis and interpretation.

Step 3: Collect your data

There are many different research methods you can use to collect data on your subject. Case studies tend to focus on qualitative data using methods such as interviews, observations, and analysis of primary and secondary sources (e.g., newspaper articles, photographs, official records). Sometimes a case study will also collect quantitative data.

Example of a mixed methods case study: For a case study of a wind farm development in a rural area, you could collect quantitative data on employment rates and business revenue, collect qualitative data on local people’s perceptions and experiences, and analyze local and national media coverage of the development.

The aim is to gain as thorough an understanding as possible of the case and its context.


Step 4: Describe and analyze the case

In writing up the case study, you need to bring together all the relevant aspects to give as complete a picture as possible of the subject.

How you report your findings depends on the type of research you are doing. Some case studies are structured like a standard scientific paper or thesis, with separate sections or chapters for the methods, results, and discussion.

Others are written in a more narrative style, aiming to explore the case from various angles and analyze its meanings and implications (for example, by using textual analysis or discourse analysis).

In all cases, though, make sure to give contextual details about the case, connect it back to the literature and theory, and discuss how it fits into wider patterns or debates.



McCombes, S. (2023, November 20). What Is a Case Study? | Definition, Examples & Methods. Scribbr. Retrieved September 23, 2024, from https://www.scribbr.com/methodology/case-study/



Case Study | Definition, Examples & Methods

Published on 5 May 2022 by Shona McCombes. Revised on 30 January 2023.

A case study is a detailed study of a specific subject, such as a person, group, place, event, organisation, or phenomenon. Case studies are commonly used in social, educational, clinical, and business research.

A case study research design usually involves qualitative methods, but quantitative methods are sometimes also used. Case studies are good for describing, comparing, evaluating, and understanding different aspects of a research problem.

Table of contents

- When to do a case study

- Step 1: Select a case

- Step 2: Build a theoretical framework

- Step 3: Collect your data

- Step 4: Describe and analyse the case

When to do a case study

A case study is an appropriate research design when you want to gain concrete, contextual, in-depth knowledge about a specific real-world subject. It allows you to explore the key characteristics, meanings, and implications of the case.

Case studies are often a good choice in a thesis or dissertation. They keep your project focused and manageable when you don’t have the time or resources to do large-scale research.

You might use just one complex case study where you explore a single subject in depth, or conduct multiple case studies to compare and illuminate different aspects of your research problem.

| Research question | Case study |
|---|---|
| What are the ecological effects of wolf reintroduction? | Case study of wolf reintroduction in Yellowstone National Park in the US |
| How do populist politicians use narratives about history to gain support? | Case studies of Hungarian prime minister Viktor Orbán and US president Donald Trump |
| How can teachers implement active learning strategies in mixed-level classrooms? | Case study of a local school that promotes active learning |
| What are the main advantages and disadvantages of wind farms for rural communities? | Case studies of three rural wind farm development projects in different parts of the country |
| How are viral marketing strategies changing the relationship between companies and consumers? | Case study of the iPhone X marketing campaign |
| How do experiences of work in the gig economy differ by gender, race, and age? | Case studies of Deliveroo and Uber drivers in London |


Step 1: Select a case

Once you have developed your problem statement and research questions, you should be ready to choose the specific case that you want to focus on. A good case study should have the potential to:

- Provide new or unexpected insights into the subject

- Challenge or complicate existing assumptions and theories

- Propose practical courses of action to resolve a problem

- Open up new directions for future research

Unlike quantitative or experimental research, a strong case study does not require a random or representative sample. In fact, case studies often deliberately focus on unusual, neglected, or outlying cases which may shed new light on the research problem.

If you find yourself aiming to simultaneously investigate and solve an issue, consider conducting action research. As its name suggests, action research conducts research and takes action at the same time, and is highly iterative and flexible.

However, you can also choose a more common or representative case to exemplify a particular category, experience, or phenomenon.

Step 2: Build a theoretical framework

While case studies focus more on concrete details than general theories, they should usually have some connection with theory in the field. This way the case study is not just an isolated description, but is integrated into existing knowledge about the topic. It might aim to:

- Exemplify a theory by showing how it explains the case under investigation

- Expand on a theory by uncovering new concepts and ideas that need to be incorporated

- Challenge a theory by exploring an outlier case that doesn’t fit with established assumptions

To ensure that your analysis of the case has a solid academic grounding, you should conduct a literature review of sources related to the topic and develop a theoretical framework. This means identifying key concepts and theories to guide your analysis and interpretation.

Step 3: Collect your data

There are many different research methods you can use to collect data on your subject. Case studies tend to focus on qualitative data using methods such as interviews, observations, and analysis of primary and secondary sources (e.g., newspaper articles, photographs, official records). Sometimes a case study will also collect quantitative data.

The aim is to gain as thorough an understanding as possible of the case and its context.

Step 4: Describe and analyse the case

In writing up the case study, you need to bring together all the relevant aspects to give as complete a picture as possible of the subject.

How you report your findings depends on the type of research you are doing. Some case studies are structured like a standard scientific paper or thesis, with separate sections or chapters for the methods, results, and discussion.

Others are written in a more narrative style, aiming to explore the case from various angles and analyse its meanings and implications (for example, by using textual analysis or discourse analysis).

In all cases, though, make sure to give contextual details about the case, connect it back to the literature and theory, and discuss how it fits into wider patterns or debates.


McCombes, S. (2023, January 30). Case Study | Definition, Examples & Methods. Scribbr. Retrieved 23 September 2024, from https://www.scribbr.co.uk/research-methods/case-studies/


A Guide To Secondary Data Analysis

What is secondary data analysis? How do you carry it out? Find out in this post.

Historically, the only way data analysts could obtain data was to collect it themselves. This type of data is often referred to as primary data and is still a vital resource for data analysts.

However, technological advances over the last few decades mean that much past data is now readily available online for data analysts and researchers to access and utilize. This type of data—known as secondary data—is driving a revolution in data analytics and data science.

Primary and secondary data share many characteristics. However, there are some fundamental differences in how you prepare and analyze secondary data. This post explores the unique aspects of secondary data analysis. We’ll briefly review what secondary data is before outlining how to source, collect, and validate it. We’ll cover:

- What is secondary data analysis?

- How to carry out secondary data analysis (5 steps)

- Summary and further reading

Ready for a crash course in secondary data analysis? Let’s go!

1. What is secondary data analysis?

Secondary data analysis uses data collected by somebody else. This contrasts with primary data analysis, which involves a researcher collecting predefined data to answer a specific question. Secondary data analysis has numerous benefits, not least that it is a time- and cost-effective way of obtaining data without doing the research yourself.

It’s worth noting here that secondary data may be primary data for the original researcher. It only becomes secondary data when it’s repurposed for a new task. As a result, a dataset can simultaneously be a primary data source for one researcher and a secondary data source for another. So don’t panic if you get confused! We explain exactly what secondary data is in this guide.

In reality, the statistical techniques used to carry out secondary data analysis are no different from those used to analyze other kinds of data. The main differences lie in collection and preparation. Once the data have been reviewed and prepared, the analytics process continues more or less as it usually does. For a recap on what the data analysis process involves, read this post.

In the following sections, we’ll focus specifically on the preparation of secondary data for analysis. Where appropriate, we’ll refer to primary data analysis for comparison.

2. How to carry out secondary data analysis

Step 1: Define a research topic

The first step in any data analytics project is defining your goal. This is true regardless of the data you’re working with, or the type of analysis you want to carry out. In data analytics lingo, this typically involves defining:

- A statement of purpose

- Research design

Defining a statement of purpose and a research approach are both fundamental building blocks for any project. However, for secondary data analysis, the process of defining these differs slightly. Let’s find out how.

Step 2: Establish your statement of purpose

Before beginning any data analytics project, you should always have a clearly defined intent. This is called a ‘statement of purpose.’ A healthcare analyst’s statement of purpose, for example, might be: ‘Reduce admissions for mental health issues relating to Covid-19’. The more specific the statement of purpose, the easier it is to determine which data to collect, analyze, and draw insights from.

A statement of purpose is helpful for both primary and secondary data analysis. It’s especially relevant for secondary data analysis, though. This is because there are vast amounts of secondary data available. Having a clear direction will keep you focused on the task at hand, saving you from becoming overwhelmed. Being selective with your data sources is key.

Step 3: Design your research process

After defining your statement of purpose, the next step is to design the research process. For primary data, this involves determining the types of data you want to collect (e.g. quantitative, qualitative, or both) and a methodology for gathering them.

For secondary data analysis, however, your research process will more likely be a step-by-step guide outlining the types of data you require and a list of potential sources for gathering them. It may also include (realistic) expectations of the output of the final analysis. This should be based on a preliminary review of the data sources and their quality.

Once you have both your statement of purpose and research design, you’re in a far better position to narrow down potential sources of secondary data. You can then start with the next step of the process: data collection.

Step 4: Locate and collect your secondary data

Collecting primary data involves devising and executing a complex strategy that can be very time-consuming to manage. The data you collect, though, will be highly relevant to your research problem.

Secondary data collection, meanwhile, avoids the complexity of defining a research methodology. However, it comes with additional challenges. One of these is identifying where to find the data. This is no small task because there are a great many repositories of secondary data available. Your job, then, is to narrow down potential sources. As already mentioned, it’s necessary to be selective, or else you risk becoming overloaded.

Some popular sources of secondary data include:

- Government statistics, e.g. demographic data, censuses, or surveys, collected by government agencies/departments (like the US Bureau of Labor Statistics).

- Technical reports summarizing completed or ongoing research from educational or public institutions (colleges or government).

- Scientific journals that outline research methodologies and data analysis by experts in fields like the sciences, medicine, etc.

- Literature reviews of research articles, books, and reports, for a given area of study (once again, carried out by experts in the field).

- Trade/industry publications, e.g. articles and data shared in trade publications, covering topics relating to specific industry sectors, such as tech or manufacturing.

- Online resources, e.g. repositories, databases, and other reference libraries with public or paid access to secondary data sources.

Once you’ve identified appropriate sources, you can go about collecting the necessary data. This may involve contacting other researchers, paying a fee to an organization in exchange for a dataset, or simply downloading a dataset for free online.

Step 5: Evaluate your secondary data

Secondary data is usually well-structured, so you might assume that once you have your hands on a dataset, you’re ready to dive in with a detailed analysis. Unfortunately, that’s not the case!

First, you must carry out a careful review of the data. Why? To ensure that they’re appropriate for your needs. This involves two main tasks:

- Evaluating the secondary dataset’s relevance

- Assessing its broader credibility

Both these tasks require critical thinking skills. However, they aren’t heavily technical. This means anybody can learn to carry them out.

Let’s now take a look at each in a bit more detail.

Evaluating the secondary dataset’s relevance

The main point of evaluating a secondary dataset is to see if it is suitable for your needs. This involves asking some probing questions about the data, including:

What was the data’s original purpose?

Understanding why the data were originally collected will tell you a lot about their suitability for your current project. For instance, was the project carried out by a government agency or a private company for marketing purposes? The answer may provide useful information about the population sample, the data demographics, and even the wording of specific survey questions. All this can help you determine if the data are right for you, or if they are biased in any way.

When and where were the data collected?

Over time, populations and demographics change. Identifying when the data were first collected can provide invaluable insights. For instance, a dataset that initially seems suited to your needs may be out of date.

On the flip side, you might want past data so you can draw a comparison with a present dataset. In this case, you’ll need to ensure the data were collected during the appropriate time frame. It’s worth mentioning that secondary data are the sole source of past data. You cannot collect historical data using primary data collection techniques.

Similarly, you should ask where the data were collected. Do they represent the geographical region you require? Does geography even have an impact on the problem you are trying to solve?
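The time and place questions above can be turned into a quick screening step before any deeper review. The following is a minimal sketch, not a prescribed method: the record layout, the field names (`collected_on`, `region`), and the sample values are all assumptions made up for illustration.

```python
from datetime import date

# Hypothetical records from a secondary dataset; the field names are assumptions.
records = [
    {"id": 1, "collected_on": date(2012, 5, 14), "region": "London"},
    {"id": 2, "collected_on": date(2019, 11, 2), "region": "London"},
    {"id": 3, "collected_on": date(2020, 1, 20), "region": "Manchester"},
    {"id": 4, "collected_on": date(2021, 3, 8), "region": "London"},
]


def in_scope(record, start, end, regions):
    """Return True if the record falls inside the time window and region set."""
    return start <= record["collected_on"] <= end and record["region"] in regions


def coverage_report(records, start, end, regions):
    """Return the in-scope records and the share of the dataset they make up."""
    usable = [r for r in records if in_scope(r, start, end, regions)]
    share = len(usable) / len(records) if records else 0.0
    return usable, share


usable, share = coverage_report(
    records, date(2019, 1, 1), date(2021, 12, 31), {"London"}
)
print([r["id"] for r in usable], f"{share:.0%}")
```

A low usable share does not rule a dataset out, but it is a prompt to check whether the remaining records still represent the population you care about.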

What data were collected and how?

A final report for past data analytics is great for summarizing key characteristics or findings. However, if you’re planning to use those data for a new project, you’ll need the original documentation. At the very least, this should include access to the raw data and an outline of the methodology used to gather them. This can be helpful for many reasons. For instance, you may find raw data that wasn’t relevant to the original analysis, but which might benefit your current task.

What questions were participants asked?

We’ve already touched on this, but the wording of survey questions—especially for qualitative datasets—is significant. Questions may deliberately be phrased to preclude certain answers. A question’s context may also impact the findings in a way that’s not immediately obvious. Understanding these issues will shape how you perceive the data.

What is the form/shape/structure of the data?

Finally, to practical issues. Is the structure of the data suitable for your needs? Is it compatible with other sources or with your preferred analytics approach? This is purely a structural issue. For instance, if a dataset of people’s ages is saved as categorical variables (such as age brackets) rather than continuous ones, this could potentially impact your analysis. In general, reviewing a dataset’s structure helps you better understand how the data are categorized, allowing you to account for any discrepancies. You may also need to tidy the data to ensure they are consistent with any other sources you’re using.
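One common structural mismatch is that one source records exact ages while another only records age brackets. The sketch below shows one possible way to reconcile them, by coarsening the exact ages to the brackets. The bracket boundaries, variable names, and counts are hypothetical; a real dataset's codebook would define its own.

```python
# Hypothetical bracket boundaries (inclusive); a real codebook defines its own.
BRACKETS = [(0, 17, "0-17"), (18, 34, "18-34"), (35, 54, "35-54"), (55, 120, "55+")]


def to_bracket(age):
    """Map an exact age to the label of the bracket that contains it."""
    for low, high, label in BRACKETS:
        if low <= age <= high:
            return label
    raise ValueError(f"age out of range: {age}")


def bracket_counts(ages):
    """Count how many exact ages fall into each bracket."""
    counts = {label: 0 for _, _, label in BRACKETS}
    for age in ages:
        counts[to_bracket(age)] += 1
    return counts


# Exact ages from one source, pre-bracketed counts from another (made-up values).
primary_ages = [16, 22, 34, 35, 41, 67]
secondary_counts = {"0-17": 3, "18-34": 10, "35-54": 7, "55+": 5}

primary_counts = bracket_counts(primary_ages)
combined = {
    label: primary_counts[label] + secondary_counts[label]
    for label in primary_counts
}
print(combined)
```

The conversion only works in one direction: exact ages can be grouped into brackets, but brackets cannot be turned back into exact ages, so the combined data are limited to the coarser structure.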

This is just a sample of the types of questions you need to consider when reviewing a secondary data source. The answers will have a clear impact on whether the dataset—no matter how well presented or structured it seems—is suitable for your needs.

Assessing secondary data’s credibility

After identifying a potentially suitable dataset, you must double-check the credibility of the data. Namely, are the data accurate and unbiased? To figure this out, here are some key questions you might want to include:

What are the credentials of those who carried out the original research?

Do you have access to the details of the original researchers? What are their credentials? Where did they study? Are they an expert in the field or a newcomer? Data collection by an undergraduate student, for example, may not be as rigorous as that of a seasoned professor.

And did the original researcher work for a reputable organization? What other affiliations do they have? For instance, if a researcher who works for a tobacco company gathers data on the effects of vaping, this represents an obvious conflict of interest! Questions like this help determine how thorough or qualified the researchers are and if they have any potential biases.

Do you have access to the full methodology?

Does the dataset include a clear methodology, explaining in detail how the data were collected? This should be more than a simple overview; it must be a clear breakdown of the process, including justifications for the approach taken. This allows you to determine if the methodology was sound. If you find flaws (or no methodology at all) it throws the quality of the data into question.

How consistent are the data with other sources?

Do the secondary data match with any similar findings? If not, that doesn’t necessarily mean the data are wrong, but it does warrant closer inspection. Perhaps the collection methodology differed between sources, or maybe the data were analyzed using different statistical techniques. Or perhaps unaccounted-for outliers are skewing the analysis. Identifying all these potential problems is essential. A flawed or biased dataset can still be useful but only if you know where its shortcomings lie.
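A rough consistency check between two sources can be sketched in a few lines. This is an illustrative heuristic, not a standard test: the 10% tolerance, the function names, and the sample values are assumptions, and the relative gap as written only makes sense for positive measurements.

```python
from statistics import mean, median


def relative_gap(a, b):
    """Relative difference between two positive values, as a fraction of their average."""
    return abs(a - b) / ((a + b) / 2)


def compare_sources(ours, theirs, tolerance=0.10):
    """Compare two samples of the same measure on mean and median."""
    report = {
        "mean_gap": relative_gap(mean(ours), mean(theirs)),
        "median_gap": relative_gap(median(ours), median(theirs)),
    }
    # Any gap above the tolerance warrants a closer look at the sources.
    report["needs_review"] = any(
        gap > tolerance for gap in (report["mean_gap"], report["median_gap"])
    )
    # Means disagree while medians agree: a hint that outliers may be skewing one source.
    report["possible_outliers"] = report["mean_gap"] > tolerance >= report["median_gap"]
    return report


# Made-up samples: source_b contains one extreme value.
source_a = [30, 32, 31, 29, 33]
source_b = [30, 32, 31, 29, 330]

report = compare_sources(source_a, source_b)
print(report)
```

The median is much less sensitive to extreme values than the mean, which is why agreement on one but not the other points toward outliers rather than a difference in methodology.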

Have the data been published in any credible research journals?

Finally, have the data been used in well-known studies or published in any journals? If so, how reputable are the journals? In general, you can judge a dataset’s quality based on where it has been published. If in doubt, check out the publication in question on the Directory of Open Access Journals. The directory has a rigorous vetting process, only permitting journals of the highest quality. Meanwhile, if you found the data via a blurry image on social media without cited sources, then you can justifiably question its quality!

Again, these are just a few of the questions you might ask when determining the quality of a secondary dataset. Consider them as scaffolding for cultivating a critical thinking mindset; a necessary trait for any data analyst!

Presuming your secondary data holds up to scrutiny, you should be ready to carry out your detailed statistical analysis. As we explained at the beginning of this post, the analytical techniques used for secondary data analysis are no different than those for any other kind of data. Rather than go into detail here, check out the different types of data analysis in this post.

3. Secondary data analysis: Key takeaways

In this post, we’ve looked at the nuances of secondary data analysis, including how to source, collect and review secondary data. As discussed, much of the process is the same as it is for primary data analysis. The main difference lies in how secondary data are prepared.

Carrying out a meaningful secondary data analysis involves spending time and effort exploring, collecting, and reviewing the original data. This will help you determine whether the data are suitable for your needs and if they are of good quality.


Case study research: opening up research opportunities

RAUSP Management Journal

ISSN: 2531-0488

Article publication date: 30 December 2019

Issue publication date: 3 March 2020

Purpose

The case study approach has been widely used in management studies and the social sciences more generally. However, there are still doubts about when and how case studies should be used. This paper aims to discuss this approach, its various uses and applications, in light of epistemological principles, as well as the criteria for rigor and validity.

Design/methodology/approach

This paper discusses the various concepts of case and case studies in the methods literature and addresses the different uses of cases in relation to epistemological principles and criteria for rigor and validity.

Findings

The use of this research approach can be based on several epistemologies, provided the researcher attends to the internal coherence between method and epistemology, or what the authors call “alignment.”

Originality/value

This study offers a number of implications for the practice of management research, as it shows how the case study approach does not commit the researcher to particular data collection or interpretation methods. Furthermore, the use of cases can be justified according to multiple epistemological orientations.

- Epistemology

Takahashi, A.R.W. and Araujo, L. (2020), "Case study research: opening up research opportunities", RAUSP Management Journal, Vol. 55 No. 1, pp. 100-111. https://doi.org/10.1108/RAUSP-05-2019-0109

Emerald Publishing Limited

Copyright © 2019, Adriana Roseli Wünsch Takahashi and Luis Araujo.

Published in RAUSP Management Journal. Published by Emerald Publishing Limited. This article is published under the Creative Commons Attribution (CC BY 4.0) licence. Anyone may reproduce, distribute, translate and create derivative works of this article (for both commercial and non-commercial purposes), subject to full attribution to the original publication and authors. The full terms of this licence may be seen at http://creativecommons.org/licences/by/4.0/legalcode

1. Introduction

The case study as a research method or strategy brings us to question the very term “case”: after all, what is a case? A case-based approach accords the case a central role in the research process (Ragin, 1992). However, doubts still remain about the status of cases according to different epistemologies and types of research designs.

Despite these doubts, the case study is ever present in the management literature and represents the main method of management research in Brazil (Coraiola, Sander, Maccali, & Bulgacov, 2013). Between 2001 and 2010, 2,407 articles (83.14 per cent of qualitative research) were published in conferences and management journals as case studies (Takahashi & Semprebom, 2013). A search on Spell.org.br for the term “case study” under title, abstract or keywords, for the period ranging from January 2010 to July 2019, yielded 3,040 articles published in the management field. Doing research using case studies allows the researcher to immerse him/herself in the context and gain intensive knowledge of a phenomenon, which in turn demands suitable methodological principles (Freitas et al., 2017).

Our objective in this paper is to discuss notions of what constitutes a case and its various applications, considering epistemological positions as well as criteria for rigor and validity. The alignment between these dimensions is put forward as a principle advocating coherence among all phases of the research process.

This article makes two contributions. First, we suggest that there are several epistemological justifications for using case studies. Second, we show that the quality and rigor of academic research with case studies are directly related to the alignment between epistemology and research design rather than to choices of specific forms of data collection or analysis. The article is structured as follows: the following four sections discuss concepts of what is a case, its uses, epistemological grounding as well as rigor and quality criteria. The brief conclusions summarize the debate and invite the reader to delve into the literature on the case study method as a way of furthering our understanding of contemporary management phenomena.

2. What is a case study?

The debate over what constitutes a case in social science is a long-standing one. In 1988, Howard Becker and Charles Ragin organized a workshop to discuss the status of the case as a social science method. As the discussion was inconclusive, they posed the question “What is a case?” to a select group of eight social scientists in 1989, and later to participants in a symposium on the subject. Participants were unable to come up with a consensual answer. Since then, further debates and different answers have emerged. The original question led to an even broader issue: “How do we, as social scientists, produce results and seem to know what we know?” ( Ragin, 1992 , p. 16).

An important step that may help us start a reflection on what is a case is to consider the phenomena we are looking at. To do that, we must know something about what we want to understand and how we might study it. The answer may be a causal explanation, a description of what was observed or a narrative of what has been experienced. In any case, there will always be a story to be told, as the choice of the case study method demands an answer to what the case is about.

A case may be defined ex ante , prior to the start of the research process, as in Yin’s (2015) classical definition. But there is no compelling reason why cases must be defined ex ante . Ragin (1992 , p. 217) proposed the notion of “casing,” to indicate that what the case is emerges from the research process:

Rather than attempt to delineate the many different meanings of the term “case” in a formal taxonomy, in this essay I offer instead a view of cases that follows from the idea implicit in many of the contributions – that concocting cases is a varied but routine social scientific activity. […] The approach of this essay is that this activity, which I call “casing”, should be viewed in practical terms as a research tactic. It is selectively invoked at many different junctures in the research process, usually to resolve difficult issues in linking ideas and evidence.

In other words, “casing” is tied to the researcher’s practice, to the way he/she delimits or declares a case as a significant outcome of a process. In 2013, Ragin revisited the 1992 concept of “casing” and explored its multiple possibilities of use, paying particular attention to “negative cases.”

According to Ragin (1992) , a case can be centered on a phenomenon or a population. In the first scenario, cases are representative of a phenomenon, and are selected based on what can be empirically observed. The process highlights different aspects of cases and obscures others according to the research design, and allows for the complexity, specificity and context of the phenomenon to be explored. In the alternative, population-focused scenario, the selection of cases precedes the research. Both positive and negative cases are considered in exploring a phenomenon, with the definition of the set of cases dependent on theory and the central objective to build generalizations. As a passing note, it is worth mentioning here that a study of multiple cases requires a definition of the unit of analysis a priori . Otherwise, it will not be possible to make cross-case comparisons.

These two approaches entail differences that go beyond the mere opposition of quantitative and qualitative data, as a case often includes both types of data. Thus, the confusion about how to conceive cases is associated with Ragin’s (1992) notion of “small vs large N,” or McKeown’s (1999) “statistical worldview” – the notion that relevant findings are only those that can be made about a population based on the analysis of representative samples. In the same vein, Byrne (2013) argues that we cannot generate nomothetic laws that apply in all circumstances, periods and locations, and that no social science method can claim to generate invariant laws. According to the same author, case studies can help us understand that there is more than one ideographic variety and help make social science useful. Generalizations still matter, but they should be understood as part of defining the research scope, and that scope points to the limitations of knowledge produced and consumed in concrete time and space.

Thus, what defines the orientation and the use of cases is not the mere choice of type of data, whether quantitative or qualitative, but the orientation of the study. A statistical worldview sees cases as data units ( Byrne, 2013 ). Put differently, there is a clear distinction between statistical and qualitative worldviews; the use of quantitative data does not by itself mean that the research is (quasi) statistical, or uses a deductive logic:

Case-based methods are useful, and represent, among other things, a way of moving beyond a useless and destructive tradition in the social sciences that have set quantitative and qualitative modes of exploration, interpretation, and explanation against each other ( Byrne, 2013 , p. 9).

Other authors advocate different understandings of what a case study is. To some, it is a research method, to others it is a research strategy ( Creswell, 1998 ). Sharan Merrian and Robert Yin, among others, began to write about case study research as a methodology in the 1980s (Merrian, 2009), while authors such as Eisenhardt (1989) called it a research strategy. Stake (2003) sees the case study not as a method, but as a choice of what to be studied, the unit of study. Regardless of their differences, these authors agree that case studies should be restricted to a particular context as they aim to provide an in-depth knowledge of a given phenomenon: “A case study is an in-depth description and analysis of a bounded system” (Merrian, 2009, p. 40). According to Merrian, a qualitative case study can be defined by the process through which the research is carried out, by the unit of analysis or the final product, as the choice ultimately depends on what the researcher wants to know. As a product of research, it involves the analysis of a given entity, phenomenon or social unit.

Thus, whether it is an organization, an individual, a context or a phenomenon, single or multiple, one must delimit it, and also choose between possible types and configurations (Merrian, 2009; Yin, 2015 ). A case study may be descriptive, exploratory, explanatory, single or multiple ( Yin, 2015 ); intrinsic, instrumental or collective ( Stake, 2003 ); and confirm or build theory ( Eisenhardt, 1989 ).

both went through the same process of implementing computer labs intended for the use of information and communication technologies in 2007;

both took part in the same regional program (Paraná Digital); and

they shared similar characteristics regarding location (operation in the same neighborhood of a city), number of students, number of teachers and technicians and laboratory sizes.

However, the two institutions differed in the number of hours of program use, with one of them displaying a significant number of hours/use while the other showed a modest number, according to secondary data for the period 2007-2013. Despite the context being similar and the procedures for implementing the technology being the same, the mechanisms of social integration – an idiosyncratic factor of each institution – were different in each case. This explained differences in their use of resources, processes of organizational learning and capacity to absorb new knowledge.

On the other hand, multiple case studies seek evidence in different contexts and do not necessarily require direct comparisons ( Stake, 2003 ). Rather, there is a search for patterns of convergence and divergence that permeate all the cases, as the same issues are explored in every case. Cases can be added progressively until theoretical saturation is achieved. An example is a study that investigated how entrepreneurial opportunity and management skills were developed through entrepreneurial learning ( Zampier & Takahashi, 2014 ). The authors conducted nine case studies, based on primary and secondary data, with each one analyzed separately, so a search for patterns could be undertaken. The convergence aspects found were: the predominant way of transforming experience into knowledge was exploitation; managerial skills were developed by taking advantage of opportunities; and career orientation encompassed more than one style. As for divergence patterns: the experience of success and failure influenced entrepreneurs differently; the prevailing rationality logic of influence was different; and the combination of styles in career orientation was diverse.

A full discussion of the choice of case study design is outside the scope of this article. For the sake of illustration, we briefly mention other selection criteria such as the purpose of the research, the state of the art of the research theme, the time and resources involved and the preferred epistemological position of the researcher. In the next section, we look at the possibilities of carrying out case studies in line with various epistemological traditions, as the answers to the “what is a case?” question reveal varied methodological commitments as well as diverse epistemological and ontological positions ( Ragin, 2013 ).

3. Epistemological positioning of case study research

Ontology and epistemology are like skin, not a garment to be occasionally worn ( Marsh & Furlong, 2002 ). According to these authors, ontology and epistemology guide the choice of theory and method because they cannot or should not be worn as a garment. Hence, one must practice philosophical “self-knowledge” to recognize one’s vision of what the world is and of how knowledge of that world is accessed and validated. Ontological and epistemological positions are relevant in that they involve the positioning of the researcher in social science and the phenomena he or she chooses to study. These positions do not tend to vary from one project to another although they can certainly change over time for a single researcher.

Ontology is the starting point from which the epistemological and methodological positions of the research arise ( Grix, 2002 ). Ontology expresses a view of the world, what constitutes reality, nature and the image one has of social reality; it is a theory of being ( Marsh & Furlong, 2002 ). The central question is the nature of the world out there regardless of our ability to access it. An essentialist or foundationalist ontology acknowledges that there are differences that persist over time and these differences are what underpin the construction of social life. An opposing, anti-foundationalist position presumes that the differences found are socially constructed and may vary – i.e. they are not essential but specific to a given culture at a given time ( Marsh & Furlong, 2002 ).

Epistemology is centered around a theory of knowledge, focusing on the process of acquiring and validating knowledge ( Grix, 2002 ). Positivists look at social phenomena as a world of causal relations where there is a single truth to be accessed and confirmed. In this tradition, case studies test hypotheses and rely on deductive approaches and quantitative data collection and analysis techniques. Scholars in the field of anthropology and observation-based qualitative studies proposed alternative epistemologies based on notions of the social world as a set of manifold and ever-changing processes. In management studies since the 1970s, the gradual acceptance of qualitative research has generated a diverse range of research methods and conceptions of the individual and society ( Godoy, 1995 ).

The interpretative tradition, in direct opposition to positivism, argues that there is no single objective truth to be discovered about the social world. The social world and our knowledge of it are the product of social constructions. Thus, the social world is constituted by interactions, and our knowledge is hermeneutic as the world does not exist independent of our knowledge ( Marsh & Furlong, 2002 ). The implication is that it is not possible to access social phenomena through objective, detached methods. Instead, the interaction mechanisms and relationships that make up social constructions have to be studied. Deductive approaches, hypothesis testing and quantitative methods are not relevant here. Hermeneutics, on the other hand, is highly relevant as it allows the analysis of the individual’s interpretation, of sayings, texts and actions, even though interpretation is always the “truth” of a subject. Methods such as ethnographic case studies, interviews and observations as data collection techniques should feed research designs according to interpretivism. It is worth pointing out that we are, to a large extent, caricaturing polar opposites rather than characterizing a range of epistemological alternatives, such as realism, conventionalism and symbolic interactionism.

If diverse ontologies and epistemologies serve as a guide to research approaches, including data collection and analysis methods, and if they should be regarded as skin rather than clothing, how does one make choices regarding case studies? What are case studies, what type of knowledge do they provide, and so on? The views of case study authors are not always explicit on this point, so we must delve into their texts to glean what their positions might be.

Two of the most cited authors in case study research are Robert Yin and Kathleen Eisenhardt. Eisenhardt (1989) argues that a case study can serve to provide a description, test or generate a theory, the latter being the most relevant in contributing to the advancement of knowledge in a given area. She uses terms such as populations and samples, control variables, hypotheses and generalization of findings and even suggests an ideal number of case studies to allow for theory construction through replication. Although Eisenhardt includes observation and interview among her recommended data collection techniques, the approach is firmly anchored in a positivist epistemology:

Third, particularly in comparison with Strauss (1987) and Van Maanen (1988), the process described here adopts a positivist view of research. That is, the process is directed toward the development of testable hypotheses and theory which are generalizable across settings. In contrast, authors like Strauss and Van Maanen are more concerned that a rich, complex description of the specific cases under study evolve and they appear less concerned with development of generalizable theory ( Eisenhardt, 1989 , p. 546).

This position attracted a fair amount of criticism. Dyer & Wilkins (1991) , in a critique of Eisenhardt’s (1989) article, focused on the following aspects: there is no relevant justification for the number of cases recommended; it is the depth and not the number of cases that provides an actual contribution to theory; and the researcher’s purpose should be to get closer to the setting and interpret it. According to the same authors, discrepancies from prior expectations are also important as they lead researchers to reflect on existing theories. Eisenhardt & Graebner (2007 , p. 25) revisit the argument for the construction of a theory from multiple cases:

A major reason for the popularity and relevance of theory building from case studies is that it is one of the best (if not the best) of the bridges from rich qualitative evidence to mainstream deductive research.

Although they recognize the importance of single-case research to explore phenomena under unique or rare circumstances, they reaffirm the strength of multiple case designs as it is through them that better accuracy and generalization can be reached.

Likewise, Robert Yin emphasizes the importance of variables, triangulation in the search for “truth” and generalizable theoretical propositions. Yin (2015 , p. 18) suggests that the case study method may be appropriate for different epistemological orientations, although much of his work seems to invoke a realist epistemology. Authors such as Merrian (2009) and Stake (2003) suggest an interpretative version of case studies. Stake (2003) looks at cases as a qualitative option, where the most relevant criterion of case selection should be the opportunity to learn and understand a phenomenon. A case is not just a research method or strategy; it is a researcher’s choice about what will be studied:

Even if my definition of case study was agreed upon, and it is not, the term case and study defy full specification (Kemmis, 1980). A case study is both a process of inquiry about the case and the product of that inquiry ( Stake, 2003 , p. 136).

Later, Stake (2003 , p. 156) argues that:

[…] the purpose of a case report is not to represent the world, but to represent the case. […] The utility of case research to practitioners and policy makers is in its extension of experience.

Still according to Stake (2003 , pp. 140-141), to do justice to complex views of social phenomena, it is necessary to analyze the context and relate it to the case, to look for what is peculiar rather than common in cases to delimit their boundaries, to plan the data collection looking for what is common and unusual about facts, what could be valuable whether it is unique or common:

Reflecting upon the pertinent literature, I find case study methodology written largely by people who presume that the research should contribute to scientific generalization. The bulk of case study work, however, is done by individuals who have intrinsic interest in the case and little interest in the advance of science. Their designs aim the inquiry toward understanding of what is important about that case within its own world, which is seldom the same as the worlds of researchers and theorists. Those designs develop what is perceived to be the case’s own issues, contexts, and interpretations, its thick descriptions. In contrast, the methods of instrumental case study draw the researcher toward illustrating how the concerns of researchers and theorists are manifest in the case. Because the critical issues are more likely to be known in advance and following disciplinary expectations, such a design can take greater advantage of already developed instruments and preconceived coding schemes.

The aforementioned authors were listed to illustrate different and sometimes opposing positions on case research. These differences are not restricted to a choice between positivism and interpretivism. It is worth noting that Ragin’s (2013 , p. 523) approach to “casing” is compatible with the realist research perspective:

In essence, to posit cases is to engage in ontological speculation regarding what is obdurately real but only partially and indirectly accessible through social science. Bringing a realist perspective to the case question deepens and enriches the dialogue, clarifying some key issues while sweeping others aside.

cases are actual entities, reflecting the operations of real causal mechanisms and process patterns;

case studies are interactive processes and are open to revisions and refinements; and

social phenomena are complex, contingent and context-specific.

Ragin (2013 , p. 532) concludes:

Lurking behind my discussion of negative case, populations, and possibility analysis is the implication that treating cases as members of given (and fixed) populations and seeking to infer the properties of populations may be a largely illusory exercise. While demographers have made good use of the concept of population, and continue to do so, it is not clear how much the utility of the concept extends beyond their domain. In case-oriented work, the notion of fixed populations of cases (observations) has much less analytic utility than simply “the set of relevant cases,” a grouping that must be specified or constructed by the researcher. The demarcation of this set, as the work of case-oriented researchers illustrates, is always tentative, fluid, and open to debate. It is only by casing social phenomena that social scientists perceive the homogeneity that allows analysis to proceed.

In summary, case studies are relevant and potentially compatible with a range of different epistemologies. Researchers’ ontological and epistemological positions will guide their choice of theory, methodologies and research techniques, as well as their research practices. The same applies to the choice of authors describing the research method and this choice should be coherent. We call this research alignment , an attribute that must be judged on the internal coherence of the author of a study, and not necessarily its evaluator. The following figure illustrates the interrelationship between the elements of a study necessary for an alignment ( Figure 1 ).

In addition to this broader aspect of the research as a whole, other factors should be part of the researcher’s concern, such as the rigor and quality of case studies. We will look into these in the next section taking into account their relevance to the different epistemologies.

4. Rigor and quality in case studies

Traditionally, at least in positivist studies, validity and reliability are the relevant quality criteria to judge research. Validity can be understood as external, internal and construct. External validity means identifying whether the findings of a study are generalizable to other studies using the logic of replication in multiple case studies. Internal validity may be established through the theoretical underpinning of existing relationships and it involves the use of protocols for the development and execution of case studies. Construct validity implies defining the operational measurement criteria to establish a chain of evidence, such as the use of multiple sources of evidence ( Eisenhardt, 1989 ; Yin, 2015 ). Reliability implies conducting other case studies, instead of just replicating results, to minimize the errors and bias of a study through case study protocols and the development of a case database ( Yin, 2015 ).

Several criticisms have been directed toward case studies, such as lack of rigor, lack of generalization potential, external validity and researcher bias. Case studies are often deemed to be unreliable because of a lack of rigor ( Seuring, 2008 ). Flyvbjerg (2006 , p. 219) addresses five misunderstandings about case-study research, and concludes that:

[…] a scientific discipline without a large number of thoroughly executed case studies is a discipline without systematic production of exemplars, and a discipline without exemplars is an ineffective one.

theoretical knowledge is more valuable than concrete, practical knowledge;

the case study cannot contribute to scientific development because it is not possible to generalize on the basis of an individual case;

the case study is more useful for generating rather than testing hypotheses;

the case study contains a tendency to confirm the researcher’s preconceived notions; and

it is difficult to summarize and develop general propositions and theories based on case studies.

These criticisms question the validity of the case study as a scientific method and should be corrected.

The critique of case studies is often framed from the standpoint of what Ragin (2000) labeled large-N research. The logic of small-N research, to which case studies belong, is different. Cases benefit from depth rather than breadth as they provide theoretical and empirical knowledge, contribute to theory through propositions, and serve not only to confirm knowledge but also to challenge and overturn preconceived notions. Moreover, the difficulty in summarizing their conclusions stems from the complexity of the phenomena studied and is not an intrinsic limitation of the method.

Thus, case studies do not seek large-scale generalizations as that is not their purpose. And yet, this is a limitation from a positivist perspective as there is an external reality to be “apprehended” and valid conclusions to be extracted for an entire population. If positivism is the epistemology of choice, the rigor of a case study can be demonstrated by detailing the criteria used for internal and external validity, construct validity and reliability ( Gibbert & Ruigrok, 2010 ; Gibbert, Ruigrok, & Wicki, 2008 ). An example can be seen in case studies in the area of information systems, where there is a predominant orientation of positivist approaches to this method ( Pozzebon & Freitas, 1998 ). In this area, rigor also involves the definition of a unit of analysis, type of research, number of cases, selection of sites, definition of data collection and analysis procedures, definition of the research protocol and writing a final report. Creswell (1998) presents a checklist for researchers to assess whether the study was well written, if it has reliability and validity and if it followed methodological protocols.

In case studies with a non-positivist orientation, rigor can be achieved through careful alignment (coherence among ontology, epistemology, theory and method). Moreover, the concepts of validity can be understood as concern and care in formulating research, research development and research results ( Ollaik & Ziller, 2012 ), and to achieve internal coherence ( Gibbert et al. , 2008 ). The consistency between data collection and interpretation, and the observed reality also help these studies meet coherence and rigor criteria. Siggelkow (2007) argues that a case study should be persuasive and that even a single case study may be a powerful example to contest a widely held view. To him, the value of a single case study or studies with few cases can be attained by their potential to provide conceptual insights and coherence to the internal logic of conceptual arguments: “[…] a paper should allow a reader to see the world, and not just the literature, in a new way” ( Siggelkow, 2007 , p. 23).

Interpretative studies should not be justified by criteria derived from positivism as they are based on a different ontology and epistemology ( Sandberg, 2005 ). The adoption of an interpretive epistemology leads to the rejection of an objective reality: “As Bengtsson points out, the life-world is the subjects’ experience of reality, at the same time as it is objective in the sense that it is an intersubjective world” ( Sandberg, 2005 , p. 47). In this event, how can one demonstrate what positivists call validity and reliability? What would be the criteria to justify knowledge as truth, produced by research in this epistemology? Sandberg (2005 , p. 62) suggests an answer based on phenomenology:

This was demonstrated first by explicating life-world and intentionality as the basic assumptions underlying the interpretative research tradition. Second, based on those assumptions, truth as intentional fulfillment, consisting of perceived fulfillment, fulfillment in practice, and indeterminate fulfillment, was proposed. Third, based on the proposed truth constellation, communicative, pragmatic, and transgressive validity and reliability as interpretative awareness were presented as the most appropriate criteria for justifying knowledge produced within interpretative approach. Finally, the phenomenological epoché was suggested as a strategy for achieving these criteria.

From this standpoint, the research site must be chosen according to its uniqueness so that one can obtain relevant insights that no other site could provide ( Siggelkow, 2007 ). Furthermore, the view of what is being studied is at the center of the researcher’s attention to understand its “truth,” inserted in a given context.

The case researcher is someone who can reduce the probability of misinterpretations by analyzing multiple perceptions and searching for data triangulation to check the reliability of interpretations ( Stake, 2003 ). It is worth pointing out that this is not an option for studies that specifically seek the individual’s experience in relation to organizational phenomena.

In short, there are different ways of seeking rigor and quality in case studies, depending on the researcher’s worldview. These different forms pervade everything from the research design, the choice of research questions, the theory or theories to look at a phenomenon, research methods, the data collection and analysis techniques, to the type and style of research report produced. Validity can also take on different forms. While positivism is concerned with validity of the research question and results, interpretivism emphasizes research processes without neglecting the importance of the articulation of pertinent research questions and the sound interpretation of results ( Ollaik & Ziller, 2012 ). The means to achieve this can be diverse, such as triangulation (of multiple theories, multiple methods, multiple data sources or multiple investigators), pre-tests of data collection instruments, a pilot case, a study protocol, detailed description of procedures such as a field diary in observations, researcher positioning (reflexivity), theoretical-empirical consistency, thick description and transferability.

5. Conclusions

The central objective of this article was to discuss concepts of case study research, their potential and various uses, taking into account different epistemologies as well as criteria of rigor and validity. Although the literature on methodology in general, and on case studies in particular, is voluminous, it is not easy to relate this approach to epistemology. In addition, method manuals often focus on the details of various case study approaches, which confuses matters further.

Faced with this scenario, we have tried to address some central points in this debate and present various ways of using case studies according to the preferred epistemology of the researcher. We emphasize that this understanding depends on how a case is defined and the particular epistemological orientation that underpins that conceptualization. We have argued that whatever the epistemological orientation is, it is possible to meet appropriate criteria of research rigor and quality provided there is an alignment among the different elements of the research process. Furthermore, multiple data collection techniques can be used in single or multiple case study designs. Data collection techniques or the type of data collected do not define the method or whether cases should be used for theory-building or theory-testing.

Finally, we encourage researchers to consider case study research as one way to foster immersion in phenomena and their contexts, stressing that the approach does not imply a commitment to a particular epistemology or type of research, such as qualitative or quantitative. Case study research allows for numerous possibilities, and should be celebrated for that diversity rather than pigeon-holed as a monolithic research method.

Figure 1. The interrelationship between the building blocks of research

Byrne , D. ( 2013 ). Case-based methods: Why We need them; what they are; how to do them . Byrne D. In D Byrne. and C.C Ragin (Eds.), The SAGE handbooks of Case-Based methods , pp. 1 – 10 . London : SAGE Publications Inc .

Creswell , J. W. ( 1998 ). Qualitative inquiry and research design: choosing among five traditions , London : Sage Publications .

Coraiola , D. M. , Sander , J. A. , Maccali , N. & Bulgacov , S. ( 2013 ). Estudo de caso . In A. R. W. Takahashi , (Ed.), Pesquisa qualitativa em administração: Fundamentos, métodos e usos no Brasil , pp. 307 – 341 . São Paulo : Atlas .

Dyer , W. G. , & Wilkins , A. L. ( 1991 ). Better stories, not better constructs, to generate better theory: a rejoinder to Eisenhardt . The Academy of Management Review , 16 , 613 – 627 .

Eisenhardt , K. ( 1989 ). Building theory from case study research . Academy of Management Review , 14 , 532 – 550 .

Eisenhardt , K. M. , & Graebner , M. E. ( 2007 ). Theory building from cases: Opportunities and challenges . Academy of Management Journal , 50 , 25 – 32 .

Flyvbjerg , B. ( 2006 ). Five misunderstandings about case-study research . Qualitative Inquiry , 12 , 219 – 245 .

Freitas , J. S. , Ferreira , J. C. A. , Campos , A. A. R. , Melo , J. C. F. , Cheng , L. C. , & Gonçalves , C. A. ( 2017 ). Methodological roadmapping: a study of centering resonance analysis . RAUSP Management Journal , 53 , 459 – 475 .

Gibbert , M. , Ruigrok , W. , & Wicki , B. ( 2008 ). What passes as a rigorous case study? . Strategic Management Journal , 29 , 1465 – 1474 .

Gibbert , M. , & Ruigrok , W. ( 2010 ). The “what” and “how” of case study rigor: Three strategies based on published work . Organizational Research Methods , 13 , 710 – 737 .

Godoy , A. S. ( 1995 ). Introdução à pesquisa qualitativa e suas possibilidades . Revista de Administração de Empresas , 35 , 57 – 63 .

Grix , J. ( 2002 ). Introducing students to the generic terminology of social research . Politics , 22 , 175 – 186 .

Marsh , D. , & Furlong , P. ( 2002 ). A skin, not a sweater: ontology and epistemology in political science . In D Marsh. , & G Stoker , (Eds.), Theory and Methods in Political Science , New York, NY : Palgrave McMillan , pp. 17 – 41 .

McKeown , T. J. ( 1999 ). Case studies and the statistical worldview: Review of King, Keohane, and Verba’s designing social inquiry: Scientific inference in qualitative research . International Organization , 53 , 161 – 190 .

Merriam , S. B. ( 2009 ). Qualitative research: a guide to design and implementation .

Ollaik , L. G. , & Ziller , H. ( 2012 ). Distintas concepções de validade em pesquisas qualitativas . Educação e Pesquisa , 38 , 229 – 241 .

Picoli , F. R. , & Takahashi , A. R. W. ( 2016 ). Capacidade de absorção, aprendizagem organizacional e mecanismos de integração social . Revista de Administração Contemporânea , 20 , 1 – 20 .

Pozzebon , M. , & Freitas , H. M. R. ( 1998 ). Pela aplicabilidade: com um maior rigor científico – dos estudos de caso em sistemas de informação . Revista de Administração Contemporânea , 2 , 143 – 170 .

Sandberg , J. ( 2005 ). How do we justify knowledge produced within interpretive approaches? . Organizational Research Methods , 8 , 41 – 68 .

Seuring , S. A. ( 2008 ). Assessing the rigor of case study research in supply chain management. Supply chain management . Supply Chain Management: An International Journal , 13 , 128 – 137 .

Siggelkow , N. ( 2007 ). Persuasion with case studies . Academy of Management Journal , 50 , 20 – 24 .

Stake , R. E. ( 2003 ). Case studies . In N. K. , Denzin , & Y. S. , Lincoln (Eds.). Strategies of Qualitative Inquiry , London : Sage Publications . pp. 134 – 164 .

Takahashi , A. R. W. , & Semprebom , E. ( 2013 ). Resultados gerais e desafios . In A. R. W. , Takahashi (Ed.), Pesquisa qualitativa em administração: Fundamentos, métodos e usos no brasil , pp. 343 – 354 . São Paulo : Atlas .

Ragin , C. C. ( 1992 ). Introduction: Cases of “what is a case? . In H. S. , Becker , & C. C. Ragin and (Eds). What is a case? Exploring the foundations of social inquiry , pp. 1 – 18 .

Ragin , C. C. ( 2013 ). Reflections on casing and Case-Oriented research . In D , Byrne. , & C. C. Ragin (Eds.), The SAGE handbooks of Case-Based methods , London : SAGE Publications , pp. 522 – 534 .

Yin , R. K. ( 2015 ). Estudo de caso: planejamento e métodos , Porto Alegre : Bookman .

Zampier , M. A. , & Takahashi , A. R. W. ( 2014 ). Aprendizagem e competências empreendedoras: Estudo de casos de micro e pequenas empresas do setor educacional . RGO Revista Gestão Organizacional , 6 , 1 – 18 .

Acknowledgements

Author contributions: Both authors contributed equally.


Qualitative Case Study Methodology: Study Design and Implementation for Novice Researchers

- January 2010

- The Qualitative Report 13(4)

- McMaster University


Secondary research: definition, methods, & examples.

This ultimate guide to secondary research helps you understand changes in market trends, customers' buying patterns, and your competition using existing data sources.

In situations where you’re not involved in the data gathering process (primary research), you have to rely on existing information and data to arrive at specific research conclusions or outcomes. This approach is known as secondary research.

In this article, we’re going to explain what secondary research is, how it works, and share some examples of it in practice.


What is secondary research?

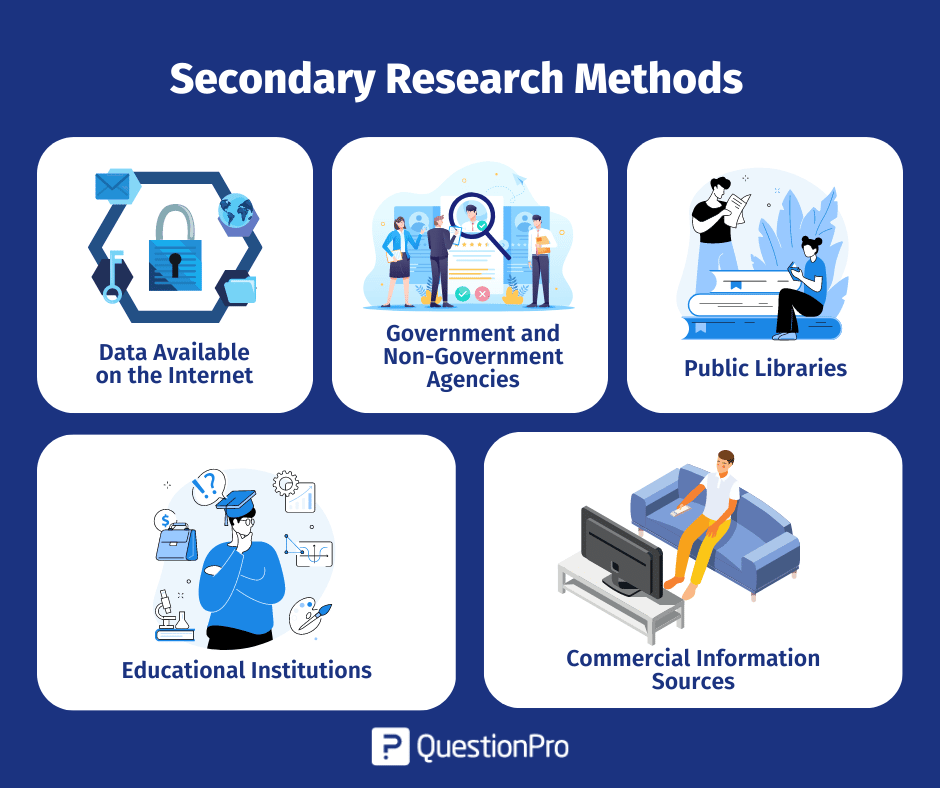

Secondary research, also known as desk research, is a research method that involves compiling existing data sourced from a variety of channels. This includes internal sources (e.g. in-house research) or, more commonly, external sources (such as government statistics, organizational bodies, and the internet).

Secondary research comes in several formats, such as published datasets, reports, and survey responses, and can also be sourced from websites, libraries, and museums.

The information is usually free, or available at a limited access cost, and was originally gathered using surveys, telephone interviews, observation, face-to-face interviews, and more.

When using secondary research, researchers collect, verify, analyze, and incorporate existing data to help them confirm their research goals for the research period.

As well as the above, it can be used to review previous research into an area of interest. Researchers can look for patterns across data spanning several years and identify trends — or use it to verify early hypothesis statements and establish whether it’s worth continuing research into a prospective area.

How to conduct secondary research

There are five key steps to conducting secondary research effectively and efficiently:

1. Identify and define the research topic

First, understand what you will be researching and define the topic by thinking about the research questions you want to be answered.

Ask yourself: What is the point of conducting this research? Then, ask: What do we want to achieve?

The answers may point to an exploratory aim (finding out why something happened) or to confirming a hypothesis. They may also indicate ideas that need primary or secondary research (or a combination) to investigate them.

2. Find research and existing data sources

If secondary research is needed, think about where you might find the information. This helps you narrow down your secondary sources to those that help you answer your questions. What keywords do you need to use?

Which organizations are closely working on this topic already? Are there any competitors that you need to be aware of?

Create a list of the data sources, information, and people that could help you with your work.

3. Begin searching and collecting the existing data

Now that you have the list of data sources, start accessing the data and collect the information into an organized system. This may mean you start setting up research journal accounts or making telephone calls to book meetings with third-party research teams to verify the details around data results.

As you search and access information, remember to check the data’s date, the credibility of the source, the relevance of the material to your research topic, and the methodology used by the third-party researchers. Start small and as you gain results, investigate further in the areas that help your research’s aims.
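The four checks above (date, credibility, relevance, and methodology) can be recorded in a simple source log. The following is a minimal sketch, not a prescribed tool: the `Source` class, the `passes_screening` function, the five-year age cut-off, and the sample entries are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Source:
    """One entry in a hypothetical secondary-research source log."""
    title: str
    published: date
    publisher_is_credible: bool   # judgment call recorded by the researcher
    relevant_to_topic: bool       # does it address the research question?
    methodology_documented: bool  # did the original researchers explain their method?


def passes_screening(source: Source, today: date, max_age_years: int = 5) -> bool:
    """Return True only when a source meets all four checks."""
    age_years = (today - source.published).days / 365.25
    return (
        age_years <= max_age_years
        and source.publisher_is_credible
        and source.relevant_to_topic
        and source.methodology_documented
    )


# Illustrative entries only; these are not real sources.
sources = [
    Source("Government retail statistics", date(2023, 3, 1), True, True, True),
    Source("Anonymous blog post", date(2024, 1, 15), False, True, False),
    Source("Industry report", date(2012, 6, 30), True, True, True),
]

today = date(2024, 6, 1)
shortlist = [s.title for s in sources if passes_screening(s, today)]
print(shortlist)
```

In this sketch only the first entry survives: the blog post fails the credibility and methodology checks, and the industry report is older than the assumed cut-off. The appropriate age limit depends on the research topic.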

4. Combine the data and compare the results

When you have your data in one place, you need to understand, filter, order, and combine it intelligently. Data may come in different formats: some of it may be unusable as it stands, while other information may need to be deleted.

After this, you can start to look at different data sets to see what they tell you. You may find that you need to compare the same datasets over different periods for changes over time or compare different datasets to notice overlaps or trends. Ask yourself: What does this data mean to my research? Does it help or hinder my research?
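As a rough illustration of these two comparisons, the sketch below looks at one dataset over time and at two datasets where they overlap. The figures, the names `source_a` and `source_b`, and the helper functions are hypothetical; real data would usually need cleaning and unit checks first.

```python
# Hypothetical yearly figures from two secondary sources (illustrative values only).
source_a = {2020: 120, 2021: 135, 2022: 150}
source_b = {2021: 140, 2022: 149, 2023: 160}


def overlapping_years(a, b):
    """Years covered by both datasets, in ascending order."""
    return sorted(a.keys() & b.keys())


def percent_change(series):
    """Percentage change between each pair of consecutive recorded years."""
    years = sorted(series)
    return {
        later: (series[later] - series[earlier]) / series[earlier] * 100
        for earlier, later in zip(years, years[1:])
    }


def differences(a, b):
    """Gap between the two sources (b minus a) for each shared year."""
    return {year: b[year] - a[year] for year in overlapping_years(a, b)}


shared = overlapping_years(source_a, source_b)  # where the datasets overlap
trend_a = percent_change(source_a)              # change over time in one dataset
gaps = differences(source_a, source_b)          # disagreement between the sources

print(shared)
print(trend_a)
print(gaps)
```

Large gaps between sources in the shared years are a prompt to revisit each source's methodology before drawing conclusions from either one.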

5. Analyze your data and explore further

In this last stage of the process, look at the information you have and ask yourself if this answers your original questions for your research. Are there any gaps? Do you understand the information you’ve found? If you feel there is more to cover, repeat the steps and delve deeper into the topic so that you can get all the information you need.

If secondary research can’t provide these answers, consider supplementing your results with data gained from primary research. As you explore further, add to your knowledge and update your findings. This will help you present clear, credible information.

Primary vs secondary research

Unlike secondary research, primary research involves creating data first-hand by directly working with interviewees, target users, or a target market. Primary research focuses on the method for carrying out research, asking questions, and collecting data using approaches such as:

- Interviews (panel, face-to-face or over the phone)

- Questionnaires or surveys

- Focus groups

Using these methods, researchers can get in-depth, targeted responses to questions, making results more accurate and specific to their research goals. However, it does take time to do and administer.

Unlike primary research, secondary research uses existing data, which also includes published results from primary research. Researchers summarize the existing research and use the results to support their research goals.

Both primary and secondary research have their places. Primary research can support the findings found through secondary research (and fill knowledge gaps), while secondary research can be a starting point for further primary research. Because of this, these research methods are often combined for optimal research results that are accurate at both the micro and macro level.

| Primary research | Secondary research |
|---|---|
| First-hand research to collect data. May require a lot of time | Collects existing, published data. May require little time |
| Creates raw data that the researcher owns | The researcher has no control over data method or ownership |
| Relevant to the goals of the research | May not be relevant to the goals of the research |