Free AI Detector

Identify AI-generated content, including ChatGPT and Copilot, with Scribbr's free AI detector

Why use Scribbr’s AI Detector

Authority on AI and plagiarism

Our plagiarism and AI detector tools and helpful content are used by millions of users every month.

Advanced algorithms

Our AI checker is built using advanced algorithms for detecting AI-generated content. It’s also been enhanced to distinguish between human-written, AI-generated, and AI-refined writing.

Unlimited free AI checks

Perform an unlimited number of AI checks for free, with a limit of up to 1,200 words per submission, ensuring all of your work is authentic.

No sign-up required

Start detecting AI-generated content instantly, without having to create an account.

Confidentiality guaranteed

Rest easy knowing your submissions remain private; we do not store or share your data.

Paraphrasing detection

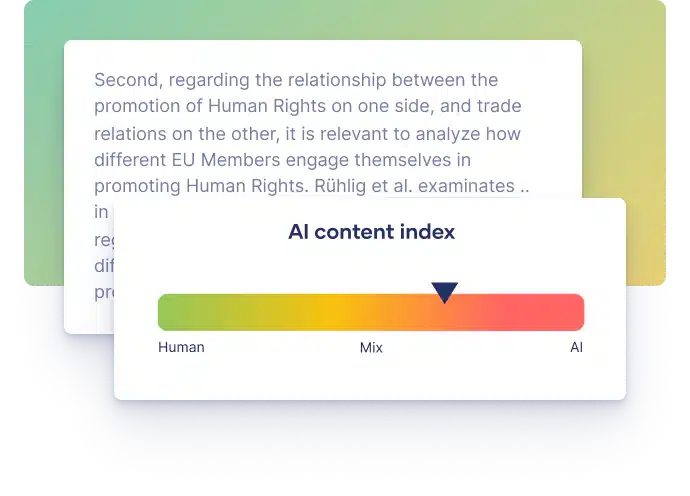

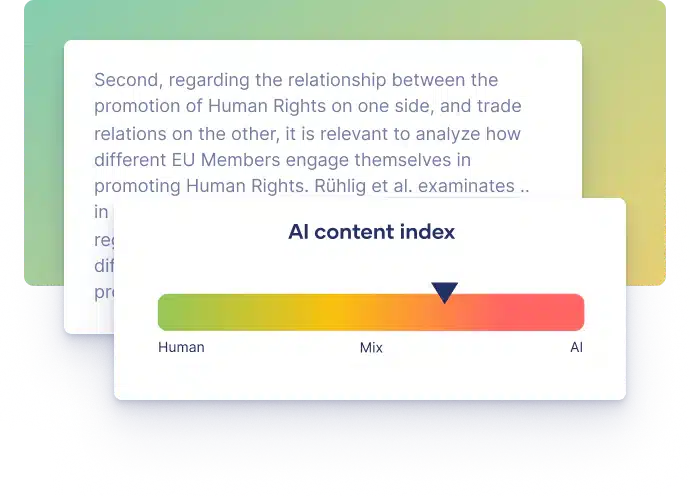

Scribbr’s AI Detector provides insight into whether a piece of writing is fully AI-generated, AI-refined, or completely human-written.

Multilingual support

Our AI Detector supports multiple languages, including German, French, and Spanish. We check and analyze your content at a high level of accuracy across many different languages.

Paragraph-level feedback

Our AI Content Detector identifies specific areas in your text that are likely AI-generated or AI-refined to provide a detailed analysis of the content.

AI Detector for ChatGPT, Copilot, Gemini, and more

Scribbr’s AI Content Detector accurately detects texts generated by the most popular tools, like ChatGPT, Gemini, and Copilot. It also offers advanced features, such as differentiation between human-written, AI-generated, and AI-refined content and paragraph-level feedback for more detailed analysis of your writing.

Our advanced AI checker tool can detect the latest models, like GPT4, with high accuracy. Note that no AI Detector can provide complete accuracy (see our research). As language models continue to develop, detection tools will always have to race to keep up with them.

Our AI Content Detector is perfect for...

Confidently submit your papers

Scribbr’s AI Detector helps ensure that your essays and papers adhere to your university guidelines.

- Verify the authenticity of your sources, ensuring that you only present trustworthy information.

- Identify any AI-generated content, like that created by ChatGPT, that might need proper attribution.

- Analyze content in English, German, French, and Spanish.

Check the authenticity of your students’ work

More and more students are using AI tools like ChatGPT in their writing process. Our AI checker helps educators detect AI-generated, AI-refined, and human-written content in text.

- Analyze the content submitted by your students to ensure that their work is actually written by them.

- Promote a culture of honesty and originality among your students.

- Receive feedback at the paragraph level for more detailed analysis.

Prevent search algorithm penalties

Our AI content detector helps you publish high-quality, original content, which makes it more likely that search engines will index it.

- Analyze the authenticity of articles written by external contributors or agencies before publishing them.

- Deliver unique content that engages your audience and drives traffic to your website.

- Differentiate between human-written, AI-generated, and AI-refined content for detailed analysis.

AI Detectors vs. Plagiarism Checkers

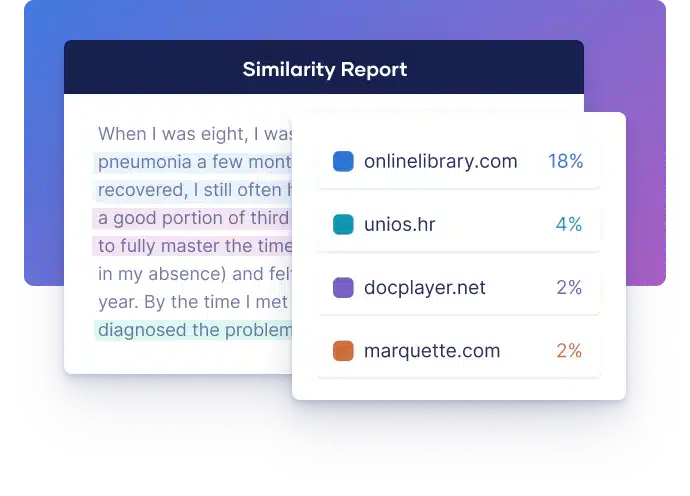

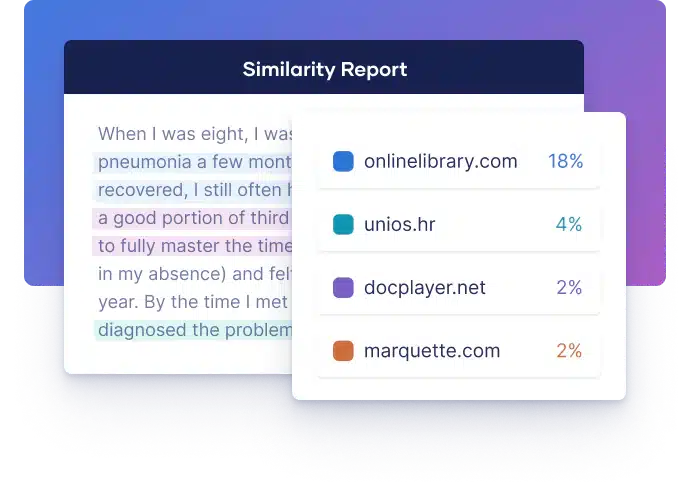

AI detectors and plagiarism checkers are both used to verify the originality and authenticity of a text, but they differ in terms of how they work and what they’re looking for.

AI Detector or ChatGPT Detector

AI detectors try to find text that looks like it was generated by an AI writing tool, like ChatGPT. They do this by measuring specific characteristics of the text like sentence structure and length, word choice, and predictability — not by comparing it to a database of content.

Plagiarism Checker

Plagiarism checkers try to find text that is copied from a different source. They do this by comparing the text to a large database of web pages, news articles, journals, and so on, and detecting similarities — not by measuring specific characteristics of the text.
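As a rough illustration of the database-comparison idea, the sketch below measures how many five-word sequences ("shingles") a submission shares with each known source. The function names, the shingle size, and the tiny in-memory `sources` dictionary are assumptions made for this example; it is not a description of how Scribbr's Plagiarism Checker is implemented.

```python
import re


def shingles(text, n=5):
    """Return the set of lowercase word n-grams in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_scores(submission, sources, n=5):
    """Fraction of the submission's shingles that also appear in each source."""
    sub = shingles(submission, n)
    if not sub:
        return {name: 0.0 for name in sources}
    return {name: len(sub & shingles(text, n)) / len(sub)
            for name, text in sources.items()}


# Hypothetical two-document "database" for illustration only.
sources = {
    "article_a": "The quick brown fox jumps over the lazy dog near the river bank.",
    "article_b": "Large language models predict the next word in a sentence.",
}
submission = "The quick brown fox jumps over the lazy dog every single morning."
scores = overlap_scores(submission, sources)
print(scores)
```

A high score for one source suggests copied passages. Note that nothing in this comparison looks at style or predictability, which is why this kind of check says little about whether a text was AI-generated.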

Scribbr & academic integrity

Scribbr is committed to protecting academic integrity. Our tools, like the AI Detector, Plagiarism Checker, and Citation Generator, are designed to help students produce quality academic papers and prevent academic misconduct.

We make every effort to prevent our software from being used for fraudulent or manipulative purposes.

Your questions, answered

Scribbr’s AI Detector can confidently detect most English texts generated by popular tools like ChatGPT, Gemini, and Copilot.

Our free AI Detector can detect texts written using GPT2, GPT3, and GPT3.5 with average accuracy, while our Premium AI Detector has high accuracy and the ability to detect GPT4.

Our AI Detector is carefully trained to detect most texts generated by popular tools like ChatGPT and Bard. These texts often contain certain phrases, patterns, or awkward wording that indicate they were not created by a human. However, no AI model on the market can guarantee 100% accuracy, including ours. To get the best results, we recommend scanning longer pieces of text rather than individual sentences or paragraphs.

Our research into the best AI detectors indicates that no tool can provide complete accuracy; the highest accuracy we found was 84% in a premium tool or 68% in the best free tool.

The AI score is a percentage between 0% and 100%, indicating how much of the text contains content likely written or refined using AI tools.

No. Scribbr’s AI Detector will only give you a percentage between 0% and 100% that indicates the likelihood that your text contains AI-generated, AI-refined, or human-written content.

No. Our AI content checker can only identify AI-generated, AI-refined, and human-written content. Our Plagiarism Checker can help prevent unintentional plagiarism in your writing.

Yes. Our AI Detector can currently analyze text in English, Spanish, German, and French.

Detect ChatGPT3.5, GPT4 and Gemini in seconds

Get in touch with questions.

We answer your questions quickly and personally from 9:00 to 23:00 CET

- Start live chat

- Email [email protected]

- Call +1 (510) 822-8066

- WhatsApp +31 20 261 6040

Learn how to use AI tools responsibly

- How to cite ChatGPT

- How to write a paper with ChatGPT

- How do AI detectors work?

- University policies on AI writing tools

MIT Technology Review


How to spot AI-generated text

The internet is increasingly awash with text written by AI software. We need new tools to detect it.

By Melissa Heikkilä

This sentence was written by an AI—or was it? OpenAI’s new chatbot, ChatGPT, presents us with a problem: How will we know whether what we read online is written by a human or a machine?

Since it was released in late November, ChatGPT has been used by over a million people. It has the AI community enthralled, and it is clear the internet is increasingly being flooded with AI-generated text. People are using it to come up with jokes, write children’s stories, and craft better emails.

ChatGPT is OpenAI’s spin-off of its large language model GPT-3, which generates remarkably human-sounding answers to questions that it’s asked. The magic, and danger, of these large language models lies in the illusion of correctness. The sentences they produce look right: they use the right kinds of words in the correct order. But the AI doesn’t know what any of it means. These models work by predicting the most likely next word in a sentence. They haven’t a clue whether something is correct or false, and they confidently present information as true even when it is not.

In an already polarized, politically fraught online world, these AI tools could further distort the information we consume. If they are rolled out into the real world in real products, the consequences could be devastating.

We’re in desperate need of ways to differentiate between human- and AI-written text in order to counter potential misuses of the technology, says Irene Solaiman, policy director at AI startup Hugging Face, who used to be an AI researcher at OpenAI and studied AI output detection for the release of GPT-3’s predecessor GPT-2.

New tools will also be crucial to enforcing bans on AI-generated text and code, like the one recently announced by Stack Overflow, a website where coders can ask for help. ChatGPT can confidently regurgitate answers to software problems, but it’s not foolproof. Getting code wrong can lead to buggy and broken software, which is expensive and potentially chaotic to fix.

A spokesperson for Stack Overflow says that the company’s moderators are “examining thousands of submitted community member reports via a number of tools including heuristics and detection models” but would not go into more detail.

In reality, detecting AI-generated text is incredibly difficult, and the ban is likely almost impossible to enforce.

Today’s detection tool kit

There are various ways researchers have tried to detect AI-generated text. One common method is to use software to analyze different features of the text—for example, how fluently it reads, how frequently certain words appear, or whether there are patterns in punctuation or sentence length.

“If you have enough text, a really easy cue is the word ‘the’ occurs too many times,” says Daphne Ippolito, a senior research scientist at Google Brain, the company’s research unit for deep learning.

Because large language models work by predicting the next word in a sentence, they are more likely to use common words like “the,” “it,” or “is” instead of wonky, rare words. This is exactly the kind of text that automated detector systems are good at picking up, Ippolito and a team of researchers at Google found in research they published in 2019.
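To make the word-frequency cue concrete, here is a minimal sketch that measures what share of a text consists of a few very common words. The word list contains only the three examples quoted above, and the function name is an assumption for illustration. It is a single feature, not a detector, and real systems would combine many such signals over much longer texts.

```python
import re

# Only the examples named in the text; not a tuned or complete list.
COMMON_WORDS = {"the", "it", "is"}


def common_word_rate(text):
    """Share of words in text that belong to COMMON_WORDS."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return sum(w in COMMON_WORDS for w in words) / len(words)


sample = "The cat is on the mat and it is asleep."
rate = common_word_rate(sample)
print(f"{rate:.2f}")
```

The rate only becomes meaningful when compared across texts of similar length and genre. As Ippolito notes, the cue depends on having enough text.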

But Ippolito’s study also showed something interesting: the human participants tended to think this kind of “clean” text looked better and contained fewer mistakes, and thus that it must have been written by a person.

In reality, human-written text is riddled with typos and is incredibly variable, incorporating different styles and slang, while “language models very, very rarely make typos. They’re much better at generating perfect texts,” Ippolito says.

“A typo in the text is actually a really good indicator that it was human written,” she adds.

Large language models themselves can also be used to detect AI-generated text. One of the most successful ways to do this is to retrain the model on some texts written by humans, and others created by machines, so it learns to differentiate between the two, says Muhammad Abdul-Mageed, who is the Canada research chair in natural-language processing and machine learning at the University of British Columbia and has studied detection.
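The idea of learning from labelled examples of both kinds can be sketched at toy scale. The example below deliberately swaps the large language model for a much simpler technique, a bag-of-words Naive Bayes classifier with add-one smoothing, and trains it on four invented sentences. The class name, the training texts, and the labels are all assumptions for illustration; this is not the method Abdul-Mageed studied.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = {}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.vocab = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus smoothed log likelihood of each word.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented training samples, labelled by hand for this sketch.
train_texts = [
    "omg teh game was sooo good lol cant wait",
    "ugh my train was late agian, typical monday",
    "The game was an enjoyable experience overall.",
    "The train is a reliable mode of transportation.",
]
train_labels = ["human", "human", "machine", "machine"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("The experience is enjoyable and reliable."))
print(model.predict("lol teh monday train agian"))
```

With so little data the classifier is only memorizing vocabulary. The point is the training setup, where both human and machine examples are needed, rather than the accuracy.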

Scott Aaronson, a computer scientist at the University of Texas on secondment as a researcher at OpenAI for a year, meanwhile, has been developing watermarks for longer pieces of text generated by models such as GPT-3—“an otherwise unnoticeable secret signal in its choices of words, which you can use to prove later that, yes, this came from GPT,” he writes in his blog.

A spokesperson for OpenAI confirmed that the company is working on watermarks, and said its policies state that users should clearly indicate text generated by AI “in a way no one could reasonably miss or misunderstand.”

But these technical fixes come with big caveats. Most of them don’t stand a chance against the latest generation of AI language models, as they are built on GPT-2 or other earlier models. Many of these detection tools work best when there is a lot of text available; they will be less efficient in some concrete use cases, like chatbots or email assistants, which rely on shorter conversations and provide less data to analyze. And using large language models for detection also requires powerful computers, and access to the AI model itself, which tech companies don’t allow, Abdul-Mageed says.

The bigger and more powerful the model, the harder it is to build AI models to detect what text is written by a human and what isn’t, says Solaiman.

“What’s so concerning now is that [ChatGPT has] really impressive outputs. Detection models just can’t keep up. You’re playing catch-up this whole time,” she says.

Training the human eye

There is no silver bullet for detecting AI-written text, says Solaiman. “A detection model is not going to be your answer for detecting synthetic text in the same way that a safety filter is not going to be your answer for mitigating biases,” she says.

To have a chance of solving the problem, we’ll need improved technical fixes and more transparency around when humans are interacting with an AI, and people will need to learn to spot the signs of AI-written sentences.

“What would be really nice to have is a plug-in to Chrome or to whatever web browser you’re using that will let you know if any text on your web page is machine generated,” Ippolito says.

Some help is already out there. Researchers at Harvard and IBM developed a tool called Giant Language Model Test Room (GLTR), which supports humans by highlighting passages that might have been generated by a computer program.

But AI is already fooling us. Researchers at Cornell University found that people rated fake news articles generated by GPT-2 as credible about 66% of the time.

Another study found that untrained humans were able to correctly spot text generated by GPT-3 only at a level consistent with random chance.

The good news is that people can be trained to be better at spotting AI-generated text, Ippolito says. She built a game to test how many sentences a computer can generate before a player catches on that it’s not human, and found that people got gradually better over time.

“If you look at lots of generative texts and you try to figure out what doesn’t make sense about it, you can get better at this task,” she says. One way is to pick up on implausible statements, like the AI saying it takes 60 minutes to make a cup of coffee.


GPT Essay Checker for Students

How to Interpret the Result of AI Detection

To use our GPT checker, you won’t need to do any preparation work!

Take these 3 steps:

- Copy and paste the text you want to be analyzed.

- Click the button.

- Follow the prompts to interpret the result.

Our AI detector doesn’t give a definitive answer. It’s only a free beta test that will be improved later. For now, it provides a preliminary conclusion and analyzes the provided text using the color-coding system that you can see above the analysis.

It is you who decides whether the text is written by a human or AI:

- Your text was likely generated by an AI if it is mostly red with some orange words. This means that the word choice of the whole document is nowhere near unique or unpredictable.

- Your text looks unique and human-made if our GPT essay checker adds plenty of orange, green, and blue to the color palette.
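The color-coding rule of thumb above can be sketched as follows. The sketch assumes that each word has already been given a predictability rank by some language model (1 means the model's top guess for that position). The rank thresholds, the 60% cut-off, and the function names are hypothetical values chosen for illustration, not the checker's actual settings.

```python
from collections import Counter

# Hypothetical rank limits for each color; anything above the last limit is blue.
BUCKETS = [(10, "red"), (100, "orange"), (1000, "green")]


def color_for_rank(rank):
    """Map a word's predictability rank to a display color."""
    for limit, color in BUCKETS:
        if rank <= limit:
            return color
    return "blue"


def mostly_red(ranks, red_share=0.6):
    """True when at least red_share of the words fall in the red bucket."""
    if not ranks:
        return False
    colors = Counter(color_for_rank(r) for r in ranks)
    return colors["red"] / len(ranks) >= red_share


# Made-up ranks for two ten-word passages.
predictable_ranks = [1, 3, 2, 45, 1, 7, 2, 5, 60, 1]
varied_ranks = [1, 250, 12, 4000, 3, 900, 77, 15000, 2, 40]
print(mostly_red(predictable_ranks), mostly_red(varied_ranks))
```

As the page says, the final judgment stays with the reader. A helper like `mostly_red` only summarizes the palette.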


ChatGPT in Essay Writing – the Shortcomings

- The tool doesn’t know anything about what happened after 2021. Recent history is not its strong suit. Sometimes it also needs to be corrected about earlier events. For instance, ask it about Heathrow Terminal 1. The program will tell you it is functioning, although it has been closed since 2015.

- The reliability of answers is questionable. AI takes information from the web, which abounds in fake news, bias, and conspiracy theories.

- References also need to be checked. The links that the tool generates are sometimes incorrect, and sometimes even fake.

- Two AI-generated essays on the same topic can be very similar. Although a plagiarism checker will likely consider the texts original, your teacher will easily see the same structure and arguments.

- ChatGPT essay detectors are being actively developed now. Traditional plagiarism checkers are not good at finding texts made by ChatGPT. But this does not mean that an AI-generated piece cannot be detected at all.

🕵 How Do GPT Checkers Work?

An AI-generated text is too predictable. Each word is chosen based on how frequently it appears in that particular context.

Thus, its strength (being lifelike) is also what makes it easy for ChatGPT detectors to spot.

Once again, conventional anti-plagiarism essay checkers won’t work here, simply because the writing is technically original. Even so, it will be too similar to hundreds of other texts covering the same topic.

Here’s an everyday example. Two people have a baby. When kids become adults, they are very much like their parents. But can we tell that this particular person is the child of those two people? Not without a genetic test. This GPT essay checker is a paternity test for written content.


Updated: Jul 19th, 2024



A college student created an app that can tell whether AI wrote an essay

Emma Bowman

GPTZero in action: The bot correctly detected AI-written text. The writing sample that was submitted? ChatGPT's attempt at "an essay on the ethics of AI plagiarism that could pass a ChatGPT detector tool." (GPTZero.me/Screenshot by NPR)

Teachers worried about students turning in essays written by a popular artificial intelligence chatbot now have a new tool of their own.

Edward Tian, a 22-year-old senior at Princeton University, has built an app to detect whether text is written by ChatGPT, the viral chatbot that's sparked fears over its potential for unethical uses in academia.

Edward Tian, a 22-year-old computer science student at Princeton, created an app that detects essays written by the impressive AI-powered language model known as ChatGPT. (Edward Tian)

Tian, a computer science major who is minoring in journalism, spent part of his winter break creating GPTZero, which he said can "quickly and efficiently" decipher whether a human or ChatGPT authored an essay.

His motivation to create the bot was to fight what he sees as an increase in AI plagiarism. Since the release of ChatGPT in late November, there have been reports of students using the breakthrough language model to pass off AI-written assignments as their own.

"there's so much chatgpt hype going around. is this and that written by AI? we as humans deserve to know!" Tian wrote in a tweet introducing GPTZero.

Tian said many teachers have reached out to him after he released his bot online on Jan. 2, telling him about the positive results they've seen from testing it.

More than 30,000 people had tried out GPTZero within a week of its launch. It was so popular that the app crashed. Streamlit, the free platform that hosts GPTZero, has since stepped in to support Tian with more memory and resources to handle the web traffic.

How GPTZero works

To determine whether an excerpt is written by a bot, GPTZero uses two indicators: "perplexity" and "burstiness." Perplexity measures the complexity of text; if GPTZero is perplexed by the text, then it has a high complexity and it's more likely to be human-written. However, if the text is more familiar to the bot — because it's been trained on such data — then it will have low complexity and therefore is more likely to be AI-generated.
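For readers who want the arithmetic, the standard language-modeling definition of perplexity is the exponential of the average negative log-probability that a model assigns to each token. The sketch below applies that definition to two made-up probability lists. The article does not say how GPTZero computes its score, so the function and the numbers are assumptions for illustration only.

```python
import math


def perplexity(token_probs):
    """exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Hypothetical per-token probabilities from some language model.
familiar_text = [0.5, 0.5, 0.5, 0.5]    # the model found each word easy to guess
surprising_text = [0.1, 0.1, 0.1, 0.1]  # the model found each word hard to guess

print(perplexity(familiar_text))
print(perplexity(surprising_text))
```

The lower value corresponds to text the model finds familiar, which is the case the article describes as more likely to be AI-generated.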

Separately, burstiness compares the variations of sentences. Humans tend to write with greater burstiness, for example, with some longer or complex sentences alongside shorter ones. AI sentences tend to be more uniform.
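One simple way to put a number on sentence variation is the standard deviation of sentence lengths, sketched below. This is an assumed stand-in for the idea: the article does not give GPTZero's formula, and the naive sentence splitter here will mishandle abbreviations and quotations.

```python
import re
import statistics


def sentence_lengths(text):
    """Word counts of the sentences in text, split on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Population standard deviation of sentence length, in words."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths)


uniform = "The sky is blue. The sea is deep. The sun is warm."
varied = "Stop. After hours of walking through the rain we finally saw the cabin. It was locked."

print(burstiness(uniform), burstiness(varied))
```

The uniform passage scores zero because every sentence has the same length, while the mix of very short and long sentences in the second passage produces a higher score.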

In a demonstration video, Tian compared the app's analysis of a story in The New Yorker and a LinkedIn post written by ChatGPT. It successfully distinguished writing by a human versus AI.


Tian acknowledged that his bot isn't foolproof, as some users have reported when putting it to the test. He said he's still working to improve the model's accuracy.

But by designing an app that sheds some light on what separates human from AI, the tool helps work toward a core mission for Tian: bringing transparency to AI.

"For so long, AI has been a black box where we really don't know what's going on inside," he said. "And with GPTZero, I wanted to start pushing back and fighting against that."

The quest to curb AI plagiarism


The college senior isn't alone in the race to rein in AI plagiarism and forgery. OpenAI, the developer of ChatGPT, has signaled a commitment to preventing AI plagiarism and other nefarious applications. Last month, Scott Aaronson, a researcher currently focusing on AI safety at OpenAI, revealed that the company has been working on a way to "watermark" GPT-generated text with an "unnoticeable secret signal" to identify its source.

The open-source AI community Hugging Face has put out a tool to detect whether text was created by GPT-2, an earlier version of the AI model used to make ChatGPT. A philosophy professor in South Carolina who happened to know about the tool said he used it to catch a student submitting AI-written work.

The New York City education department said on Thursday that it's blocking access to ChatGPT on school networks and devices over concerns about its "negative impacts on student learning, and concerns regarding the safety and accuracy of content."

Tian is not opposed to the use of AI tools like ChatGPT.

GPTZero is "not meant to be a tool to stop these technologies from being used," he said. "But with any new technologies, we need to be able to adopt it responsibly and we need to have safeguards."

Free AI Detector

Identify AI-generated content, including ChatGPT and Copilot, with Scribbr's free AI detector

Why use Scribbr’s AI Detector

Authority on AI and plagiarism

Our plagiarism and AI detection tools and helpful content are used by millions of users every month.

Unlimited free AI checks

Perform an unlimited number of AI content checks for free, ensuring all of your work is authentic.

No sign-up required

Start detecting AI-generated content instantly, without having to create an account.

Confidentiality guaranteed

Rest easy knowing your submissions remain private; we do not store or share your data.

AI Detector for ChatGPT, Copilot, Gemini, and more

Scribbr’s AI Detector confidently detects texts generated by the most popular tools, like ChatGPT, Gemini, and Copilot.

Our advanced AI checker tool can detect the latest models, like GPT4, with high accuracy.

Note that no AI Detector can provide complete accuracy (see our research). As language models continue to develop, detection tools will always have to race to keep up with them.

The AI Detector is perfect for...

Confidently submit your papers

Scribbr’s AI Detector helps ensure that your essays and papers adhere to your university guidelines.

- Verify the authenticity of your sources, ensuring that you only present trustworthy information.

- Identify any AI-generated content, like that created by ChatGPT, that might need proper attribution.

Check the authenticity of your students’ work

More and more students are using AI tools like ChatGPT in their writing process.

- Analyze the content submitted by your students, ensuring that their work is actually written by them.

- Promote a culture of honesty and originality among your students.

Prevent search algorithm penalties

Ensure that your content is indexed by publishing high-quality and original content.

- Analyze the authenticity of articles written by external contributors or agencies before publishing them.

- Deliver unique content that engages your audience and drives traffic to your website.


Your questions, answered

Scribbr’s AI Detectors can confidently detect most English texts generated by popular tools like ChatGPT, Gemini, and Copilot.

Our free AI detector can detect GPT2, GPT3, and GPT3.5 with average accuracy, while the Premium AI Detector has high accuracy and the ability to detect GPT4.

Our AI Detector can detect most texts generated by popular tools like ChatGPT and Bard. Unfortunately, we can’t guarantee 100% accuracy. The software works especially well with longer texts but can make mistakes if the AI output was prompted to be less predictable or was edited or paraphrased after being generated.

Our research into the best AI detectors indicates that no tool can provide complete accuracy; the highest accuracy we found was 84% in a premium tool or 68% in the best free tool.

The AI score is a percentage between 0% and 100%, indicating the likelihood that a text has been generated by AI.



How To Tell if AI Wrote an Essay: 5 Effective Methods

At the 2016 Rio Olympics, The Washington Post made the choice to use automated storytelling for their coverage of the event.

They created an exclusive digital platform filled with AI content creation tools needed by today’s publishers.

Because of their platform, the writers could produce more than 850 stories. This puts them above the rest when it comes to providing coverage, making them a top choice for readers.

This is how AI-generated content is done right. Having timely narratives alongside traditional reporting is a smart use of technology.

That being said, like most great things, AI can also be abused.

As a professional educator, researcher, or just a curious reader, it’s important to know how to tell if AI wrote an essay.

Because of how advanced AI content creation has gotten, it may not be easy to tell at first glance which content is human-written and which is AI-generated.

Here’s everything you need to know about AI-content detection.

5 Strategies for Identifying AI-Written Essays

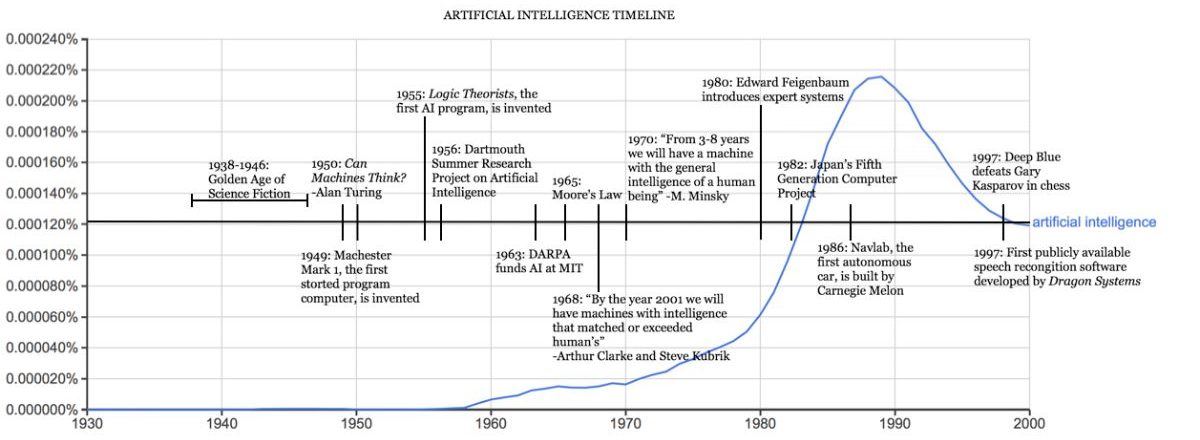

AI has come a long way since the 1950s, when Alan Turing proposed the Turing Test as a way to measure a machine’s ability to display human-like intelligence.

Basically, if a machine can engage with a human without being detected as a machine, it passes the test.

Decades later, AI is now used for content creation.

This is all thanks to how technology has evolved with natural language processing (NLP) and other machine learning algorithms that can analyze huge amounts of data and create human-like responses.

AI is now widely used, especially in academic contexts. Without responsible AI use, the authenticity and transparency that institutions protect are tarnished.

Even in college admissions, knowing how to tell if an essay is AI-generated is becoming a priority.

Colleges and universities are taking proactive measures to prevent AI from influencing admission decisions.

They are now investing in tools and planning multiple strategies to prevent the submission of AI-written material.

To help you out with this challenge, here are five effective strategies to identify AI-written content.

1. Using AI Content Detection Tools

Consider a teacher receiving a big batch of electronically submitted student essays.

As she reviews them, she notices one that gives off a very unusual writing style. With the workload she’s handling, checking manually can be a hassle.

Thanks to AI content detection tools, she can easily submit the text and check.

This lets her identify essays that might require a closer look, ensuring the academic integrity and fairness of grading stays intact.

Remember that we now live in a time where AI plagiarism actually exists.

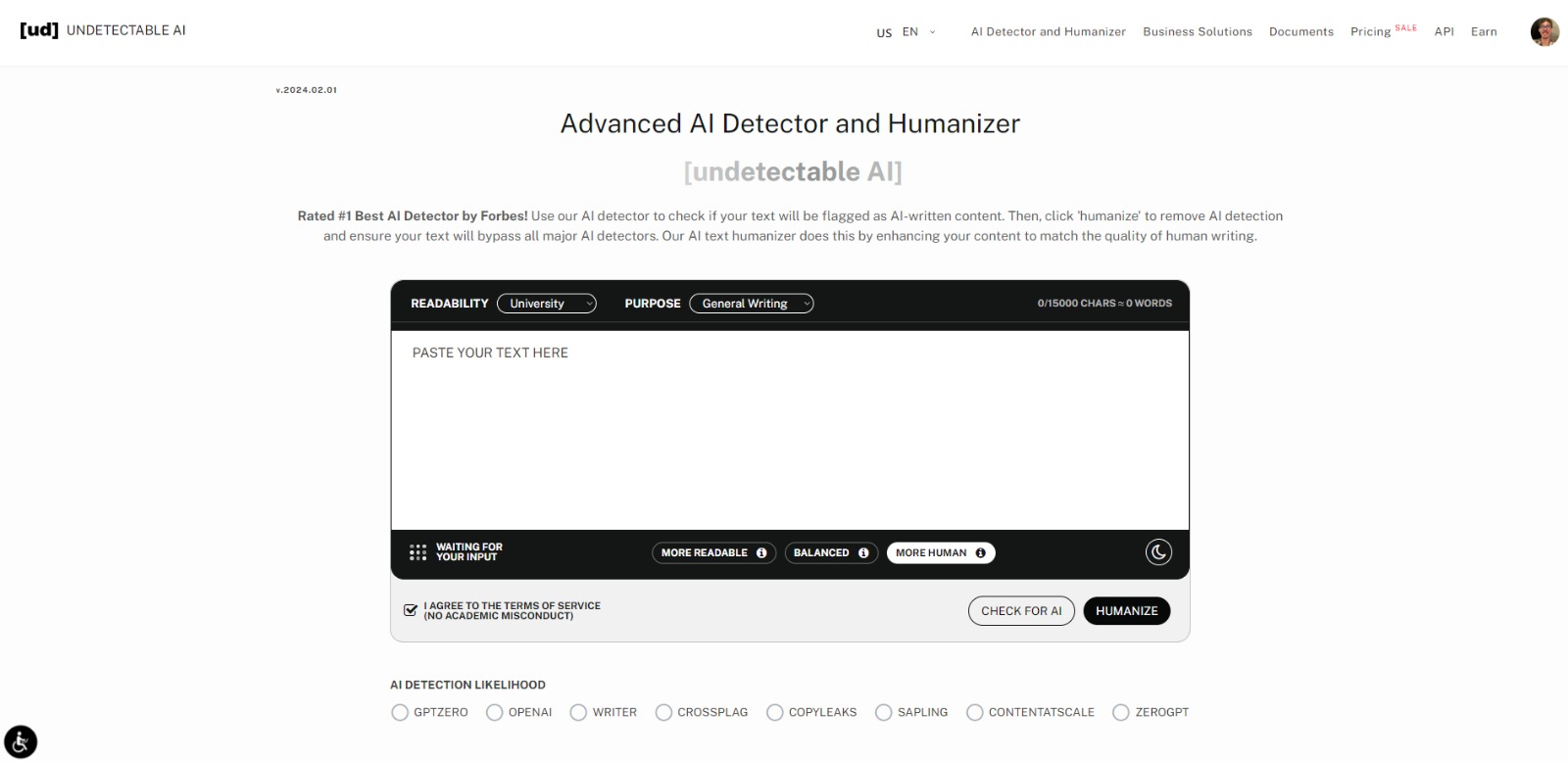

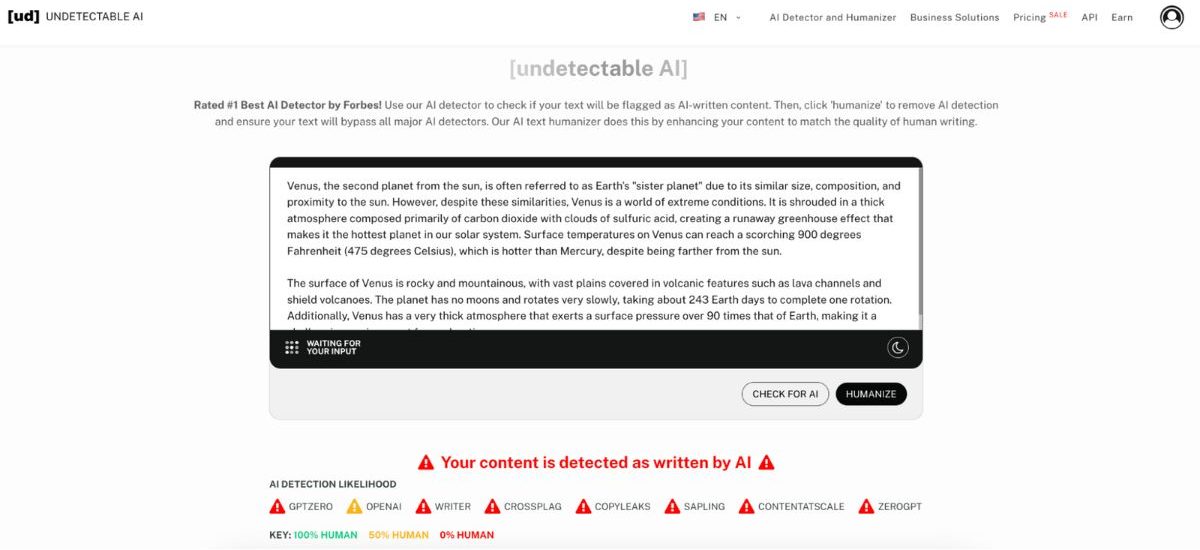

For instance, a highly-rated AI content detector like Undetectable AI can detect AI content with unparalleled accuracy.

It can quickly find inconsistencies, deviations, and unnatural language within seconds – all wrapped in an easy-to-use interface.

Here are some ways that AI detectors like Undetectable AI are commonly used.

- Educational institutions and academic platforms can use AI content detection tools to screen assignments submitted by students.

- Publishers, media outlets, and content creators use AI content detection to evaluate how authentic their written content is before reaching publication.

- Researchers and scholars rely on AI content detection tools to prevent the spread of misinformation or inaccurate findings.

- Beyond just identifying AI-written essays, AI content detection includes comparing a vast database of content to make sure that the written text is not plagiarized.

- Content producers and marketers take advantage of AI tools to preserve brand reputation by checking that the writing style and tone are consistent.

- AI content detection tools assist in verifying the authenticity of written documents and maintaining compliance with intellectual property laws.

With the use of AI content detection tools, it will be much easier for institutions and organizations to adapt to the rise of AI use across various professions.

But when AI is used correctly, it can help automate processes, lessen routine work, and empower professionals to work harder on what makes an impact.

It’s a combo move that’s worth putting in the effort.

2. Analyzing Tone and Style

A simple yet effective hands-on way to tell if an essay is AI-generated is to look at how the written content sounds.

You can usually watch out for these red flags.

- Real human-written essays usually show some sort of “natural variability.” This means that there is a tone that’s specific to the writer, making it uniquely theirs.

- AI content generators have a really hard time incorporating human emotion into their content. These generators create content purely based on a prompt and usually feel very generic and, as cliché as it sounds, robotic.

- There’s a lack of relatable experiences and fresh perspectives, basically looking like the text was paraphrased from an already existing article.

- AI isn’t capable of going deeper into complex topics. There’s a huge lack of sophistication in the style of writing, being unable to provide clear analysis, interpretation, and insights.

By honing your observation skills, you, as a human reader, can instinctively detect these subtle nuances.

While automated tools can undoubtedly help with identifying AI-written essays, the power of human observation never goes away.

3. Identifying Lack of Personal Touch

When it comes to writing, the personal touch is the unique style that makes a written work uniquely the author’s.

It’s the individual’s voice and connection to the reader that makes essays feel real. This is why identifying a lack of personal touch is one of the best ways to know if something is AI-generated.

While AI tools like ChatGPT certainly have promise for the future of academic research, writers and researchers need to acknowledge their current limitations and approach them with caution.

As H. Holden Thorp, Editor-in-Chief of the Science family of journals, points out, “ChatGPT is fun, but not an author.”

While AI content tools can be useful tools for inspiration and idea generation, we need to think ethically and make sure that the content we produce stays accurate and reliable.

4. Spotting Repetitive Language

One of the biggest telltale signs of AI-generated content is repetitive language. AI algorithms rely heavily on established patterns and templates to produce text.

As a result, AI-generated content usually shows a lot of repetitions, which shows how limited the algorithm’s programming is.

From the get-go, you will notice how monotonous the text is. This is because the repetitive texts and phrases used are also generic.

AI has a limited vocabulary when generating texts, which really stands out when you read them.

While repeating words doesn’t automatically mean something’s AI content, it’s a supplementary action that you can take note of to know if written content feels off.
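If you want to put a rough number on repetition, here is a minimal sketch. It is our own illustration, not how any particular detector works, and the function names are hypothetical. It measures the share of distinct words in a text (the type-token ratio) and lists the three-word phrases that appear more than once.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(text):
    """Share of distinct words among all words; lower means more repetition."""
    tokens = tokenize(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def repeated_ngrams(text, n=3):
    """Return the n-word phrases that occur more than once, with their counts."""
    tokens = tokenize(text)
    grams = Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return {" ".join(gram): count for gram, count in grams.items() if count > 1}


sample = "It is important to note that it is important to note this."
print(type_token_ratio(sample))
print(repeated_ngrams(sample))
```

As with the manual check, a low ratio or a long list of repeated phrases is only a hint that a text deserves a closer look, not proof of AI authorship.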

5. Assessing Accuracy and Relevance

If you instruct an AI content generator to provide a statistic on the number of people who wake up from their sleep and walk down the stairs to drink a glass of milk, it is most likely to do so.

But what it doesn’t do is verify whether the information it just provided is correct or if it even exists.

The data AI provides is limited to the data it was trained on. This means that the information can be outdated.

There can also be inconsistencies and even bias, depending on where the data comes from.

It’s scary to think that AI-generated content can be alarmingly convincing.

People are 3% less likely to spot inaccurate information when content is AI-written because AI makes content condensed and easy to process.

This is why it’s important to be cautious about how you use information online.

Importance of Distinguishing Human and AI-Crafted Essays

In a nutshell, it’s important to recognize the differences between human and AI content to maintain authenticity, prevent misinformation, and avoid plagiarism.

Here’s a rundown of why you should distinguish human from AI work.

- Preventing plagiarism and ensuring that students receive recognition for their original work.

- Preserving the credibility of published works, research findings, and journalistic pieces.

- Fighting the spread of fake news online.

- Clarifying the origin of essays supports ethical practices in writing and publishing.

Take note that this does not mean that we should outright ban the use of AI.

We should encourage responsible innovation in the field, and find the balance between using AI technology for efficiency and still being able to maintain the unique value of human creativity and expression.

How to Humanize Your Essays with Undetectable

Knowing how to tell if AI wrote an essay takes a lot of time when done manually, but thankfully, tools like Undetectable AI exist to make it easier. Just paste the text, and there you have it.

But if you were researching and found some great ideas with the help of AI, you can use our AI humanizer to ensure that any text you write stays real. This dual functionality offers peace of mind.

Create authentic and engaging essays with confidence, all without having to sacrifice the personal touch that sets human writing apart.

With both tools available on one platform, you can enjoy even more creativity, efficiency, and reliability when you write.


With some human intuition, you can identify if any written work is made by a human or AI. With these strategies, you can be more confident about the information you read.

But it doesn’t hurt to consider the use of AI content detection tools to ease the process.

It can be time-consuming to manually do all the work, so having Undetectable by your side is a good idea.

With its advanced AI detection capabilities and humanization features, Undetectable lets you tell the difference between human and AI essays within seconds.

We live in a time where human and AI-generated content continues to blur, so by staying informed and taking a proactive approach, we can protect ourselves from misinformation while still being able to maximize the benefits of AI technology for content creation.

Student Creates App to Detect Essays Written by AI

In response to the text-generating bot ChatGPT, the new tool measures sentence complexity and variation to predict whether an author was human


Margaret Osborne

Daily Correspondent


In November, artificial intelligence company OpenAI released a powerful new bot called ChatGPT, a free tool that can generate text about a variety of topics based on a user’s prompts. The AI quickly captivated users across the internet, who asked it to write anything from song lyrics in the style of a particular artist to programming code.

But the technology has also sparked concerns of AI plagiarism among teachers, who have seen students use the app to write their assignments and claim the work as their own. Some professors have shifted their curricula because of ChatGPT, replacing take-home essays with in-class assignments, handwritten papers or oral exams, reports Kalley Huang for the New York Times.

“[ChatGPT] is very much coming up with original content,” Kendall Hartley, a professor of educational training at the University of Nevada, Las Vegas, tells Scripps News. “So, when I run it through the services that I use for plagiarism detection, it shows up as a zero.”

Now, a student at Princeton University has created a new tool to combat this form of plagiarism: an app that aims to determine whether text was written by a human or AI. Twenty-two-year-old Edward Tian developed the app, called GPTZero, while on winter break and unveiled it on January 2. Within the first week of its launch, more than 30,000 people used the tool, per NPR’s Emma Bowman. On Twitter, it has garnered more than 7 million views.

GPTZero uses two variables to determine whether the author of a particular text is human: perplexity, or how complex the writing is, and burstiness, or how variable it is. Text that’s more complex with varied sentence length tends to be human-written, while prose that is more uniform and familiar to GPTZero tends to be written by AI.
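To make the two measurements concrete, here is a toy sketch. It is not GPTZero's implementation, which is not described in this article. In language modeling, perplexity usually means how surprising a text is to a model, so text that is "familiar" to the model scores low. The sketch assumes a deliberately simple stand-in model (word frequencies from a reference text, with add-one smoothing) and treats burstiness as the standard deviation of sentence lengths. Both function names are hypothetical.

```python
import math
import re
from collections import Counter


def words(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference):
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`."""
    tokens = words(text)
    if not tokens:
        return float("nan")
    ref_counts = Counter(words(reference))
    vocab = set(ref_counts) | set(tokens)
    total = sum(ref_counts.values()) + len(vocab)
    log_prob = sum(math.log((ref_counts[w] + 1) / total) for w in tokens)
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Standard deviation of sentence lengths, measured in words."""
    lengths = [len(words(s)) for s in re.split(r"[.!?]+", text) if words(s)]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    return math.sqrt(sum((n - mean) ** 2 for n in lengths) / len(lengths))


reference = "the cat sat on the mat"
print(unigram_perplexity("the the", reference))  # familiar words: lower value
print(unigram_perplexity("zebra", reference))    # unseen word: higher value
print(burstiness("Hi. This sentence is much longer than the first."))
```

A real detector would swap the unigram model for a large language model, but the intuition is the same: low perplexity and low burstiness together suggest uniform, predictable prose.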

But the app, while almost always accurate, isn’t foolproof. Tian tested it out using BBC articles and text generated by AI when prompted with the same headline. He tells BBC News’ Nadine Yousif that the app determined the difference with a less than 2 percent false positive rate.

“This is at the same time a very useful tool for professors, and on the other hand a very dangerous tool—trusting it too much would lead to exacerbation of the false flags,” writes one GPTZero user, per the Guardian ’s Caitlin Cassidy.

Tian is now working on improving the tool’s accuracy, per NPR. And he’s not alone in his quest to detect plagiarism. OpenAI is also working on ways that ChatGPT’s text can easily be identified.

“We don’t want ChatGPT to be used for misleading purposes in schools or anywhere else,” a spokesperson for the company tells the Washington Post’s Susan Svrluga in an email. “We’re already developing mitigations to help anyone identify text generated by that system.” One such idea is a watermark, or an unnoticeable signal that accompanies text written by a bot.

Tian says he’s not against artificial intelligence, and he’s even excited about its capabilities, per BBC News. But he wants more transparency surrounding when the technology is used.

“A lot of people are like … ‘You’re trying to shut down a good thing we’ve got going here!’” he tells the Post. “That’s not the case. I am not opposed to students using AI where it makes sense. … It’s just we have to adopt this technology responsibly.”


Margaret Osborne is a freelance journalist based in the southwestern U.S. Her work has appeared in the Sag Harbor Express and has aired on WSHU Public Radio.

How to tell if something is written by AI

New Tool Can Tell If Something Is AI-Written With 99% Accuracy


A new study found an AI detector developed by the University of Kansas can detect AI-generated content in academic papers with a 99% accuracy rate, one of the only detectors on the market specifically geared toward academic writing.

Genuine human error? Perhaps, but at least not artificial intelligence.

According to a report published Wednesday in the journal Cell Reports Physical Science, researchers created a tool that can detect AI-generated text in academic papers with 99% accuracy.

The team of researchers selected 64 perspectives (a type of article) and used them to make 128 articles using ChatGPT, which were then used to train the AI detector.

The model had a 100% accuracy rate in distinguishing human-created articles from AI-generated ones, and a 92% accuracy rate for identifying specific paragraphs within the text.

According to a survey by BestColleges.com, 89% of college students have admitted to using ChatGPT to help with assignments, while 34% of educators believe the software should be banned, though 66% support students having access.

Crucial Quote

“Right now, there are some pretty glaring problems with AI writing," lead author Heather Desaire said in a statement. "One of the biggest problems is that it assembles text from many sources and there isn't any kind of accuracy check—it's kind of like the game Two Truths and a Lie."

AI detectors have not proven to be 100% accurate. A University of California at Davis student alleges she was falsely accused by her university of cheating with AI. After she uploaded a paper for one of her classes, she received an email from her professor saying a portion had been flagged by the program Turnitin as AI-generated. Her case was immediately forwarded to the Office of Student Support and Judicial Affairs, which handles discipline for academic misconduct. The student pleaded her case and ultimately won, using time stamps to prove she wrote the paper.

This wasn’t the only time educators falsely labeled assignments as AI-generated. A Texas A&M University-Commerce professor attempted to flunk over half of his senior class after using ChatGPT to test whether the students had used the chatbot to write their papers. He copied and pasted the papers into ChatGPT and asked if it wrote them, to which it replied yes. This caused their diplomas to be withheld by the university, though the professor offered the opportunity to redo the assignment. However, the university confirmed to Insider that no students were failed or barred from graduating.

Key Background

OpenAI’s chatbot, ChatGPT, was opened to the public in November 2022, and in less than a week surpassed the million-users mark, with people using it for things like creating code and writing essays. The AI’s intelligence led to several schools either indefinitely or temporarily banning the software, including New York Public Schools, Seattle Public Schools and the Los Angeles Unified School District.

There are already many existing programs and services that promise to identify AI-written content.

- Turnitin released an AI detection tool for papers. Previously, it could only check for plagiarism. The feature has been added to its similarity report and shows an overall percentage of the amount of work AI software generated within the paper. The company claims its AI detection tool is 98% accurate at spotting AI-written work. Its detection model is trained to detect content from GPT-3 and GPT-3.5 language models, including ChatGPT. However, it claims that because GPT-4’s writing characteristics are similar to earlier models, it can detect content from this version “most of the time.”

- Copyleaks claims to have an AI detection accuracy rate of 99%. Its software can detect AI-generated text across several models, including GPT-4 and earlier versions, and content created with Jasper AI. It also says it can detect AI content in multiple languages, including Spanish, Russian, French, Dutch and German.

- Winston AI launched in February, and claims it performs this task with 99% accuracy. It only supports detection in English and French, though the company is looking to expand this soon to Spanish and German. It can detect content made using ChatGPT, Bard, Bing Chat, GPT-4 and other text generation tools.

- OpenAI’s Classifier launched in January to distinguish between AI-written and human-written text. Though these are the same makers behind ChatGPT, the tool isn’t very accurate. It has a success rate of around 26% and incorrectly labels human work as AI work 9% of the time. However, OpenAI claims the accuracy increases as the length of the text increases as well. It’s “very unreliable” on texts with 1,000 characters or less, and OpenAI only recommends using the software on documents written in English. The company also warns against using Classifier as the primary decision making tool and suggests using it to complement other methods of detection.

- AI Writing Check was developed by Quill and CommonLit to help teachers check for AI-created work in assignments. Its developers predict its accuracy is between 80% and 90%. It only allows detection for text up to 400 words at a time and for anything longer, users must break it down into sections. The detection software was created by OpenAI and is able to identify syntactic patterns within text that aren’t quite humanlike.
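Headline accuracy figures like those above don't say how often a flag is actually right, because that also depends on how common AI-written text is among the submissions being checked. The sketch below applies Bayes' rule to the two figures quoted for OpenAI's Classifier, reading the 26% as the share of AI-written text it correctly flags and the 9% as the share of human work it wrongly flags. The AI shares of 5%, 20% and 50% are assumptions chosen purely for illustration, and the function name is hypothetical.

```python
def flagged_precision(true_positive_rate, false_positive_rate, ai_share):
    """Probability that a flagged text really is AI-written (Bayes' rule)."""
    flagged_ai = true_positive_rate * ai_share
    flagged_human = false_positive_rate * (1 - ai_share)
    if flagged_ai + flagged_human == 0:
        return 0.0
    return flagged_ai / (flagged_ai + flagged_human)


# Assumed shares of AI-written texts in the pile being checked (illustrative only).
for ai_share in (0.05, 0.20, 0.50):
    precision = flagged_precision(0.26, 0.09, ai_share)
    print(f"AI share {ai_share:.0%}: {precision:.0%} of flagged texts are AI-written")
```

Under these assumptions, roughly 13%, 42% and 74% of flagged texts would really be AI-written, which helps explain why OpenAI warns against using the tool as the primary basis for a decision.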



A Princeton student built an app which can detect if ChatGPT wrote an essay to combat AI-based plagiarism

- A Princeton student built an app that aims to tell if essays were written by AIs like ChatGPT.

- The app analyzes text to see how randomly it is written, allowing it to detect if it was written by AI.

- The website hosting the app, built by Edward Tian, crashed due to high traffic.

A new app can detect whether your essay was written by ChatGPT, as researchers look to combat AI plagiarism.

Edward Tian, a computer science student at Princeton, said he spent the holiday period building GPTZero.

He shared two videos comparing the app's analysis of a New Yorker article and a letter written by ChatGPT. It correctly identified that they were respectively written by a human and AI.


GPTZero scores text on its "perplexity and burstiness" – referring to how complicated it is and how randomly it is written.

The app was so popular that it crashed "due to unexpectedly high web traffic," and currently displays a beta-signup page. GPTZero is still available to use on Tian's Streamlit page, after the website hosts stepped in to increase its capacity.

Tian, a former data journalist with the BBC, said that he was motivated to build GPTZero after seeing increased instances of AI plagiarism.


"Are high school teachers going to want students using ChatGPT to write their history essays? Likely not," he tweeted.

The Guardian recently reported that ChatGPT is introducing its own system to combat plagiarism by making it easier to identify, and watermarking the bot's output.

That follows The New York Times' report that Google issued a "code red" alert over the AI's popularity.

Insider's Beatrice Nolan also tested ChatGPT to write cover letters for job applications, with one hiring manager saying she'd have got an interview, though another said the letter lacked personality.

Tian added that he's planning to publish a paper with accuracy stats using student journalism articles as data, alongside Princeton's Natural Language Processing group.

OpenAI and Tian didn't immediately respond to Insider's request for comment, sent outside US working hours.


How AI Writing is Detected

September 11, 2024

By: Chaddeus

The rise of AI writing tools is transforming content creation, producing text often indistinguishable from human writing.

This raises concerns about the authenticity and reliability of online information, necessitating methods to differentiate between AI-generated and human-written content. Imagine reading content without knowing if it’s AI or human-authored.

This scenario raises ethical questions about academic integrity and the trustworthiness of product reviews.

AI writing’s popularity stems from:

- Advanced language models like GPT-4 producing high-quality text

- Abundant data for AI learning

- High demand for quality content

While AI writing tools offer convenience, their increasing use highlights the need for detection methods to maintain information integrity. Stick around to the end, though, where I share what I think is the real answer to all this.

[ Editor’s note: during the writing of this article we tested TONS of passages against several AI detection tools and it’s clear there are inconsistencies… and my guess is they err on the side of calling things AI.

I don’t want to call any particular tool out and the tools themselves are not the purpose of this article. But I did want to share my thoughts. ]


How Detection Works

The ability to identify AI-generated text relies on analyzing the subtle differences between human and machine-written content. Linguistic analysis, syntax, and semantic analysis are the core principles that underpin AI writing detection.

Let’s look a little closer:

Linguistic analysis focuses on the patterns and structures of language.

AI-generated text often exhibits predictable patterns, such as the overuse of certain words or phrases, or the absence of certain grammatical structures. For example, AI models might struggle with complex sentence structures, resulting in simpler sentences with repetitive phrases.

They might also overuse common idioms or clichés, creating a somewhat unnatural and repetitive style.

Syntax refers to the grammatical structure of sentences.

AI models often struggle with the complexities of human syntax, resulting in inconsistencies or errors in sentence structure. For instance, AI-generated text might have awkward phrasing, misplaced modifiers, or incorrect pronoun references.

These syntactic inconsistencies can be a telltale sign of AI authorship.

Semantic analysis examines the meaning and coherence of the text.

AI models often struggle with understanding the nuances of human language, resulting in text that lacks depth or coherence. For example, AI-generated text might fail to connect ideas logically, or it might use words in a way that is grammatically correct but semantically incorrect.

This lack of semantic depth can be a red flag for AI-generated content. By analyzing these linguistic, syntactic, and semantic features, AI detection tools can identify patterns and inconsistencies that are characteristic of AI-generated text.

These tools use sophisticated algorithms to compare the characteristics of a given text against a database of known AI-generated content, allowing them to assess the likelihood that the text was written by a machine. For example, a detection tool might analyze the frequency of certain words or phrases, the complexity of sentence structures, or the coherence of the overall text.
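Here's a rough sketch of what that kind of measurement could look like. It's my own illustration, not code from any detection tool: the `text_features` function and the `STOCK_PHRASES` watch list are made up for the example, and a real tool would learn which phrases matter from data.

```python
import re

# Hypothetical watch list; a real tool would learn such phrases from data.
STOCK_PHRASES = ("it is important to note", "in conclusion", "delve into")


def text_features(text):
    """Return a few simple stylistic measurements for one passage."""
    lowered = text.lower()
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    word_list = re.findall(r"[a-z']+", lowered)
    n_sentences = max(len(sentences), 1)  # avoid dividing by zero on empty input
    n_words = max(len(word_list), 1)
    stock_hits = sum(lowered.count(phrase) for phrase in STOCK_PHRASES)
    return {
        "words_per_sentence": len(word_list) / n_sentences,
        "commas_per_sentence": text.count(",") / n_sentences,
        "stock_phrases_per_100_words": 100 * stock_hits / n_words,
    }


print(text_features("In conclusion, it is important to note the results. They matter."))
```

On their own these numbers prove nothing. They only become useful when compared against the same measurements taken from text whose origin is known.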

If the text exhibits patterns that are consistent with AI-generated content, the tool might flag it as potentially AI-written. However, it’s important to note that AI writing detection is not a perfect science.

AI models are constantly improving, and it can be challenging to distinguish between human-written and AI-generated text with absolute certainty.

Add in Contextual Analysis

AI detection tools don’t just look at words and sentences in isolation.

They also look at the bigger picture. This is called contextual analysis, and it’s pretty clever stuff.

It looks at how everything fits together in a piece of writing to spot things that don’t quite match up with how humans typically write. You see, us humans have our own special way of putting thoughts on paper.

Our experiences, what we know, and how we feel all play a part in shaping our words. AI can string together grammatically correct sentences, sure, but it often misses the mark when it comes to capturing the subtle twists and turns of human thinking.

It’s like the difference between a paint-by-numbers picture and a freehand masterpiece. Both might look good at first glance, but there’s just something about the human touch that stands out when you look closer.

Think about a news piece on a scientific discovery. A human writer might throw in personal stories or expert opinions, giving the article more depth and a genuine feel.

An AI, though? It might just spit out the facts, missing that human spark.

Looking at how ideas flow can also reveal AI-written text. We humans tend to use little phrases to connect our thoughts, making our writing flow smoothly.

AI? Not so much.

It might struggle to link ideas, leaving you with a jumbled mess that’s hard to follow.

Here’s how we can spot AI-written stuff using context clues:

- Staying on topic: AI can be a bit scatterbrained, jumping from one thing to another without much rhyme or reason. Us humans? We’re pretty good at sticking to the point, backing up what we say, and making a solid case.

- Feeling the feels: We humans are emotional creatures, and it shows in our writing. AI? Not so much. It might try to fake it, but often ends up sounding about as emotional as a toaster.

- Cultural know-how: Our writing is flavored by our experiences and background. AI doesn’t have that personal touch, so its writing can come off as bland or culturally tone-deaf.

AI detectors are getting smarter: they don’t just look at the words, they examine the whole context of a piece. This helps them spot those little quirks that often give away AI-written text.

It’s not just one trick, though. These tools use a bunch of different methods, like picking apart the language and digging into the meaning.

By combining all these approaches, they’re better equipped to unmask AI’s sneaky attempts at mimicking human writing.

Machine Learning Adds Another Layer

Machine learning is like a digital Sherlock Holmes in AI content detection.

It’s a clever system that learns from experience, getting better and better at spotting the telltale signs that separate human writing from AI-generated text. These models are trained on huge collections of both human and AI-written content, helping them pick up on the unique quirks and patterns of each.

Picture a machine learning model devouring thousands of student essays. As it munches through these writings, it starts to recognize the hallmarks of human writing.

It might notice that we humans love to mix things up with complex sentences, throw in some fancy words, and sprinkle in personal anecdotes. Then, it’s time for a feast of AI-generated essays.

This helps the model spot the quirks and weak spots in AI writing. For example, it might catch on that AI tends to play it safe with simpler sentences, gets stuck on repeat with certain phrases, and struggles to convey those pesky human emotions.

The training process helps the AI model grasp the nuanced differences between human and machine-written text. It then applies this knowledge to analyze new writing samples and determine if they’re more likely penned by a person or a computer.
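To give a feel for that train-then-predict loop, here's a tiny sketch of a naive Bayes text classifier. This is a classic technique I've picked purely for illustration; I'm not claiming any detection tool works this way. The class name and the four training sentences are invented, and a real model would need far more data.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesDetector:
    """Multinomial naive Bayes over word counts with add-one smoothing."""

    def fit(self, texts, labels):
        self.word_counts = {label: Counter() for label in set(labels)}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokens(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label in sorted(self.word_counts):
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokens(text):
                if word in self.vocab:  # skip words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy training set, far too small for real use.
train_texts = [
    "honestly my grandma's kitchen smelled like burnt toast and i loved it",
    "we got lost twice, laughed, and missed the bus anyway",
    "it is important to note that technology plays a crucial role",
    "in conclusion, it is important to consider the crucial role of education",
]
train_labels = ["human", "human", "ai", "ai"]

detector = NaiveBayesDetector().fit(train_texts, train_labels)
print(detector.predict("it is important to note the crucial role of teamwork"))
```

The model only knows the words it has seen, which is one reason detectors need huge, regularly refreshed training sets to keep up with new AI writing tools.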

Thanks to leaps in machine learning, AI writing detection has become much more accurate. As these models keep learning and growing, they’re getting better at spotting even the cleverest AI-generated content.

They dive deep into the text, looking at things like how complex the sentences are, what words are used, and how well the whole story flows. This lets them make smarter guesses about where a piece of writing came from.

Machine learning is a game-changer in tackling AI-generated content. It helps us stay one step ahead as AI writing tools get fancier and churn out more convincing stuff.

By tapping into machine learning’s power, we can keep online info honest and make sure human creativity still shines through.

Ethical Considerations

The ability to generate undetectable AI content raises significant ethical concerns.

While AI writing tools can be a valuable resource for content creation, their potential for misuse poses a serious threat to academic integrity, plagiarism, and the future of content creation. The most immediate concern is the potential for students to use AI tools to write their essays and assignments, undermining the educational process.

Imagine a student submitting an essay that they didn’t actually write. This would be a form of plagiarism, and it would be unfair to the other students who put in the effort to write their own work.

Teachers and professors would need to develop new ways to assess student work and ensure that students are learning the material, not just using AI tools to get by. Furthermore, the use of AI to generate fake reviews could mislead consumers about the quality of products and services.

If businesses could use AI to create positive reviews for their products, it would be difficult for consumers to know which reviews are genuine and which are fake. This could harm businesses that are trying to provide honest and accurate information to their customers.

The potential for AI to generate false or misleading information could also have a significant impact on the future of content creation. Imagine a world where it’s impossible to know whether the news articles you’re reading or the social media posts you’re seeing are real or fake.

This could lead to a loss of trust in online information and make it difficult for people to make informed decisions.

It’s important to remember that AI is a tool, and like any tool, it can be used for good or for bad. It’s up to us to use AI responsibly and ethically. This means being aware of the potential risks and taking steps to mitigate them.

It also means promoting transparency and accountability in the use of AI, so that people can be confident that the information they are consuming is accurate and reliable.

AI Writing Detection Tools

The landscape of AI writing detection is always changing, with fresh tools popping up and old ones getting better.

These clever apps use a mix of language analysis, meaning interpretation, and machine learning to spot telltale signs and quirks that give away AI-written text. These tools are like digital detectives, sifting through words and sentences to find the fingerprints of artificial intelligence.

They’re constantly learning and adapting, just like the AI writing tools they’re trying to catch. It’s a bit of a cat-and-mouse game, really, with each side trying to outsmart the other.

Humbot, BypassGPT, Undetectable, etc. – These tools detect AI-generated content, providing scores that indicate the likelihood of AI authorship, sometimes with other features like plagiarism checking and content analysis.

They’re all pretty much the same thing; many differ only by a slight change to the same UI.

Strengths and Limitations of AI Detection Tools

While these tools offer valuable insights into the potential use of AI in writing, it’s important to acknowledge their limitations.

AI writing detection is not a perfect science, and these tools are constantly evolving to keep up with the advancements in AI writing technology.

Here are some of the key strengths and limitations of AI writing detection tools:

Strengths:

- Improved Accuracy: AI detection tools are becoming increasingly accurate as they are trained on larger datasets and refined with new algorithms. They are capable of identifying subtle patterns and inconsistencies that are difficult for humans to detect.

- Efficiency: AI detection tools can analyze text quickly and efficiently, providing results in real-time. This makes them a valuable tool for educators, publishers, and businesses that need to quickly assess the authenticity of content.

- Transparency: Many AI detection tools provide detailed reports that explain their findings, allowing users to understand the reasoning behind their assessments. This transparency can help build trust in the accuracy of these tools.

Limitations:

- False Positives: AI detection tools

How effective are AI Detection Tools?

AI writing detection tools are handy for spotting computer-generated text, but they’re not perfect.

It’s like a cat-and-mouse game between AI writers and detectors, with both sides constantly upping their game. As AI gets smarter at mimicking human writing, it’s becoming trickier for these tools to catch the fake stuff.

One big problem with current detection methods is that they’re too focused on number crunching. These tools often look at how often certain words or phrases pop up, or how sentences are put together, to figure out if a computer wrote it.
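To make the “number crunching” concrete, here is a minimal Python sketch of the kind of surface statistics described above: word frequencies, sentence lengths, and vocabulary variety. The function name `surface_features` and the choice of features are assumptions made for illustration; no specific detector is known to work exactly this way, and real tools feed many more signals into trained models.

```python
import re
from collections import Counter
from statistics import mean, pstdev


def surface_features(text: str, top_n: int = 3) -> dict:
    """Compute simple surface statistics of a text (illustrative only)."""
    # Crude sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    # Lowercased word tokens (letters and apostrophes only).
    words = re.findall(r"[a-z']+", text.lower())
    # Number of word tokens in each sentence.
    lengths = [len(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    counts = Counter(words)
    return {
        "sentence_count": len(sentences),
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        # How much sentence length varies across the text.
        "sentence_length_spread": pstdev(lengths) if lengths else 0.0,
        # Share of distinct words among all words (vocabulary variety).
        "type_token_ratio": len(counts) / len(words) if words else 0.0,
        # The most frequent words and their counts.
        "top_words": counts.most_common(top_n),
    }


if __name__ == "__main__":
    sample = "The cat sat. The cat ran fast! Why?"
    print(surface_features(sample, top_n=2))
```

Numbers like these say nothing about meaning or intent, which is why a detector leaning only on them can be fooled by text that simply varies its wording and rhythm.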

But here’s the catch: AI writers can be taught to mix things up and sound more human-like. They can learn from tons of real human writing, picking up on all sorts of writing styles, fancy words, and clever ways of saying things.

This makes it super hard for detectors to rely just on patterns and stats to spot the AI-written stuff.

Another limitation is the lack of understanding of context and intent. These tools often struggle to interpret the meaning and purpose behind a piece of writing. They may fail to recognize the subtle nuances of human expression, such as sarcasm, irony, and humor, which can be difficult for AI models to replicate accurately.

For example, a detection tool might flag a piece of writing as AI-generated simply because it uses a particular phrase or sentence structure that is common in AI-generated text, even if the context of the writing suggests it was written by a human.

[Editor’s note: to test this theory, during the writing of this article, I wrote a 200-word passage completely from scratch about ramen in Tokyo (my favorite test subject, and one I happen to know a TON about: I’ve lived in Tokyo for nearly 30 years).

The detector rated my own writing as “confident it’s AI.” Clearly something is wrong. My guess is that these tools err on the side of “yes, it’s AI,” since they’re very likely being used purely to check that text passes detection before it’s used.]

It’s worth noting that AI writing detection tools aren’t foolproof.

Clever AI writing systems can be designed to dodge detection, using sneaky tricks like obscuring text, rewording phrases, and sprinkling in random bits to throw off the scent. These crafty methods can leave detection tools scratching their digital heads, potentially mistaking AI-generated text for human handiwork.

Smart folks are cooking up new ways to spot AI-written content more accurately. They’re tinkering with fancy deep learning models that can dive deeper into text, looking at how ideas flow, word choices, and the overall story structure.

They’re also exploring context clues, examining the bigger picture to spot things that just don’t quite fit with how humans typically write.

The Future of AI Detection

The battle between AI writing tools and detection methods is a constant game of cat and mouse. As AI models become more sophisticated, capable of producing increasingly human-like text, detection methods need to evolve to stay ahead. The future of AI detection lies in advancements in natural language processing (NLP) and machine learning (ML), which will lead to more sophisticated and accurate detection techniques.

One promising avenue is the development of contextual understanding in NLP models.

Current detection methods often struggle to grasp the nuances of human expression, such as sarcasm, irony, and humor. Future models could be trained on a broader range of text, including literary works, social media conversations, and even personal journals, to better understand the complexities of human language and identify inconsistencies in AI-generated text.

These models could analyze the flow of ideas, the use of vocabulary, and the overall narrative structure to assess the authenticity of a piece of writing.

Another area of focus is the development of adversarial machine learning techniques.

These techniques aim to train detection models to identify and counter the tactics used by AI writing tools to evade detection. This could involve building models that can recognize and analyze the subtle patterns and characteristics of AI-generated text, even when it has been obfuscated or altered to resemble human-written content.

The future of AI writing detection also hinges on the development of collaborative efforts between researchers, developers, and users. But is this the right approach?

Of course we need the ability to detect AI content when it’s a vital situation such as academic integrity or legal matters.

But on the flip side, I think much of the industry is more focused on how to avoid content detection to avoid getting caught using AI-generated content.

And that’s unfortunate. Just as a calculator helps get math done faster, AI can help us get content done faster (and often better).

Human+AI is the Way

The rise of AI writing tools has undoubtedly changed the landscape of content creation.

While some may see AI as a threat to human creativity, it’s crucial to recognize its potential as a powerful tool for enhancing content quality and fostering innovation. The key lies in embracing a collaborative approach, where human writers and AI tools work together to create engaging, authentic, and insightful content.

Imagine a world where AI tools are used to generate initial drafts, brainstorm ideas, and research information, while human writers bring their unique perspectives, critical thinking, and emotional intelligence to refine, edit, and personalize the content. This synergistic partnership allows us to leverage the strengths of both humans and AI, creating content that is both informative and engaging.

Tools like Chibi AI are emerging as valuable allies in this human-AI collaboration.

Chibi AI, with its familiar document editor plus unique AI tools, can help writers generate creative content, translate languages, summarize complex information, and so much more. By integrating AI tools like Chibi AI into their workflow, writers can streamline their writing process, improve their productivity, and enhance the quality of their content.

The use of AI in writing doesn’t diminish the value of human creativity. Instead, it allows writers to focus on what they do best: crafting compelling narratives, expressing unique perspectives, and connecting with their audience on an emotional level.

AI tools can handle the tedious parts of writing, freeing writers to explore their creativity and deliver content that resonates with readers. As we move forward in this dynamic landscape, it’s essential to remember that AI is a tool, not a replacement for human creativity.

By embracing a collaborative approach and leveraging the power of AI responsibly, we can usher in a new era of content creation where human ingenuity and AI technology work hand in hand to produce exceptional results.

How to spot when a CV has been written by AI

It’s not always easy to tell when a CV or cover letter was written by generative artificial intelligence – at least not for humans.

But perhaps the biggest red flag is the lack of personality or the sense that the words on the page are so abstract and vague they could be used to describe anyone.

- Research article

- Open access

- Published: 13 September 2024

Comparison of generative AI performance on undergraduate and postgraduate written assessments in the biomedical sciences

- Andrew Williams (ORCID: orcid.org/0000-0002-0908-0364)

International Journal of Educational Technology in Higher Education volume 21, Article number: 52 (2024)

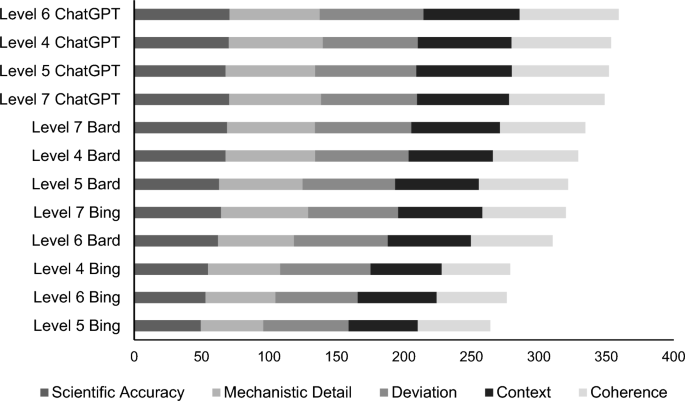

The value of generative AI tools in higher education has received considerable attention. Although there are many proponents of its value as a learning tool, many are concerned with the issues regarding academic integrity and its use by students to compose written assessments. This study evaluates and compares the output of three commonly used generative AI tools, ChatGPT, Bing and Bard. Each AI tool was prompted with an essay question from undergraduate (UG) level 4 (year 1), level 5 (year 2), level 6 (year 3) and postgraduate (PG) level 7 biomedical sciences courses. Anonymised AI generated output was then evaluated by four independent markers, according to specified marking criteria and matched to the Frameworks for Higher Education Qualifications (FHEQ) of UK level descriptors. Percentage scores and ordinal grades were given for each marking criteria across AI generated papers, inter-rater reliability was calculated using Kendall’s coefficient of concordance and generative AI performance ranked. Across all UG and PG levels, ChatGPT performed better than Bing or Bard in areas of scientific accuracy, scientific detail and context. All AI tools performed consistently well at PG level compared to UG level, although only ChatGPT consistently met levels of high attainment at all UG levels. ChatGPT and Bing did not provide adequate references, while Bing falsified references. In conclusion, generative AI tools are useful for providing scientific information consistent with the academic standards required of students in written assignments. These findings have broad implications for the design, implementation and grading of written assessments in higher education.
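For readers unfamiliar with Kendall’s coefficient of concordance (W), the inter-rater statistic named in the abstract, the following Python sketch shows one common way to compute it. It is an illustration only: the abstract does not say how the authors computed W, the marker scores below are hypothetical, and the function names are assumptions. The sketch uses the basic formula W = 12S / (m²(n³ − n)) for m raters and n items, without the correction for tied ranks.

```python
def average_ranks(scores):
    """Rank scores from 1 (lowest); tied scores share their mean rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of items tied with the item at position i.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def kendalls_w(ratings):
    """Kendall's W for m raters (rows) scoring the same n items (columns).

    Uses the basic formula without tie correction.
    """
    m = len(ratings)
    n = len(ratings[0])
    rank_rows = [average_ranks(row) for row in ratings]
    # Sum of ranks each item received across all raters.
    totals = [sum(row[i] for row in rank_rows) for i in range(n)]
    mean_total = m * (n + 1) / 2
    # S: squared deviations of the rank sums from their mean.
    s = sum((t - mean_total) ** 2 for t in totals)
    return 12 * s / (m ** 2 * (n ** 3 - n))


if __name__ == "__main__":
    # Hypothetical percentage scores: four markers, three papers.
    marker_scores = [
        [62, 55, 71],
        [60, 58, 75],
        [65, 50, 70],
        [58, 61, 72],
    ]
    print(round(kendalls_w(marker_scores), 3))
```

W ranges from 0 (no agreement among raters) to 1 (all raters rank the items identically).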

Introduction