
8.6 Hypothesis Tests for a Population Mean with Known Population Standard Deviation

Learning objectives.

- Conduct and interpret hypothesis tests for a population mean with known population standard deviation.

Some notes about conducting a hypothesis test:

- The null hypothesis [latex]H_0[/latex] is always an “equal to.” The null hypothesis is the original claim about the population parameter.

- The alternative hypothesis [latex]H_a[/latex] is a “less than,” “greater than,” or “not equal to.” The form of the alternative hypothesis depends on the context of the question.

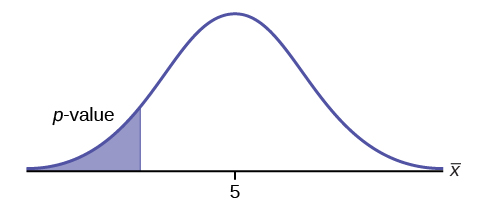

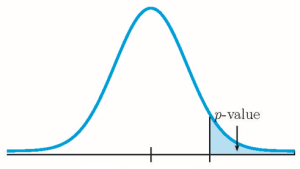

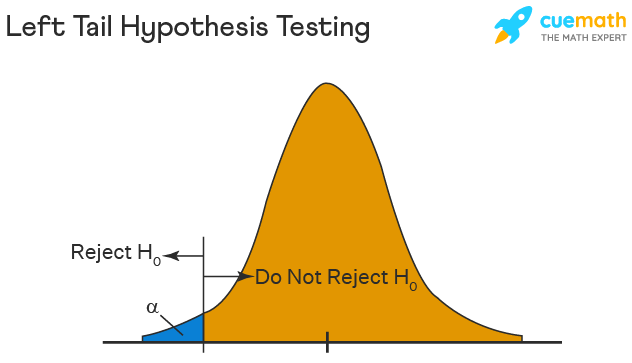

- If the alternative hypothesis is a “less than”, then the test is left-tail. The p -value is the area in the left-tail of the distribution.

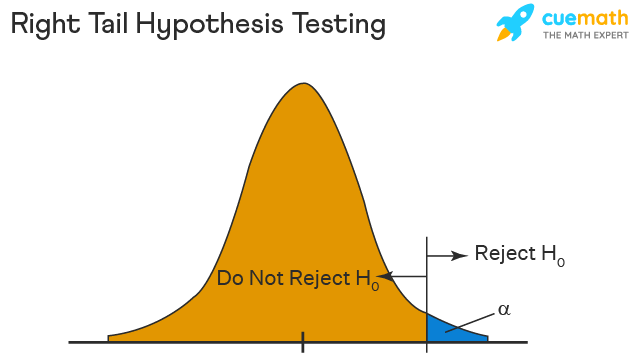

- If the alternative hypothesis is a “greater than”, then the test is right-tail. The p -value is the area in the right-tail of the distribution.

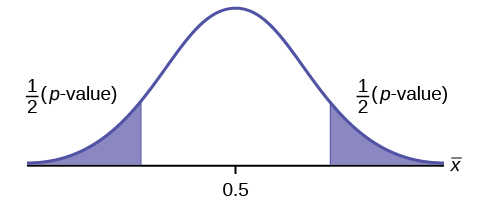

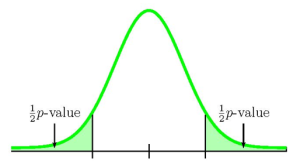

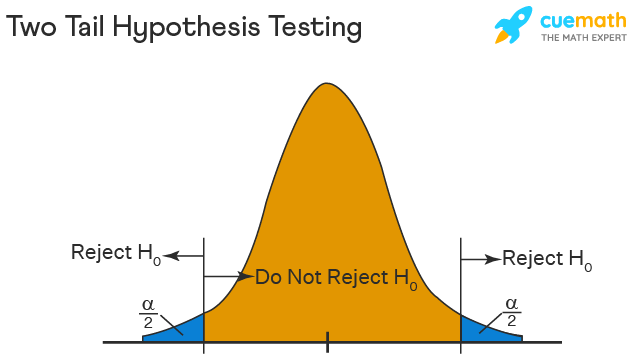

- If the alternative hypothesis is a “not equal to”, then the test is two-tail. The p -value is the sum of the area in the two-tails of the distribution. Each tail represents exactly half of the p -value.

- Think about the meaning of the p -value. The smaller the p -value, the more confidence a data analyst (or anyone else) should have in a decision to reject the null hypothesis: at a significance level of 0.05, a p -value of 0.001 is stronger evidence against the null hypothesis than a p -value of 0.04. Similarly, a large p -value such as 0.4 should give more confidence in a decision not to reject the null hypothesis than a p -value of 0.056, even though both exceed the significance level of 0.05. Weighing the p -value this way requires the data analyst to use judgment rather than mindlessly apply rules.

- The significance level must be identified before collecting the sample data and conducting the test. Generally, the significance level will be included in the question. If no significance level is given, a common standard is to use a significance level of 5%.

- An alternative approach for hypothesis testing is to use what is called the critical value approach . In this book, we will only use the p -value approach. Some of the videos below may mention the critical value approach, but this approach will not be used in this book.

Suppose the hypotheses for a hypothesis test are:

[latex]\begin{eqnarray*} H_0: & & \mu=5 \\ H_a: & & \mu \lt 5 \end{eqnarray*}[/latex]

Because the alternative hypothesis is a [latex]\lt[/latex], this is a left-tailed test. The p -value is the area in the left-tail of the distribution.

[latex]\begin{eqnarray*} H_0: & & \mu=0.5 \\ H_a: & & \mu \neq 0.5 \end{eqnarray*}[/latex]

Because the alternative hypothesis is a [latex]\neq[/latex], this is a two-tailed test. The p -value is the sum of the areas in the two tails of the distribution. Each tail contains exactly half of the p -value.

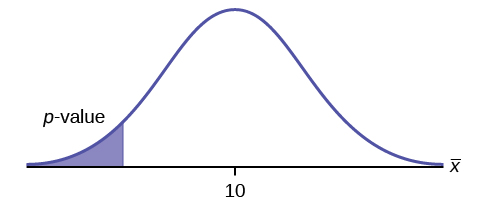

[latex]\begin{eqnarray*} H_0: & & \mu=10 \\ H_a: & & \mu \lt 10 \end{eqnarray*}[/latex]

Steps to Conduct a Hypothesis Test for a Population Mean with Known Population Standard Deviation

- Write down the null and alternative hypotheses in terms of the population mean [latex]\mu[/latex]. Include appropriate units with the values of the mean.

- Use the form of the alternative hypothesis to determine if the test is left-tailed, right-tailed, or two-tailed.

- Collect the sample information for the test and identify the significance level [latex]\alpha[/latex].

- When the population standard deviation is known , we use a normal distribution with [latex]\displaystyle{z=\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}}[/latex] to find the p -value. The p -value is the area in the corresponding tail of the normal distribution.

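- Compare the p -value to the significance level [latex]\alpha[/latex] and state the outcome of the test:

- If the p -value is less than [latex]\alpha[/latex], reject the null hypothesis. The results of the sample data are significant. There is sufficient evidence to conclude that the null hypothesis [latex]H_0[/latex] is an incorrect belief and that the alternative hypothesis [latex]H_a[/latex] is most likely correct.

- If the p -value is greater than [latex]\alpha[/latex], do not reject the null hypothesis. The results of the sample data are not significant. There is not sufficient evidence to conclude that the alternative hypothesis [latex]H_a[/latex] may be correct.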

- Write down a concluding sentence specific to the context of the question.
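The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `z_test`, its parameter names, and the sample numbers are hypothetical and do not come from the text, and the standard library's `statistics.NormalDist` stands in for a spreadsheet.

```python
from math import sqrt
from statistics import NormalDist


def z_test(x_bar, mu_0, sigma, n, tail, alpha=0.05):
    """One-sample z-test for a mean with known population sigma.

    tail is "left", "right", or "two", matching the form of H_a.
    Returns (z, p_value, reject_null).
    """
    standard_error = sigma / sqrt(n)
    z = (x_bar - mu_0) / standard_error
    left_area = NormalDist().cdf(z)       # area to the left of z
    right_area = 1 - left_area            # area to the right of z
    if tail == "left":
        p_value = left_area
    elif tail == "right":
        p_value = right_area
    elif tail == "two":
        p_value = 2 * min(left_area, right_area)
    else:
        raise ValueError("tail must be 'left', 'right', or 'two'")
    return z, p_value, p_value < alpha


# Hypothetical numbers, not taken from the text.
z, p, reject = z_test(x_bar=98, mu_0=100, sigma=10, n=25, tail="left")
print(round(z, 2), round(p, 4), reject)
```

The function returns the z-score alongside the p -value so that the decision can be traced back to the tail area it came from.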

USING EXCEL TO CALCULATE THE P -VALUE FOR A HYPOTHESIS TEST ON A POPULATION MEAN WITH KNOWN POPULATION STANDARD DEVIATION

The p -value for a hypothesis test on a population mean is the area in the tail(s) of the distribution of the sample mean. When the population standard deviation is known, use the normal distribution to find the p -value.

The p -value is the area in the tail(s) of a normal distribution, so the norm.dist(x,[latex]\mu[/latex],[latex]\sigma[/latex],logic operator) function can be used to calculate the p -value.

- For x , enter the value for [latex]\overline{x}[/latex].

- For [latex]\mu[/latex] , enter the mean of the sample means [latex]\mu[/latex]. Note: Because the test is run assuming the null hypothesis is true, the value for [latex]\mu[/latex] is the claim from the null hypothesis.

- For [latex]\sigma[/latex] , enter the standard error of the mean [latex]\displaystyle{\frac{\sigma}{\sqrt{n}}}[/latex].

- For the logic operator , enter true . Note: Because we are calculating the area under the curve, we always enter true for the logic operator.

Use the appropriate technique with the norm.dist function to find the area in the left-tail or the area in the right-tail.
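For readers working outside Excel, the same four fields can be mirrored in Python. The helper `norm_dist` below is a hypothetical stand-in written for this illustration (it is not a library function), and the inputs are made-up values rather than data from the text.

```python
from math import sqrt
from statistics import NormalDist


def norm_dist(x, mean, standard_dev, cumulative=True):
    """Rough stand-in for Excel's norm.dist, with the same field order."""
    dist = NormalDist(mean, standard_dev)
    return dist.cdf(x) if cumulative else dist.pdf(x)


# Hypothetical inputs chosen for illustration only.
x_bar, mu_0, sigma, n = 52.0, 50.0, 6.0, 36
standard_error = sigma / sqrt(n)           # field 3: sigma / sqrt(n)

left_tail = norm_dist(x_bar, mu_0, standard_error, True)
right_tail = 1 - left_tail                 # the "1-norm.dist" technique
print(round(left_tail, 4), round(right_tail, 4))
```

Because the cumulative form always returns the area to the left of x, the right-tail area is obtained by subtracting that output from 1.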

Jeffrey, as an eight-year old, established a mean time of 16.43 seconds with a standard deviation of 0.8 seconds for swimming the 25-meter freestyle. His dad, Frank, thought that Jeffrey could swim the 25-meter freestyle faster using goggles. Frank bought Jeffrey a new pair of goggles and timed Jeffrey swimming the 25-meter freestyle 15 different times. In the sample of 15 swims, Jeffrey’s mean time was 16 seconds. Frank thought that the goggles helped Jeffrey swim faster than 16.43 seconds. At the 5% significance level, did Jeffrey swim faster wearing the goggles? Assume that the swim times for the 25-meter freestyle are normally distributed.

Hypotheses:

[latex]\begin{eqnarray*} H_0: & & \mu=16.43 \mbox{ seconds} \\ H_a: & & \mu \lt 16.43 \mbox{ seconds} \end{eqnarray*}[/latex]

From the question, we have [latex]n=15[/latex], [latex]\overline{x}=16[/latex], [latex]\sigma=0.8[/latex] and [latex]\alpha=0.05[/latex].

This is a test on a population mean where the population standard deviation is known ([latex]\sigma=0.8[/latex]). So we use a normal distribution to calculate the p -value. Because the alternative hypothesis is a [latex]\lt[/latex], the p -value is the area in the left-tail of the distribution.

| Function | norm.dist | Answer |
|---|---|---|
| Field 1 | 16 | 0.0187 |
| Field 2 | 16.43 | |
| Field 3 | 0.8/sqrt(15) | |
| Field 4 | true | |

So the p -value[latex]=0.0187[/latex].

Conclusion:

Because p -value[latex]=0.0187 \lt 0.05=\alpha[/latex], we reject the null hypothesis in favour of the alternative hypothesis. At the 5% significance level there is enough evidence to suggest that Jeffrey’s mean swim time with the goggles is less than 16.43 seconds.

- The null hypothesis [latex]\mu=16.43[/latex] is the claim that Jeffrey’s mean swim time with the goggles is 16.43 seconds (the same as it is without the goggles).

- The alternative hypothesis [latex]\mu \lt 16.43[/latex] is the claim that Jeffrey’s swim time with the goggles is less than 16.43 seconds.

- The function is norm.dist because we are finding the area in the left tail of a normal distribution.

- Field 1 is the value of [latex]\overline{x}[/latex]

- Field 2 is the value of [latex]\mu[/latex] from the null hypothesis. Remember, we run the test assuming the null hypothesis is true, so that means we assume [latex]\mu=16.43[/latex].

- Field 3 is the standard deviation for the sample means [latex]\displaystyle{\frac{\sigma}{\sqrt{n}}}[/latex]. Note that we are not using the standard deviation from the population ([latex]\sigma=0.8[/latex]). This is because the p -value is the area under the curve of the distribution of the sample means, not the distribution of the population.

- The p -value of 0.0187 tells us that under the assumption that Jeffrey’s mean swim time with goggles is 16.43 seconds (the null hypothesis), there is only a 1.87% chance that the mean time for the 15 sample swims is 16 seconds or less. This is a small probability, and so is unlikely to happen assuming the null hypothesis is true. This suggests that the assumption that the null hypothesis is true is most likely incorrect, and so the conclusion of the test is to reject the null hypothesis in favour of the alternative hypothesis.

- The Type I error for this problem is to conclude that Jeffrey swims the 25-meter freestyle, on average, in less than 16.43 seconds (the alternative hypothesis) when, in fact, he actually swims the 25-meter freestyle, on average, in 16.43 seconds (the null hypothesis). That is, reject the null hypothesis when the null hypothesis is actually true.

- The Type II error for this problem is to conclude that Jeffrey swims the 25-meter freestyle, on average, in 16.43 seconds (the null hypothesis) when, in fact, he actually swims the 25-meter freestyle, on average, in less than 16.43 seconds (the alternative hypothesis). That is, do not reject the null hypothesis when the null hypothesis is actually false.
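As a cross-check on the spreadsheet output, the same tail area can be reached by standardizing the sample mean with the z formula from the steps listed earlier. The arithmetic uses only the values from this example; the rounding is ours.

[latex]\displaystyle{z=\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}=\frac{16-16.43}{\frac{0.8}{\sqrt{15}}}\approx\frac{-0.43}{0.2066}\approx -2.08}[/latex]

The area to the left of [latex]z=-2.08[/latex] under the standard normal curve is about 0.019, which agrees with the p -value of 0.0187 found with norm.dist.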

The mean throwing distance of a football for Marco, a high school freshman quarterback, is 40 yards with a standard deviation of 2 yards. The team coach tells Marco to adjust his grip to get more distance. The coach records the distances for 20 throws with the new grip. For the 20 throws, Marco’s mean distance was 41.5 yards. The coach thought the different grip helped Marco throw farther than 40 yards. At the 5% significance level, is Marco’s mean throwing distance higher with the new grip? Assume the throw distances for footballs are normally distributed.

[latex]\begin{eqnarray*} H_0: & & \mu=40 \mbox{ yards} \\ H_a: & & \mu \gt 40 \mbox{ yards} \end{eqnarray*}[/latex]

From the question, we have [latex]n=20[/latex], [latex]\overline{x}=41.5[/latex], [latex]\sigma=2[/latex] and [latex]\alpha=0.05[/latex].

This is a test on a population mean where the population standard deviation is known ([latex]\sigma=2[/latex]). So we use a normal distribution to calculate the p -value. Because the alternative hypothesis is a [latex]\gt[/latex], the p -value is the area in the right-tail of the distribution.

| Function | 1-norm.dist | Answer |
|---|---|---|
| Field 1 | 41.5 | 0.0004 |
| Field 2 | 40 | |
| Field 3 | 2/sqrt(20) | |
| Field 4 | true | |

So the p -value[latex]=0.0004[/latex].

Because p -value[latex]=0.0004 \lt 0.05=\alpha[/latex], we reject the null hypothesis in favour of the alternative hypothesis. At the 5% significance level there is enough evidence to suggest that Marco’s mean throwing distance is greater than 40 yards with the new grip.

- The null hypothesis [latex]\mu=40[/latex] is the claim that Marco’s mean throwing distance with the new grip is 40 yards (the same as it is without the new grip).

- The alternative hypothesis [latex]\mu \gt 40[/latex] is the claim that Marco’s mean throwing distance with the new grip is greater than 40 yards.

- Field 2 is the value of [latex]\mu[/latex] from the null hypothesis.

- Field 3 is the standard deviation for the sample means [latex]\displaystyle{\frac{\sigma}{\sqrt{n}}}[/latex].

- The p -value of 0.0004 tells us that under the assumption that Marco’s mean throwing distance with the new grip is 40 yards, there is only a 0.04% chance that the mean throwing distance for the 20 sample throws is 41.5 yards or more. This is a small probability, and so is unlikely to happen assuming the null hypothesis is true. This suggests that the assumption that the null hypothesis is true is most likely incorrect, and so the conclusion of the test is to reject the null hypothesis in favour of the alternative hypothesis.
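For a right-tailed test the standardized value is positive, and the p -value is the area to its right. Using only the numbers from this example (rounding is ours):

[latex]\displaystyle{z=\frac{\overline{x}-\mu}{\frac{\sigma}{\sqrt{n}}}=\frac{41.5-40}{\frac{2}{\sqrt{20}}}\approx\frac{1.5}{0.4472}\approx 3.35}[/latex]

The area to the right of [latex]z=3.35[/latex] under the standard normal curve is about 0.0004, matching the output of 1-norm.dist.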

A local college states in its marketing materials that the average age of its first-year students is 18.3 years with a standard deviation of 3.4 years. But this information is based on old data and does not take into account that more older adults are returning to college. A researcher at the college believes that the average age of its first-year students has changed. The researcher takes a sample of 50 first-year students and finds the average age is 19.5 years. At the 1% significance level, has the average age of the college’s first-year students changed?

[latex]\begin{eqnarray*} H_0: & & \mu=18.3 \mbox{ years} \\ H_a: & & \mu \neq 18.3 \mbox{ years} \end{eqnarray*}[/latex]

From the question, we have [latex]n=50[/latex], [latex]\overline{x}=19.5[/latex], [latex]\sigma=3.4[/latex] and [latex]\alpha=0.01[/latex].

This is a test on a population mean where the population standard deviation is known ([latex]\sigma=3.4[/latex]). In this case, the sample size is greater than 30. So we use a normal distribution to calculate the p -value. Because the alternative hypothesis is a [latex]\neq[/latex], the p -value is the sum of area in the tails of the distribution.

Because there is only one sample, we only have information relating to one of the two tails, either the left tail or the right tail. We need to know which tail the sample relates to because that determines how we calculate the area of that tail using the normal distribution. In this case, the sample mean [latex]\overline{x}=19.5[/latex] is greater than the value of the population mean in the null hypothesis [latex]\mu=18.3[/latex] ([latex]\overline{x}=19.5>18.3=\mu[/latex]), so the sample information relates to the right tail of the normal distribution. This means that we calculate the area in the right tail using 1-norm.dist . However, this is a two-tailed test, so the area in the right tail is only one half of the p -value. The area in the left tail equals the area in the right tail, and the p -value is the sum of these two areas.

| Function | 1-norm.dist | Answer |
|---|---|---|
| Field 1 | 19.5 | 0.0063 |
| Field 2 | 18.3 | |
| Field 3 | 3.4/sqrt(50) | |
| Field 4 | true | |

So the area in the right tail is 0.0063 and [latex]\frac{1}{2}[/latex]( p -value)[latex]=0.0063[/latex]. This is also the area in the left tail, so

p -value[latex]=0.0063+0.0063=0.0126[/latex]

Because p -value[latex]=0.0126 \gt 0.01=\alpha[/latex], we do not reject the null hypothesis. At the 1% significance level there is not enough evidence to suggest that the average age of the college’s first-year students has changed.

- The null hypothesis [latex]\mu=18.3[/latex] is the claim that the average age of the first-year students is still 18.3 years.

- The alternative hypothesis [latex]\mu \neq 18.3[/latex] is the claim that the average age of the first-year students has changed from 18.3 years.

- If the sample mean is less than the value of [latex]\mu[/latex] in the null hypothesis, the sample relates to the left tail, and we use norm.dist([latex]\overline{x}[/latex],[latex]\mu[/latex],[latex]\sigma/\mbox{sqrt}(n)[/latex],true) to find the area in the left tail. The area in the right tail equals the area in the left tail, so we can find the p -value by adding the output from this function to itself.

- If the sample mean is greater than the value of [latex]\mu[/latex] in the null hypothesis (as in this example), the sample relates to the right tail, and we use 1-norm.dist([latex]\overline{x}[/latex],[latex]\mu[/latex],[latex]\sigma/\mbox{sqrt}(n)[/latex],true) to find the area in the right tail. The area in the left tail equals the area in the right tail, so we can find the p -value by adding the output from this function to itself.

- The p -value of 0.0126 is larger than the 1% significance level, so a sample mean this far from 18.3 years is not unusual enough, at this level, to rule out the null hypothesis. The conclusion of the test is therefore to not reject the null hypothesis. In other words, the sample does not provide enough evidence to dispute the claim that the average age of first-year students is 18.3 years.
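The two-tailed calculation for this example can be sketched in Python as a check on the spreadsheet steps. The variable names are our own, and `statistics.NormalDist` is used in place of norm.dist; the numbers are those given in the example.

```python
from math import sqrt
from statistics import NormalDist

n, x_bar, sigma, alpha = 50, 19.5, 3.4, 0.01
mu_0 = 18.3                                   # value claimed in H_0

sampling_dist = NormalDist(mu_0, sigma / sqrt(n))
left_area = sampling_dist.cdf(x_bar)
# The sample mean falls in whichever tail has the smaller area.
tail_area = min(left_area, 1 - left_area)
p_value = 2 * tail_area                       # sum of the two equal tails

print(round(tail_area, 4), round(p_value, 4), p_value < alpha)
```

Taking the smaller of the two areas picks the correct tail automatically, so the same lines work whether the sample mean lies above or below the hypothesized mean.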

Watch this video: Hypothesis Testing: z -test, right tail by ExcelIsFun [33:47]

Watch this video: Hypothesis Testing: z -test, left tail by ExcelIsFun [10:57]

Watch this video: Hypothesis Testing: z -test, two tail by ExcelIsFun [9:56]

Concept Review

The hypothesis test for a population mean is a well-established process:

- Collect the sample information for the test and identify the significance level.

- When the population standard deviation is known, find the p -value (the area in the corresponding tail) for the test using the normal distribution.

- Compare the p -value to the significance level and state the outcome of the test.

Attribution

“9.6 Hypothesis Testing of a Single Mean and Single Proportion” in Introductory Statistics by OpenStax is licensed under a Creative Commons Attribution 4.0 International License.

Introduction to Statistics Copyright © 2022 by Valerie Watts is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Hypothesis Test for a Mean

This lesson explains how to conduct a hypothesis test of a mean, when the following conditions are met:

- The sampling method is simple random sampling .

- The sampling distribution is normal or nearly normal.

Generally, the sampling distribution will be approximately normally distributed if any of the following conditions apply.

- The population distribution is normal.

- The population distribution is symmetric , unimodal , without outliers , and the sample size is 15 or less.

- The population distribution is moderately skewed , unimodal, without outliers, and the sample size is between 16 and 40.

- The sample size is greater than 40, without outliers.

This approach consists of four steps: (1) state the hypotheses, (2) formulate an analysis plan, (3) analyze sample data, and (4) interpret results.

State the Hypotheses

Every hypothesis test requires the analyst to state a null hypothesis and an alternative hypothesis . The hypotheses are stated in such a way that they are mutually exclusive. That is, if one is true, the other must be false; and vice versa.

The table below shows three sets of hypotheses. Each makes a statement about how the population mean μ is related to a specified value M . (In the table, the symbol ≠ means " not equal to ".)

| Set | Null hypothesis | Alternative hypothesis | Number of tails |
|---|---|---|---|
| 1 | μ = M | μ ≠ M | 2 |
| 2 | μ ≥ M | μ < M | 1 |
| 3 | μ ≤ M | μ > M | 1 |

The first set of hypotheses (Set 1) is an example of a two-tailed test , since an extreme value on either side of the sampling distribution would cause a researcher to reject the null hypothesis. The other two sets of hypotheses (Sets 2 and 3) are one-tailed tests , since an extreme value on only one side of the sampling distribution would cause a researcher to reject the null hypothesis.

Formulate an Analysis Plan

The analysis plan describes how to use sample data to accept or reject the null hypothesis. It should specify the following elements.

- Significance level. Often, researchers choose significance levels equal to 0.01, 0.05, or 0.10; but any value between 0 and 1 can be used.

- Test method. Use the one-sample t-test to determine whether the hypothesized mean differs significantly from the observed sample mean.

Analyze Sample Data

Using sample data, conduct a one-sample t-test. This involves finding the standard error, degrees of freedom, test statistic, and the P-value associated with the test statistic.

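- Standard error. Compute the standard error (SE) of the sampling distribution:

SE = s * sqrt{ ( 1/n ) * [ ( N - n ) / ( N - 1 ) ] }

where s is the standard deviation of the sample, N is the population size, and n is the sample size. When the population is much larger than the sample, the standard error can be approximated by:

SE = s / sqrt( n )

- Degrees of freedom. The degrees of freedom (DF) is equal to the sample size (n) minus one. Thus, DF = n - 1.

- Test statistic. The test statistic is a t statistic (t) defined by:

t = ( x - μ) / SE

where x is the sample mean, μ is the hypothesized population mean in the null hypothesis, and SE is the standard error.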

- P-value. The P-value is the probability of observing a sample statistic as extreme as the test statistic. Since the test statistic is a t statistic, use the t Distribution Calculator to assess the probability associated with the t statistic, given the degrees of freedom computed above. (See sample problems at the end of this lesson for examples of how this is done.)
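The standard error, degrees of freedom, and test statistic described above can be sketched as small Python helpers. The function names are hypothetical, chosen for this illustration; the numbers are those of Problem 1 later in this lesson, which makes it easy to see how little the finite-population form changes the standard error when the sample is a small share of the population.

```python
from math import sqrt


def standard_error(s, n, N=None):
    """SE of the sample mean; uses the finite-population form when N is given."""
    if N is None:
        return s / sqrt(n)
    return s * sqrt((1 / n) * ((N - n) / (N - 1)))


def t_statistic(x_bar, mu, se):
    """t = (x - mu) / SE, with mu taken from the null hypothesis."""
    return (x_bar - mu) / se


# Numbers from Problem 1 in this lesson.
s, n, N, x_bar, mu = 20, 50, 2000, 295, 300
se_simple = standard_error(s, n)
se_fpc = standard_error(s, n, N)
df = n - 1
t = t_statistic(x_bar, mu, se_simple)
print(round(se_simple, 2), round(se_fpc, 2), df, round(t, 2))
```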


Interpret Results

If the sample findings are unlikely, given the null hypothesis, the researcher rejects the null hypothesis. Typically, this involves comparing the P-value to the significance level , and rejecting the null hypothesis when the P-value is less than the significance level.

Test Your Understanding

In this section, two sample problems illustrate how to conduct a hypothesis test of a mean score. The first problem involves a two-tailed test; the second problem, a one-tailed test.

Problem 1: Two-Tailed Test

An inventor has developed a new, energy-efficient lawn mower engine. He claims that the engine will run continuously for 5 hours (300 minutes) on a single gallon of regular gasoline. From his stock of 2000 engines, the inventor selects a simple random sample of 50 engines for testing. The engines run for an average of 295 minutes, with a standard deviation of 20 minutes. Test the null hypothesis that the mean run time is 300 minutes against the alternative hypothesis that the mean run time is not 300 minutes. Use a 0.05 level of significance. (Assume that run times for the population of engines are normally distributed.)

Solution: The solution to this problem takes four steps: (1) state the hypotheses, (2) formulate an analysis plan, (3) analyze sample data, and (4) interpret results. We work through those steps below:

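- State the hypotheses . The first step is to state the null hypothesis and an alternative hypothesis.

Null hypothesis: μ = 300

Alternative hypothesis: μ ≠ 300

- Formulate an analysis plan . For this analysis, the significance level is 0.05. The test method is a one-sample t-test .

- Analyze sample data . Using sample data, we compute the standard error (SE), degrees of freedom (DF), and the t statistic (t).

SE = s / sqrt(n) = 20 / sqrt(50) = 20/7.07 = 2.83

DF = n - 1 = 50 - 1 = 49

t = ( x - μ) / SE = (295 - 300)/2.83 = -1.77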

where s is the standard deviation of the sample, x is the sample mean, μ is the hypothesized population mean, and n is the sample size.

Since we have a two-tailed test , the P-value is the probability that the t statistic having 49 degrees of freedom is less than -1.77 or greater than 1.77. We use the t Distribution Calculator to find P(t < -1.77) is about 0.04.

- If you enter 1.77 in the t Distribution Calculator, you will find that P(t < 1.77) is about 0.96. Therefore, P(t > 1.77) is 1 minus 0.96 or 0.04. Thus, the P-value = 0.04 + 0.04 = 0.08.

- Interpret results . Since the P-value (0.08) is greater than the significance level (0.05), we cannot reject the null hypothesis.

Note: If you use this approach on an exam, you may also want to mention why this approach is appropriate. Specifically, the approach is appropriate because the sampling method was simple random sampling, the population was normally distributed, and the sample size was small relative to the population size (less than 5%).

Problem 2: One-Tailed Test

Bon Air Elementary School has 1000 students. The principal of the school thinks that the average IQ of students at Bon Air is at least 110. To prove her point, she administers an IQ test to 20 randomly selected students. Among the sampled students, the average IQ is 108 with a standard deviation of 10. Based on these results, should the principal accept or reject her original hypothesis? Assume a significance level of 0.01. (Assume that test scores in the population of students are normally distributed.)

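- State the hypotheses . The first step is to state the null hypothesis and an alternative hypothesis.

Null hypothesis: μ >= 110

Alternative hypothesis: μ < 110

- Formulate an analysis plan . For this analysis, the significance level is 0.01. The test method is a one-sample t-test .

- Analyze sample data . Using sample data, we compute the standard error (SE), degrees of freedom (DF), and the t statistic (t).

SE = s / sqrt(n) = 10 / sqrt(20) = 10/4.472 = 2.236

DF = n - 1 = 20 - 1 = 19

t = ( x - μ) / SE = (108 - 110)/2.236 = -0.894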

Here is the logic of the analysis: Given the alternative hypothesis (μ < 110), we want to know whether the observed sample mean is small enough to cause us to reject the null hypothesis.

The observed sample mean produced a t statistic of -0.894. We use the t Distribution Calculator to find P(t < -0.894) is about 0.19.

- This means we would expect to find a sample mean of 108 or smaller in 19 percent of our samples, if the true population IQ were 110. Thus the P-value in this analysis is 0.19.

- Interpret results . Since the P-value (0.19) is greater than the significance level (0.01), we cannot reject the null hypothesis.
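Both problems rely on an online calculator for the tail probabilities. As a rough cross-check, the sketch below integrates the t density numerically using only the Python standard library. The helpers `t_pdf` and `t_cdf` are hypothetical names written for this illustration; a statistics package would normally be used instead, and the Simpson's-rule integration is our choice, not the lesson's method.

```python
from math import gamma, pi, sqrt


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def t_cdf(t, df, steps=2000):
    """P(T < t), integrating the density from 0 to |t| with Simpson's rule."""
    upper = abs(t)
    h = upper / steps                      # steps must be even for Simpson's rule
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3                   # area between 0 and |t|
    return 0.5 + area if t >= 0 else 0.5 - area


# Problem 1: two-tailed, s = 20, n = 50, x = 295, mu = 300
t1 = (295 - 300) / (20 / sqrt(50))
p1 = 2 * t_cdf(-abs(t1), df=49)

# Problem 2: one-tailed (left), s = 10, n = 20, x = 108, mu = 110
t2 = (108 - 110) / (10 / sqrt(20))
p2 = t_cdf(t2, df=19)

print(round(p1, 2), round(p2, 2))
```

The two results should land close to the P-values of 0.08 and 0.19 reported in the problems; small differences come from the rounding of intermediate values in the worked solutions.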

Module: Inference for Means

Hypothesis Test for a Population Mean (5 of 5)

Learning Objectives

- Interpret the P-value as a conditional probability.

We finish our discussion of the hypothesis test for a population mean with a review of the meaning of the P-value, along with a review of type I and type II errors.

Review of the Meaning of the P-value

At this point, we assume you know how to use a P-value to make a decision in a hypothesis test. The logic is always the same. If we pick a level of significance (α), then we compare the P-value to α.

- If the P-value ≤ α, reject the null hypothesis. The data supports the alternative hypothesis.

- If the P-value > α, do not reject the null hypothesis. The data is not strong enough to support the alternative hypothesis.

In fact, we find that we treat these as “rules” and apply them without thinking about what the P-value means. So let’s pause here and review the meaning of the P-value, since it is the connection between probability and decision-making in inference.

Birth Weights in a Town

Let’s return to the familiar context of birth weights for babies in a town. Suppose that babies in the town had a mean birth weight of 3,500 grams in 2010. This year, a random sample of 50 babies has a mean weight of about 3,400 grams with a standard deviation of about 500 grams. Here is the distribution of birth weights in the sample.

Obviously, this sample weighs less on average than the population of babies in the town in 2010. A decrease in the town’s mean birth weight could indicate a decline in overall health of the town. But does this sample give strong evidence that the town’s mean birth weight is less than 3,500 grams this year?

We now know how to answer this question with a hypothesis test. Let’s use a significance level of 5%.

Let μ = mean birth weight in the town this year. The null hypothesis says there is “no change from 2010.”

- H 0 : μ = 3,500

- H a : μ < 3,500

Since the sample is large, we can conduct the T-test (without worrying about the shape of the distribution of birth weights for individual babies.)

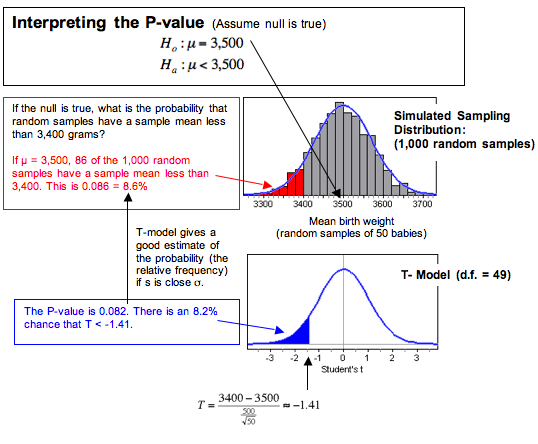

[latex]T=\frac{3{,}400-3{,}500}{\frac{500}{\sqrt{50}}}\approx -1.41[/latex]

Statistical software tells us the P-value is 0.082 = 8.2%. Since the P-value is greater than 0.05, we fail to reject the null hypothesis.

Our conclusion: This sample does not suggest that the mean birth weight this year is less than 3,500 grams ( P -value = 0.082). The sample from this year has a mean of 3,400 grams, which is 100 grams lower than the mean in 2010. But this difference is not statistically significant. It can be explained by the chance fluctuation we expect to see in random sampling.

What Does the P-Value of 0.082 Tell Us?

A simulation can help us understand the P-value. In a simulation, we assume that the population mean is 3,500 grams. This is the null hypothesis. We assume the null hypothesis is true and select 1,000 random samples from a population with a mean of 3,500 grams. The mean of the sampling distribution is at 3,500 (as predicted by the null hypothesis.) We see this in the simulated sampling distribution.

In the simulation, we can see that about 8.6% of the samples have a mean less than 3,400. Since probability is the relative frequency of an event in the long run, we say there is an 8.6% chance that a random sample of 50 babies has a mean less than 3,400 if the population mean is 3,500. We can see that the corresponding area to the left of T = −1.41 in the T-model (with df = 49) also gives us a good estimate of the probability. This area is the P-value, about 8.2%.

If we generalize this statement, we say the P-value is the probability that random samples have results more extreme than the data if the null hypothesis is true. (By more extreme, we mean farther from the value of the parameter, in the direction of the alternative hypothesis.) We can also describe the P-value in terms of T-scores. The P-value is the probability that the test statistic from a random sample has a value more extreme than that associated with the data if the null hypothesis is true.
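A simulation of this kind can be sketched in Python. The sketch makes assumptions the text does not state: individual birth weights are drawn from a normal population, and the population standard deviation is taken to be 500 grams (the text gives 500 only as the sample standard deviation). The seed and variable names are arbitrary, so the simulated share will not reproduce the 8.6% quoted above exactly.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

random.seed(1)                       # fixed seed so the run is repeatable

MU_0, SD, N_BABIES, N_SAMPLES = 3500, 500, 50, 1000

# Draw 1,000 samples of 50 babies each, assuming the null hypothesis is true.
sample_means = [
    fmean(random.gauss(MU_0, SD) for _ in range(N_BABIES))
    for _ in range(N_SAMPLES)
]
share_below = sum(m < 3400 for m in sample_means) / N_SAMPLES

# Area below 3,400 in a normal model for the sample mean, for comparison.
normal_area = NormalDist(MU_0, SD / sqrt(N_BABIES)).cdf(3400)
print(share_below, round(normal_area, 3))
```

The simulated share and the model area should be of similar size, which is the sense in which the P-value estimates how often a sample mean this low would occur if the null hypothesis were true.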

Learn By Doing

What Does a P-Value Mean?

Do women who smoke run the risk of shorter pregnancy and premature birth? The mean pregnancy length is 266 days. We test the following hypotheses.

- H 0 : μ = 266

- H a : μ < 266

Suppose a random sample of 40 women who smoke during their pregnancy have a mean pregnancy length of 260 days with a standard deviation of 21 days. The P-value is 0.04.

What probability does the P-value of 0.04 describe? Label each of the following interpretations as valid or invalid.

Review of Type I and Type II Errors

We know that statistical inference is based on probability, so there is always some chance of making a wrong decision. Recall that there are two types of wrong decisions that can be made in hypothesis testing. When we reject a null hypothesis that is true, we commit a type I error. When we fail to reject a null hypothesis that is false, we commit a type II error.

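The following table summarizes the logic behind type I and type II errors.

| Decision | H 0 is true | H 0 is false |
|---|---|---|
| Reject H 0 | Type I error | Correct decision |
| Fail to reject H 0 | Correct decision | Type II error |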

It is possible to have some influence over the likelihoods of committing these errors. But decreasing the chance of a type I error increases the chance of a type II error. We have to decide which error is more serious for a given situation. Sometimes a type I error is more serious. Other times a type II error is more serious. Sometimes neither is serious.

Recall that if the null hypothesis is true, the probability of committing a type I error is α. Why is this? Well, when we choose a level of significance (α), we are choosing a benchmark for rejecting the null hypothesis. If the null hypothesis is true, then the probability that we will reject a true null hypothesis is α. So the smaller α is, the smaller the probability of a type I error.

It is more complicated to calculate the probability of a type II error. The best way to reduce the probability of a type II error is to increase the sample size. But once the sample size is set, larger values of α will decrease the probability of a type II error (while increasing the probability of a type I error).
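To make the trade-off concrete, the sketch below computes the probability of a type II error for a left-tailed test in a simplified setting. It is an illustration under assumptions the text does not make: the population standard deviation is treated as known (so a normal model is used rather than the t-model), the value 21 days is borrowed from the pregnancy example, and the true mean of 260 days is purely hypothetical. The function name `type_ii_error` is our own.

```python
from math import sqrt
from statistics import NormalDist


def type_ii_error(mu_0, mu_true, sigma, n, alpha):
    """Beta for a left-tailed z-test of H0: mu = mu_0 when the mean is really mu_true."""
    se = sigma / sqrt(n)
    cutoff = mu_0 + NormalDist().inv_cdf(alpha) * se   # reject H0 when x_bar < cutoff
    return 1 - NormalDist(mu_true, se).cdf(cutoff)     # chance x_bar lands at or above cutoff


for alpha in (0.01, 0.05):
    for n in (40, 80):
        beta = type_ii_error(mu_0=266, mu_true=260, sigma=21, n=n, alpha=alpha)
        print(f"alpha={alpha}, n={n}: beta={beta:.3f}")
```

Under these assumptions, the printed values fall as the sample size grows and as α increases, which is the pattern described in the paragraph above.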

General Guidelines for Choosing a Level of Significance

- If the consequences of a type I error are more serious, choose a small level of significance (α).

- If the consequences of a type II error are more serious, choose a larger level of significance (α). But remember that the level of significance is the probability of committing a type I error.

- In general, we pick the largest level of significance that we can tolerate as the chance of a type I error.

Let’s return to the investigation of the impact of smoking on pregnancy length.

Recap of the hypothesis test: The mean human pregnancy length is 266 days. We test the following hypotheses.

Let’s Summarize

In “Hypothesis Test for a Population Mean,” we looked at the four steps of a hypothesis test as they relate to a claim about a population mean.

Step 1: Determine the hypotheses.

- The hypotheses are claims about the population mean, µ.

- The null hypothesis is a hypothesis that the mean equals a specific value, [latex]\mu_0[/latex].

- When [latex]H_a[/latex] is [latex]\mu \lt \mu_0[/latex] or [latex]\mu \gt \mu_0[/latex], the test is a one-tailed test.

- When [latex]H_a[/latex] is [latex]\mu \neq \mu_0[/latex], the test is a two-tailed test.

Step 2: Collect the data.

Since the hypothesis test is based on probability, random selection or assignment is essential in data production. Additionally, we need to check whether the t-model is a good fit for the sampling distribution of sample means. To use the t-model, the variable must be normally distributed in the population or the sample size must be more than 30. In practice, it is often impossible to verify that the variable is normally distributed in the population. If this is the case and the sample size is not more than 30, researchers often use the t-model if the sample is not strongly skewed and does not have outliers.

Step 3: Assess the evidence.

- If a t-model is appropriate, determine the t-test statistic for the data’s sample mean.

[latex]t=\frac{\text{sample mean}-\text{population mean}}{\text{estimated standard error}}=\frac{\bar{x}-\mu}{s/\sqrt{n}}[/latex]

- Use the test statistic, together with the alternative hypothesis, to determine the P-value.

- The P-value is the probability of finding a random sample with a mean at least as extreme as our sample mean, assuming that the null hypothesis is true.

- As in all hypothesis tests, if the alternative hypothesis is greater than, the P-value is the area to the right of the test statistic. If the alternative hypothesis is less than, the P-value is the area to the left of the test statistic. If the alternative hypothesis is not equal to, the P-value is equal to double the tail area beyond the test statistic.
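The three P-value rules in Step 3 can be sketched as follows. Python's standard library has no t distribution, so this sketch integrates the t density numerically with Simpson's rule; in practice a statistics package would normally be used. The function names and the example values (x̄ = 52, μ₀ = 50, s = 5, n = 25) are hypothetical.

```python
import math


def t_statistic(xbar, mu0, s, n):
    """t = (sample mean - hypothesized mean) / estimated standard error."""
    return (xbar - mu0) / (s / math.sqrt(n))


def t_pdf(x, df):
    """Density of Student's t distribution (math.gamma overflows for very large df)."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def t_cdf(t, df, steps=2000):
    """P(T <= t): Simpson's rule on [0, |t|] plus symmetry about zero.
    steps must be even."""
    a = abs(t)
    if a == 0:
        return 0.5
    h = a / steps
    total = t_pdf(0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3                      # area between 0 and |t|
    return 0.5 + area if t > 0 else 0.5 - area


def p_value(t_stat, df, alternative):
    """alternative is the form of Ha: 'greater', 'less', or 'two-sided'."""
    if alternative == "greater":
        return 1 - t_cdf(t_stat, df)                  # area to the right
    if alternative == "less":
        return t_cdf(t_stat, df)                      # area to the left
    if alternative == "two-sided":
        return 2 * (1 - t_cdf(abs(t_stat), df))       # double the tail area
    raise ValueError("alternative must be 'greater', 'less', or 'two-sided'")


# Hypothetical sample: xbar = 52, mu0 = 50, s = 5, n = 25
t = t_statistic(52, 50, 5, 25)
for alt in ("greater", "less", "two-sided"):
    print(alt, round(p_value(t, df=24, alternative=alt), 4))
```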

Step 4: Give the conclusion.

The logic of the hypothesis test is always the same. To state a conclusion about H 0 , we compare the P-value to the significance level, α.

- If P ≤ α, we reject H 0 . We conclude there is significant evidence in favor of H a .

- If P > α, we fail to reject H 0 . We conclude the sample does not provide significant evidence in favor of H a .

- We write the conclusion in the context of the research question. Our conclusion is usually a statement about the alternative hypothesis (we accept H a or fail to accept H a ) and should include the P-value.

Other Hypothesis Testing Notes

- Remember that the P-value is the probability of seeing a sample mean at least as extreme as the one from the data if the null hypothesis is true. The probability is about the random sample; it is not a “chance” statement about the null or alternative hypothesis.

- If our test results in rejecting a null hypothesis that is actually true, then it is called a type I error.

- If our test results in failing to reject a null hypothesis that is actually false, then it is called a type II error.

- If rejecting a null hypothesis would be very expensive, controversial, or dangerous, then we really want to avoid a type I error. In this case, we would set a strict significance level (a small value of α, such as 0.01).

- Finally, remember the phrase “garbage in, garbage out.” If the data collection methods are poor, then the results of a hypothesis test are meaningless.

- Concepts in Statistics. Provided by : Open Learning Initiative. Located at : http://oli.cmu.edu . License : CC BY: Attribution


Hypothesis Testing for Means & Proportions

Lisa Sullivan, PhD

Professor of Biostatistics

Boston University School of Public Health

Introduction

This is the first of three modules that will address the second area of statistical inference, which is hypothesis testing, in which a specific statement or hypothesis is generated about a population parameter, and sample statistics are used to assess the likelihood that the hypothesis is true. The hypothesis is based on available information and the investigator's belief about the population parameters. The process of hypothesis testing involves setting up two competing hypotheses, the null hypothesis and the alternate hypothesis. One selects a random sample (or multiple samples when there are more comparison groups), computes summary statistics and then assesses the likelihood that the sample data support the research or alternative hypothesis. Similar to estimation, the process of hypothesis testing is based on probability theory and the Central Limit Theorem.

This module will focus on hypothesis testing for means and proportions. The next two modules in this series will address analysis of variance and chi-squared tests.

Learning Objectives

After completing this module, the student will be able to:

- Define null and research hypothesis, test statistic, level of significance and decision rule

- Distinguish between Type I and Type II errors and discuss the implications of each

- Explain the difference between one and two sided tests of hypothesis

- Estimate and interpret p-values

- Explain the relationship between confidence interval estimates and p-values in drawing inferences

- Differentiate hypothesis testing procedures based on type of outcome variable and number of samples

Introduction to Hypothesis Testing

Techniques for Hypothesis Testing

The techniques for hypothesis testing depend on

- the type of outcome variable being analyzed (continuous, dichotomous, discrete)

- the number of comparison groups in the investigation

- whether the comparison groups are independent (i.e., physically separate such as men versus women) or dependent (i.e., matched or paired such as pre- and post-assessments on the same participants).

In estimation we focused explicitly on techniques for one and two samples and discussed estimation for a specific parameter (e.g., the mean or proportion of a population), for differences (e.g., difference in means, the risk difference) and ratios (e.g., the relative risk and odds ratio). Here we will focus on procedures for one and two samples when the outcome is either continuous (and we focus on means) or dichotomous (and we focus on proportions).

General Approach: A Simple Example

The Centers for Disease Control (CDC) reported on trends in weight, height and body mass index from the 1960's through 2002. 1 The general trend was that Americans were much heavier and slightly taller in 2002 as compared to 1960; both men and women gained approximately 24 pounds, on average, between 1960 and 2002. In 2002, the mean weight for men was reported at 191 pounds. Suppose that an investigator hypothesizes that weights are even higher in 2006 (i.e., that the trend continued over the subsequent 4 years). The research hypothesis is that the mean weight in men in 2006 is more than 191 pounds. The null hypothesis is that there is no change in weight, and therefore the mean weight is still 191 pounds in 2006.

| Null Hypothesis | H 0 : μ = 191 (no change) |
| Research Hypothesis | H 1 : μ > 191 (investigator's belief) |

In order to test the hypotheses, we select a random sample of American males in 2006 and measure their weights. Suppose we have resources available to recruit n=100 men into our sample. We weigh each participant and compute summary statistics on the sample data. Suppose in the sample we determine the following:

Do the sample data support the null or research hypothesis? The sample mean of 197.1 is numerically higher than 191. However, is this difference more than would be expected by chance? In hypothesis testing, we assume that the null hypothesis holds until proven otherwise. We therefore need to determine the likelihood of observing a sample mean of 197.1 or higher when the true population mean is 191 (i.e., if the null hypothesis is true or under the null hypothesis). We can compute this probability using the Central Limit Theorem. Specifically,

(Notice that we use the sample standard deviation in computing the Z score. This is generally an appropriate substitution as long as the sample size is large, n > 30.) Thus, there is less than a 1% probability of observing a sample mean as large as 197.1 when the true population mean is 191. Do you think that the null hypothesis is likely true? Based on how unlikely it is to observe a sample mean of 197.1 under the null hypothesis (i.e., <1% probability), we might infer, from our data, that the null hypothesis is probably not true.

Suppose that the sample data had turned out differently. Suppose that we instead observed the following in 2006:

How likely is it to observe a sample mean of 192.1 or higher when the true population mean is 191 (i.e., if the null hypothesis is true)? We can again compute this probability using the Central Limit Theorem. Specifically,

There is a 33.4% probability of observing a sample mean as large as 192.1 when the true population mean is 191. Do you think that the null hypothesis is likely true?

Neither of the sample means that we obtained allows us to know with certainty whether the null hypothesis is true or not. However, our computations suggest that, if the null hypothesis were true, the probability of observing a sample mean >197.1 is less than 1%. In contrast, if the null hypothesis were true, the probability of observing a sample mean >192.1 is about 33%. We can't know whether the null hypothesis is true, but the sample that provided a mean value of 197.1 provides much stronger evidence in favor of rejecting the null hypothesis, than the sample that provided a mean value of 192.1. Note that this does not mean that a sample mean of 192.1 indicates that the null hypothesis is true; it just doesn't provide compelling evidence to reject it.
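The two probabilities compared above can be reproduced with a short sketch. The sample standard deviation is not stated in the surrounding text; the value s = 25.6 used here is an assumption, back-solved from the reported Z scores (2.38 and 0.43) with n = 100. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def upper_tail_probability(xbar, mu0, s, n):
    """Return (Z, P(sample mean >= xbar)) assuming H0: mu = mu0 is true."""
    z = (xbar - mu0) / (s / sqrt(n))
    return z, 1 - NormalDist().cdf(z)


mu0, n = 191, 100
s = 25.6  # ASSUMED: back-solved from the reported Z scores, not given in the text

for xbar in (197.1, 192.1):
    z, prob = upper_tail_probability(xbar, mu0, s, n)
    print(f"xbar = {xbar}: Z = {z:.2f}, upper-tail probability = {prob:.4f}")
```

Under this assumption the first sample gives a tail probability below 1% and the second gives roughly one third, matching the contrast drawn in the text.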

In essence, hypothesis testing is a procedure to compute a probability that reflects the strength of the evidence (based on a given sample) for rejecting the null hypothesis. In hypothesis testing, we determine a threshold or cut-off point (called the critical value) to decide when to believe the null hypothesis and when to believe the research hypothesis. It is important to note that it is possible to observe any sample mean when the null hypothesis is true (in this example, when the population mean equals 191), but some sample means are very unlikely. Based on the two samples above it would seem reasonable to believe the research hypothesis when x̄ = 197.1, but to believe the null hypothesis when x̄ = 192.1. What we need is a threshold value such that if x̄ is above that threshold then we believe that H 1 is true and if x̄ is below that threshold then we believe that H 0 is true. The difficulty in determining a threshold for x̄ is that it depends on the scale of measurement. In this example, the threshold, sometimes called the critical value, might be 195 (i.e., if the sample mean is 195 or more then we believe that H 1 is true and if the sample mean is less than 195 then we believe that H 0 is true). If we were instead interested in assessing an increase in blood pressure over time, the critical value would be different because blood pressures are measured in millimeters of mercury (mmHg) as opposed to in pounds. In the following we will explain how the critical value is determined and how we handle the issue of scale.

First, to address the issue of scale in determining the critical value, we convert our sample data (in particular the sample mean) into a Z score. We know from the module on probability that the center of the Z distribution is zero and extreme values are those that exceed 2 or fall below -2. Z scores above 2 and below -2 represent approximately 5% of all Z values. If the observed sample mean is close to the mean specified in H 0 (here μ = 191), then Z will be close to zero. If the observed sample mean is much larger than the mean specified in H 0 , then Z will be large.
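As a quick check of the "approximately 5%" figure, the share of the standard normal distribution beyond ±2 can be computed directly. This is a small sketch using Python's standard library; the variable names are illustrative.

```python
from statistics import NormalDist

std_normal = NormalDist()  # mean 0, standard deviation 1

# Area above +2 plus area below -2 (equal by symmetry)
share_beyond_2 = 2 * (1 - std_normal.cdf(2))

# The same calculation at 1.96, the cutoff that leaves almost exactly 5% in the two tails
share_beyond_196 = 2 * (1 - std_normal.cdf(1.96))

print(f"beyond +/-2:    {share_beyond_2:.4f}")
print(f"beyond +/-1.96: {share_beyond_196:.4f}")
```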

In hypothesis testing, we select a critical value from the Z distribution. This is done by first determining what is called the level of significance, denoted α ("alpha"). What we are doing here is drawing a line at extreme values. The level of significance is the probability that we reject the null hypothesis (in favor of the alternative) when it is actually true and is also called the Type I error rate.

α = Level of significance = P(Type I error) = P(Reject H 0 | H 0 is true).

Because α is a probability, it ranges between 0 and 1. The most commonly used value in the medical literature for α is 0.05, or 5%. Thus, if an investigator selects α=0.05, then they are allowing a 5% probability of incorrectly rejecting the null hypothesis in favor of the alternative when the null is in fact true. Depending on the circumstances, one might choose to use a level of significance of 1% or 10%. For example, if an investigator wanted to reject the null only if there were even stronger evidence than that ensured with α=0.05, they could choose α=0.01 as their level of significance. The typical values for α are 0.01, 0.05 and 0.10, with α=0.05 the most commonly used value.
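The meaning of α as P(Reject H 0 | H 0 is true) can be illustrated with a small simulation sketch. It repeatedly draws samples from a population in which the null hypothesis really is true and counts how often an upper-tailed Z test rejects. The function name and the population values (σ = 25, n = 30) are hypothetical, and σ is treated as known.

```python
import random
from math import sqrt
from statistics import NormalDist


def simulated_type_i_rate(alpha=0.05, mu0=191, sigma=25, n=30, trials=20000, seed=1):
    """Fraction of simulated samples that reject H0 even though H0 is true."""
    rng = random.Random(seed)                      # fixed seed for repeatability
    z_crit = NormalDist().inv_cdf(1 - alpha)       # upper-tail critical value
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(mu0, sigma) for _ in range(n)]   # H0 holds: mean is mu0
        z = (sum(sample) / n - mu0) / (sigma / sqrt(n))
        if z > z_crit:
            rejections += 1
    return rejections / trials


print(simulated_type_i_rate(alpha=0.05))   # should land close to 0.05
```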

Suppose in our weight study we select α=0.05. We need to determine the value of Z that holds 5% of the values above it (see below).

The critical value of Z for α =0.05 is Z = 1.645 (i.e., 5% of the distribution is above Z=1.645). With this value we can set up what is called our decision rule for the test. The rule is to reject H 0 if the Z score is 1.645 or more.

With the first sample we have

Because 2.38 > 1.645, we reject the null hypothesis. (The same conclusion can be drawn by comparing the 0.0087 probability of observing a sample mean as extreme as 197.1 to the level of significance of 0.05. If the observed probability is smaller than the level of significance we reject H 0 ). Because the Z score exceeds the critical value, we conclude that the mean weight for men in 2006 is more than 191 pounds, the value reported in 2002. If we observed the second sample (i.e., sample mean =192.1), we would not be able to reject the null hypothesis because the Z score is 0.43 which is not in the rejection region (i.e., the region in the tail end of the curve above 1.645). With the second sample we do not have sufficient evidence (because we set our level of significance at 5%) to conclude that weights have increased. Again, the same conclusion can be reached by comparing probabilities. The probability of observing a sample mean as extreme as 192.1 is 33.4% which is not below our 5% level of significance.

Hypothesis Testing: Upper-, Lower-, and Two-Tailed Tests

The procedure for hypothesis testing is based on the ideas described above. Specifically, we set up competing hypotheses, select a random sample from the population of interest and compute summary statistics. We then determine whether the sample data supports the null or alternative hypotheses. The procedure can be broken down into the following five steps.

- Step 1. Set up hypotheses and select the level of significance α.

H 0 : Null hypothesis (no change, no difference);

H 1 : Research hypothesis (investigator's belief); α =0.05

Upper-tailed, Lower-tailed, Two-tailed Tests

The research or alternative hypothesis can take one of three forms. An investigator might believe that the parameter has increased, decreased or changed. For example, an investigator might hypothesize:

- H 1 : μ > μ 0 , where μ 0 is the comparator or null value (e.g., μ 0 = 191 in our example about weight in men in 2006) and an increase is hypothesized. This type of test is called an upper-tailed test.

- H 1 : μ < μ 0 , where a decrease is hypothesized. This is called a lower-tailed test.

- H 1 : μ ≠ μ 0 , where a difference is hypothesized. This is called a two-tailed test.

The exact form of the research hypothesis depends on the investigator's belief about the parameter of interest and whether it has possibly increased, decreased or is different from the null value. The research hypothesis is set up by the investigator before any data are collected.

- Step 2. Select the appropriate test statistic.

The test statistic is a single number that summarizes the sample information. An example of a test statistic is the Z statistic computed as follows:

[latex]Z=\frac{\bar{x}-\mu_{0}}{s/\sqrt{n}}[/latex]

When the sample size is small, we will use t statistics (just as we did when constructing confidence intervals for small samples). As we present each scenario, alternative test statistics are provided along with conditions for their appropriate use.

- Step 3. Set up decision rule.

The decision rule is a statement that tells under what circumstances to reject the null hypothesis. The decision rule is based on specific values of the test statistic (e.g., reject H 0 if Z > 1.645). The decision rule for a specific test depends on 3 factors: the research or alternative hypothesis, the test statistic and the level of significance. Each is discussed below.

- The decision rule depends on whether an upper-tailed, lower-tailed, or two-tailed test is proposed. In an upper-tailed test the decision rule has investigators reject H 0 if the test statistic is larger than the critical value. In a lower-tailed test the decision rule has investigators reject H 0 if the test statistic is smaller than the critical value. In a two-tailed test the decision rule has investigators reject H 0 if the test statistic is extreme, either larger than an upper critical value or smaller than a lower critical value.

- The exact form of the test statistic is also important in determining the decision rule. If the test statistic follows the standard normal distribution (Z), then the decision rule will be based on the standard normal distribution. If the test statistic follows the t distribution, then the decision rule will be based on the t distribution. The appropriate critical value will be selected from the t distribution again depending on the specific alternative hypothesis and the level of significance.

- The third factor is the level of significance. The level of significance which is selected in Step 1 (e.g., α =0.05) dictates the critical value. For example, in an upper tailed Z test, if α =0.05 then the critical value is Z=1.645.

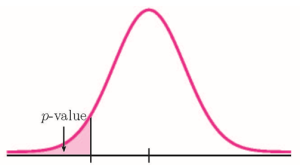

The following figures illustrate the rejection regions defined by the decision rule for upper-, lower- and two-tailed Z tests with α=0.05. Notice that the rejection regions are in the upper, lower and both tails of the curves, respectively. The decision rules are written below each figure.

Rejection Region for Upper-Tailed Z Test (H 1 : μ > μ 0 ) with α = 0.05. The decision rule is: Reject H 0 if Z > 1.645.

Rejection Region for Lower-Tailed Z Test (H 1 : μ < μ 0 ) with α = 0.05. The decision rule is: Reject H 0 if Z < -1.645.

Rejection Region for Two-Tailed Z Test (H 1 : μ ≠ μ 0 ) with α = 0.05. The decision rule is: Reject H 0 if Z < -1.960 or if Z > 1.960.

The complete table of critical values of Z for upper, lower and two-tailed tests can be found in the table of Z values to the right in "Other Resources." Critical values of t for upper, lower and two-tailed tests can be found in the table of t values in "Other Resources."
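Instead of reading a table, the Z critical values for the three kinds of test can be computed from the inverse of the standard normal distribution. The sketch below uses Python's standard library; the function name and the `tail` labels are illustrative choices.

```python
from statistics import NormalDist


def z_critical(alpha, tail):
    """Critical value of Z for a test at level alpha.

    tail = 'upper': reject H0 if Z > value
    tail = 'lower': reject H0 if Z < value
    tail = 'two'  : reject H0 if Z < -value or Z > value
    """
    std_normal = NormalDist()
    if tail == "upper":
        return std_normal.inv_cdf(1 - alpha)
    if tail == "lower":
        return std_normal.inv_cdf(alpha)
    if tail == "two":
        return std_normal.inv_cdf(1 - alpha / 2)   # alpha is split across both tails
    raise ValueError("tail must be 'upper', 'lower', or 'two'")


for tail in ("upper", "lower", "two"):
    print(tail, round(z_critical(0.05, tail), 3))
```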

- Step 4. Compute the test statistic.

Here we compute the test statistic by substituting the observed sample data into the test statistic identified in Step 2.

- Step 5. Conclusion.

The final conclusion is made by comparing the test statistic (which is a summary of the information observed in the sample) to the decision rule. The final conclusion will be either to reject the null hypothesis (because the sample data are very unlikely if the null hypothesis is true) or not to reject the null hypothesis (because the sample data are not very unlikely). If the null hypothesis is rejected, then an exact significance level is computed to describe the likelihood of observing the sample data assuming that the null hypothesis is true. The exact level of significance is called the p-value and it will be less than the chosen level of significance if we reject H 0 .

Statistical computing packages provide exact p-values as part of their standard output for hypothesis tests. In fact, when using a statistical computing package, the steps outlined above can be abbreviated. The hypotheses (Step 1) should always be set up in advance of any analysis and the significance criterion should also be determined (e.g., α = 0.05). Statistical computing packages will produce the test statistic (usually reporting the test statistic as t) and a p-value. The investigator can then determine statistical significance using the following: If p < α then reject H 0 .
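The rule "if p < α then reject H 0 " can be sketched for an upper-tailed Z test. The example uses Z = 2.38, the Z score reported earlier for the first weight sample; the function name is illustrative.

```python
from statistics import NormalDist


def upper_tail_p_value(z):
    """p-value for an upper-tailed Z test: area to the right of z."""
    return 1 - NormalDist().cdf(z)


z = 2.38                      # Z score reported for the first weight sample
p = upper_tail_p_value(z)
print(f"p-value = {p:.4f}")

# Compare the same p-value against several significance levels
for alpha in (0.05, 0.025, 0.010, 0.005):
    decision = "reject H0" if p < alpha else "do not reject H0"
    print(f"alpha = {alpha:.3f}: {decision}")
```

The decision flips between α = 0.010 and α = 0.005, which is another way of saying that the p-value lies between those two levels.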

H 0 : μ = 191

H 1 : μ > 191

α = 0.05

The research hypothesis is that weights have increased, and therefore an upper-tailed test is used.

Because the sample size is large (n > 30) the appropriate test statistic is

[latex]Z=\frac{\bar{x}-\mu_{0}}{s/\sqrt{n}}[/latex]

In this example, we are performing an upper-tailed test (H 1 : μ > 191), with a Z test statistic and selected α = 0.05. Reject H 0 if Z > 1.645.

We now substitute the sample data into the formula for the test statistic identified in Step 2.

We reject H 0 because 2.38 > 1.645. We have statistically significant evidence at α = 0.05 to show that the mean weight in men in 2006 is more than 191 pounds.

Because we rejected the null hypothesis, we now approximate the p-value, which is the likelihood of observing sample data at least this extreme if the null hypothesis is true. An alternative definition of the p-value is the smallest level of significance where we can still reject H 0 . In this example, we observed Z = 2.38 and for α = 0.05, the critical value was 1.645. Because 2.38 exceeded 1.645 we rejected H 0 . In our conclusion we reported a statistically significant increase in mean weight at a 5% level of significance. Using the table of critical values for upper-tailed tests, we can approximate the p-value. If we select α = 0.025, the critical value is 1.960, and we still reject H 0 because 2.38 > 1.960. If we select α = 0.010 the critical value is 2.326, and we still reject H 0 because 2.38 > 2.326. However, if we select α = 0.005, the critical value is 2.576, and we cannot reject H 0 because 2.38 < 2.576. Therefore, the smallest tabled α where we still reject H 0 is 0.010, which we take as the approximate p-value. A statistical computing package would produce a more precise p-value, which would be in between 0.005 and 0.010. Here we are approximating the p-value and would report p < 0.010.

Type I and Type II Errors

In all tests of hypothesis, there are two types of errors that can be committed. The first is called a Type I error and refers to the situation where we incorrectly reject H 0 when in fact it is true. This is also called a false positive result (as we incorrectly conclude that the research hypothesis is true when in fact it is not).
When we run a test of hypothesis and decide to reject H 0 (e.g., because the test statistic exceeds the critical value in an upper-tailed test) then either we make a correct decision because the research hypothesis is true or we commit a Type I error. The different conclusions are summarized in the table below. Note that we will never know whether the null hypothesis is really true or false (i.e., we will never know which row of the following table reflects reality).

Table - Conclusions in Test of Hypothesis

|  | Do Not Reject H 0 | Reject H 0 |
| H 0 is True | Correct decision | Type I error |
| H 0 is False | Type II error | Correct decision |

In the first step of the hypothesis test, we select a level of significance, α, and α = P(Type I error). Because we purposely select a small value for α, we control the probability of committing a Type I error. For example, if we select α = 0.05, then when the null hypothesis is true there is only a 5% probability that our test leads us to reject H 0 and thereby commit a Type I error. Most investigators are very comfortable with this and are confident when rejecting H 0 that the research hypothesis is true (as it is the more likely scenario when we reject H 0 ).

When we run a test of hypothesis and decide not to reject H 0 (e.g., because the test statistic is below the critical value in an upper-tailed test) then either we make a correct decision because the null hypothesis is true or we commit a Type II error. Beta (β) represents the probability of a Type II error and is defined as follows: β = P(Type II error) = P(Do not Reject H 0 | H 0 is false). Unfortunately, we cannot choose β to be small (e.g., 0.05) to control the probability of committing a Type II error because β depends on several factors including the sample size, α, and the research hypothesis. When we do not reject H 0 , it may be very likely that we are committing a Type II error (i.e., failing to reject H 0 when in fact it is false). Therefore, when tests are run and the null hypothesis is not rejected we often make a weak concluding statement allowing for the possibility that we might be committing a Type II error. If we do not reject H 0 , we conclude that we do not have significant evidence to show that H 1 is true. We do not conclude that H 0 is true. The most common reason for a Type II error is a small sample size.

Tests with One Sample, Continuous Outcome

Hypothesis testing applications with a continuous outcome variable in a single population are performed according to the five-step procedure outlined above. A key component is setting up the null and research hypotheses.
The objective is to compare the mean in a single population to a known mean (μ 0 ). The known value is generally derived from another study or report, for example a study in a similar, but not identical, population or a study performed some years ago. The latter is called a historical control. It is important in setting up the hypotheses in a one sample test that the mean specified in the null hypothesis is a fair and reasonable comparator. This will be discussed in the examples that follow.

Test Statistics for Testing H 0 : μ = μ 0

Note that statistical computing packages will use the t statistic exclusively and make the necessary adjustments for comparing the test statistic to appropriate values from probability tables to produce a p-value.

The National Center for Health Statistics (NCHS) published a report in 2005 entitled Health, United States, containing extensive information on major trends in the health of Americans. Data are provided for the US population as a whole and for specific ages, sexes and races. The NCHS report indicated that in 2002 Americans paid an average of $3,302 per year on health care and prescription drugs. An investigator hypothesizes that in 2005 expenditures have decreased primarily due to the availability of generic drugs. To test the hypothesis, a sample of 100 Americans is selected and their expenditures on health care and prescription drugs in 2005 are measured. The sample data are summarized as follows: n = 100, x̄ = $3,190 and s = $890. Is there statistical evidence of a reduction in expenditures on health care and prescription drugs in 2005? Is the sample mean of $3,190 evidence of a true reduction in the mean or is it within chance fluctuation? We will run the test using the five-step approach.

H 0 : μ = 3,302

H 1 : μ < 3,302

α = 0.05

The research hypothesis is that expenditures have decreased, and therefore a lower-tailed test is used. This is a lower-tailed test, using a Z statistic and a 5% level of significance. Reject H 0 if Z < -1.645.
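A minimal sketch of the test statistic for this expenditures example, using the summary data given above (n = 100, x̄ = 3,190, s = 890, μ 0 = 3,302). The variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

n, xbar, s = 100, 3190, 890      # sample summary from the example
mu0, alpha = 3302, 0.05          # null value and level of significance

z = (xbar - mu0) / (s / sqrt(n))             # Z test statistic
z_crit = NormalDist().inv_cdf(alpha)         # lower-tail critical value (about -1.645)
p_value = NormalDist().cdf(z)                # area to the left of z
reject_h0 = z < z_crit

print(f"Z = {z:.2f}, critical value = {z_crit:.3f}, p-value = {p_value:.3f}")
print("reject H0" if reject_h0 else "do not reject H0")
```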

We do not reject H 0 because -1.26 > -1.645. We do not have statistically significant evidence at α = 0.05 to show that the mean expenditures on health care and prescription drugs are lower in 2005 than the mean of $3,302 reported in 2002.

Recall that when we fail to reject H 0 in a test of hypothesis, either the null hypothesis is true (here the mean expenditures in 2005 are the same as those in 2002 and equal to $3,302) or we committed a Type II error (i.e., we failed to reject H 0 when in fact it is false). In summarizing this test, we conclude that we do not have sufficient evidence to reject H 0 . We do not conclude that H 0 is true, because there may be a moderate to high probability that we committed a Type II error. It is possible that the sample size is not large enough to detect a difference in mean expenditures.

The NCHS reported that the mean total cholesterol level in 2002 for all adults was 203. Total cholesterol levels in participants who attended the seventh examination of the Offspring in the Framingham Heart Study are summarized as follows: n = 3,310, x̄ = 200.3, and s = 36.8. Is there statistical evidence of a difference in mean cholesterol levels in the Framingham Offspring? Here we want to assess whether the sample mean of 200.3 in the Framingham sample is statistically significantly different from 203 (i.e., beyond what we would expect by chance). We will run the test using the five-step approach.

H 0 : μ = 203

H 1 : μ ≠ 203

α = 0.05

The research hypothesis is that cholesterol levels are different in the Framingham Offspring, and therefore a two-tailed test is used.
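A minimal sketch of the two-tailed calculation for this cholesterol example, using the summary data given above (n = 3,310, x̄ = 200.3, s = 36.8, μ 0 = 203). The variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

n, xbar, s = 3310, 200.3, 36.8   # Framingham Offspring summary from the example
mu0, alpha = 203, 0.05

z = (xbar - mu0) / (s / sqrt(n))                 # Z test statistic
z_crit = NormalDist().inv_cdf(1 - alpha / 2)     # two-tailed cutoff (about 1.960)
p_value = 2 * NormalDist().cdf(-abs(z))          # both tails beyond |z|
reject_h0 = abs(z) > z_crit

print(f"Z = {z:.2f}, reject if |Z| > {z_crit:.3f}, p-value = {p_value:.6f}")
print("reject H0" if reject_h0 else "do not reject H0")
```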

This is a two-tailed test, using a Z statistic and a 5% level of significance. Reject H 0 if Z < -1.960 or if Z > 1.960.

We reject H 0 because -4.22 < -1.960. We have statistically significant evidence at α = 0.05 to show that the mean total cholesterol level in the Framingham Offspring is different from the national average of 203 reported in 2002. Because we reject H 0 , we also approximate a p-value. Using the two-sided significance levels, p < 0.0001.

Statistical Significance versus Clinical (Practical) Significance

This example raises an important concept of statistical versus clinical or practical significance. From a statistical standpoint, the total cholesterol levels in the Framingham sample are highly statistically significantly different from the national average with p < 0.0001 (i.e., if the null hypothesis were true, there would be less than a 0.01% chance of observing a sample mean at least this far from 203). However, the sample mean in the Framingham Offspring study is 200.3, less than 3 units different from the national mean of 203. The reason that the data are so highly statistically significant is the very large sample size. It is always important to assess both statistical and clinical significance of data. This is particularly relevant when the sample size is large. Is a 3 unit difference in total cholesterol a meaningful difference?

Consider again the NCHS-reported mean total cholesterol level in 2002 for all adults of 203. Suppose a new drug is proposed to lower total cholesterol. A study is designed to evaluate the efficacy of the drug in lowering cholesterol. Fifteen patients are enrolled in the study and asked to take the new drug for 6 weeks. At the end of 6 weeks, each patient's total cholesterol level is measured and the sample statistics are as follows: n = 15, x̄ = 195.9 and s = 28.7. Is there statistical evidence of a reduction in mean total cholesterol in patients after using the new drug for 6 weeks? We will run the test using the five-step approach.
H 0 : μ = 203

H 1 : μ < 203

α = 0.05
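A minimal sketch of the calculation for this drug example, using the summary data given above (n = 15, x̄ = 195.9, s = 28.7, μ 0 = 203). With a sample this small, a t statistic with df = n − 1 is the usual choice. Python's standard library has no t distribution, so the critical value is hard-coded from a standard t table (one-sided 5% value for df = 14, about 1.761); the variable names are illustrative.

```python
from math import sqrt

n, xbar, s = 15, 195.9, 28.7     # sample summary from the example
mu0 = 203

t = (xbar - mu0) / (s / sqrt(n))   # t test statistic
df = n - 1                         # degrees of freedom

# ASSUMED from a standard t table: one-sided 5% critical value for df = 14
t_crit = -1.761
reject_h0 = t < t_crit             # lower-tailed decision rule

print(f"t = {t:.2f}, df = {df}, critical value = {t_crit}")
print("reject H0" if reject_h0 else "do not reject H0")
```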

Because the sample size is small (n<30) the appropriate test statistic is

[latex]t=\frac{\bar{x}-\mu_{0}}{s/\sqrt{n}}[/latex]

This is a lower-tailed test, using a t statistic and a 5% level of significance. In order to determine the critical value of t, we need degrees of freedom, df, defined as df = n-1. In this example df = 15-1 = 14. The critical value for a lower-tailed test with df = 14 and α = 0.05 is -1.761 and the decision rule is as follows: Reject H 0 if t < -1.761.

We do not reject H 0 because -0.96 > -1.761. We do not have statistically significant evidence at α = 0.05 to show that the mean total cholesterol level is lower than the national mean in patients taking the new drug for 6 weeks. Again, because we failed to reject the null hypothesis we make a weaker concluding statement allowing for the possibility that we may have committed a Type II error (i.e., failed to reject H 0 when in fact the drug is efficacious).

This example raises an important issue in terms of study design. In this example we assume in the null hypothesis that the mean cholesterol level is 203. This is taken to be the mean cholesterol level in patients without treatment. Is this an appropriate comparator? Alternative and potentially more efficient study designs to evaluate the effect of the new drug could involve two treatment groups, where one group receives the new drug and the other does not, or we could measure each patient's baseline or pre-treatment cholesterol level and then assess changes from baseline to 6 weeks post-treatment. These designs are also discussed here.

Tests with One Sample, Dichotomous Outcome

Hypothesis testing applications with a dichotomous outcome variable in a single population are also performed according to the five-step procedure. Similar to tests for means, a key component is setting up the null and research hypotheses.
The objective is to compare the proportion of successes in a single population to a known proportion ([latex]p_0[/latex]). That known proportion is generally derived from another study or report and is sometimes called a historical control. It is important in setting up the hypotheses in a one sample test that the proportion specified in the null hypothesis is a fair and reasonable comparator.

In one sample tests for a dichotomous outcome, we set up our hypotheses against an appropriate comparator. We select a sample and compute descriptive statistics on the sample data. Specifically, we compute the sample size (n) and the sample proportion, which is the ratio of the number of successes (x) to the sample size:

[latex]\hat{p} = \dfrac{x}{n}[/latex]

We then determine the appropriate test statistic (Step 2) for the hypothesis test. The formula for the test statistic is given below.

Test Statistic for Testing [latex]H_0: p = p_0[/latex] if [latex]\min(np_0, n(1-p_0)) \geq 5[/latex]

[latex]z = \dfrac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1-p_0)}{n}}}[/latex]

The formula above is appropriate for large samples, defined as samples in which the smaller of [latex]np_0[/latex] and [latex]n(1-p_0)[/latex] is at least 5. This is similar, but not identical, to the condition required for appropriate use of the confidence interval formula for a population proportion, i.e., [latex]\min(n\hat{p}, n(1-\hat{p})) \geq 5[/latex]. Here we use the proportion specified in the null hypothesis as the true proportion of successes rather than the sample proportion. If we fail to satisfy the condition, then alternative procedures, called exact methods, must be used to test the hypothesis about the population proportion.

Example: The NCHS report indicated that in 2002 the prevalence of cigarette smoking among American adults was 21.1%. Data on prevalent smoking in n=3,536 participants who attended the seventh examination of the Offspring in the Framingham Heart Study indicated that 482/3,536 = 13.6% of the respondents were currently smoking at the time of the exam. Suppose we want to assess whether the prevalence of smoking is lower in the Framingham Offspring sample given the focus on cardiovascular health in that community.
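
The sample-size check and the Z statistic described above can be sketched in Python for the smoking data. This is an illustration under stated assumptions: the function name `one_sample_proportion_z` is hypothetical, the sample proportion is rounded to 0.136 as in the text, and the lower-tail p-value is computed from the standard normal distribution with `math.erfc` so that a very small value is not rounded to zero.

```python
import math
from statistics import NormalDist


def one_sample_proportion_z(p_hat, p0, n):
    """Large-sample Z statistic for H0: p = p0."""
    # Large-sample condition from the text: the smaller of n*p0 and n*(1-p0) is at least 5.
    if min(n * p0, n * (1 - p0)) < 5:
        raise ValueError("sample too small for the Z test; an exact method is needed")
    standard_error = math.sqrt(p0 * (1 - p0) / n)
    return (p_hat - p0) / standard_error


n = 3536
p0 = 0.211     # national prevalence reported by NCHS
p_hat = 0.136  # 482/3536, rounded to three decimals as in the text

z = one_sample_proportion_z(p_hat, p0, n)

# Lower-tailed test at alpha = 0.05: critical value is about -1.645.
z_critical = NormalDist().inv_cdf(0.05)

# Lower-tail area under the standard normal curve, P(Z < z).
p_value = 0.5 * math.erfc(-z / math.sqrt(2))

print(f"z = {z:.2f}, critical value = {z_critical:.3f}, reject H0: {z < z_critical}")
```

Using the unrounded proportion 482/3536 instead of 0.136 changes the statistic slightly but not the decision.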
Is there evidence of a statistically significantly lower prevalence of smoking in the Framingham Offspring study as compared to the prevalence among all Americans?

[latex]H_0: p = 0.211[/latex], [latex]H_1: p < 0.211[/latex], α=0.05

We must first check that the sample size is adequate. Specifically, we need to check min([latex]np_0[/latex], [latex]n(1-p_0)[/latex]) = min(3,536(0.211), 3,536(1-0.211)) = min(746, 2790) = 746. The sample size is more than adequate, so the following formula can be used:

[latex]z = \dfrac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1-p_0)}{n}}}[/latex]

This is a lower-tailed test, using a Z statistic and a 5% level of significance. Reject [latex]H_0[/latex] if Z < -1.645.

Substituting the sample data gives [latex]z = \dfrac{0.136 - 0.211}{\sqrt{\dfrac{0.211(1-0.211)}{3536}}} = -10.93[/latex].

We reject [latex]H_0[/latex] because -10.93 < -1.645. We have statistically significant evidence at α=0.05 to show that the prevalence of smoking in the Framingham Offspring is lower than the prevalence nationally (21.1%). Here, p < 0.0001.

The NCHS report indicated that in 2002, 75% of children aged 2 to 17 saw a dentist in the past year. An investigator wants to assess whether use of dental services is similar in children living in the city of Boston. A sample of 125 children aged 2 to 17 living in Boston is surveyed and 64 reported seeing a dentist over the past 12 months. Is there a significant difference in use of dental services between children living in Boston and the national data? Calculate this on your own before checking the answer.

Tests with Two Independent Samples, Continuous Outcome

There are many applications where it is of interest to compare two independent groups with respect to their mean scores on a continuous outcome. Here we compare means between groups, but rather than generating an estimate of the difference, we will test whether the observed difference (increase, decrease or difference) is statistically significant or not. Remember that hypothesis testing gives an assessment of statistical significance, whereas estimation gives an estimate of effect, and both are important.
Here we discuss the comparison of means when the two comparison groups are independent or physically separate. The two groups might be determined by a particular attribute (e.g., sex, diagnosis of cardiovascular disease) or might be set up by the investigator (e.g., participants assigned to receive an experimental treatment or placebo). The first step in the analysis involves computing descriptive statistics on each of the two samples. Specifically, we compute the sample size, mean and standard deviation in each sample and we denote these summary statistics as follows:

for sample 1: [latex]n_1[/latex], [latex]\bar{x}_1[/latex], [latex]s_1[/latex]

for sample 2: [latex]n_2[/latex], [latex]\bar{x}_2[/latex], [latex]s_2[/latex]

The designation of sample 1 and sample 2 is arbitrary. In a clinical trial setting the convention is to call the treatment group 1 and the control group 2. However, when comparing men and women, for example, either group can be 1 or 2.

In the two independent samples application with a continuous outcome, the parameter of interest in the test of hypothesis is the difference in population means, [latex]\mu_1 - \mu_2[/latex]. The null hypothesis is always that there is no difference between groups with respect to means, i.e., [latex]H_0: \mu_1 - \mu_2 = 0[/latex]. The null hypothesis can also be written as follows: [latex]H_0: \mu_1 = \mu_2[/latex]. In the research hypothesis, an investigator can hypothesize that the first mean is larger than the second ([latex]H_1: \mu_1 > \mu_2[/latex]), that the first mean is smaller than the second ([latex]H_1: \mu_1 < \mu_2[/latex]), or that the means are different ([latex]H_1: \mu_1 \neq \mu_2[/latex]). The three different alternatives represent upper-, lower-, and two-tailed tests, respectively. The following test statistics are used to test these hypotheses.

Test Statistics for Testing [latex]H_0: \mu_1 = \mu_2[/latex]

If both samples are large ([latex]n_1 \geq 30[/latex] and [latex]n_2 \geq 30[/latex]): [latex]z = \dfrac{\bar{x}_1 - \bar{x}_2}{S_p\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}}[/latex]

If either sample is small ([latex]n_1 < 30[/latex] or [latex]n_2 < 30[/latex]): [latex]t = \dfrac{\bar{x}_1 - \bar{x}_2}{S_p\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}}[/latex], with [latex]df = n_1 + n_2 - 2[/latex]

NOTE: The formulas above assume equal variability in the two populations (i.e., the population variances are equal, or [latex]\sigma_1^2 = \sigma_2^2[/latex]). This means that the outcome is equally variable in each of the comparison populations. For analysis, we have samples from each of the comparison populations. If the sample variances are similar, then the assumption about variability in the populations is probably reasonable. As a guideline, if the ratio of the sample variances, [latex]s_1^2/s_2^2[/latex], is between 0.5 and 2 (i.e., if one variance is no more than double the other), then the formulas above are appropriate. If the ratio of the sample variances is greater than 2 or less than 0.5, then alternative formulas must be used to account for the heterogeneity in variances.

The test statistics include [latex]S_p[/latex], which is the pooled estimate of the common standard deviation (again assuming that the variances in the populations are similar), computed from the weighted average of the sample variances as follows:

[latex]S_p = \sqrt{\dfrac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}}[/latex]

Because we are assuming equal variances between groups, we pool the information on variability (sample variances) to generate an estimate of the variability in the population. (Note: Because [latex]S_p[/latex] is based on a weighted average of the sample variances, [latex]S_p[/latex] will always lie between [latex]s_1[/latex] and [latex]s_2[/latex].)

Data measured on n=3,539 participants who attended the seventh examination of the Offspring in the Framingham Heart Study are shown below.

Suppose we now wish to assess whether there is a statistically significant difference in mean systolic blood pressures between men and women using a 5% level of significance.

[latex]H_0: \mu_1 = \mu_2[/latex], [latex]H_1: \mu_1 \neq \mu_2[/latex], α=0.05

Because both samples are large (n > 30 in each group), we can use the Z test statistic as opposed to t. Note that statistical computing packages use t throughout. Before implementing the formula, we first check whether the assumption of equality of population variances is reasonable. The guideline suggests investigating the ratio of the sample variances, [latex]s_1^2/s_2^2[/latex]. Suppose we call the men group 1 and the women group 2. Again, this is arbitrary; it only needs to be noted when interpreting the results. The ratio of the sample variances is [latex]17.5^2/20.1^2 = 0.76[/latex], which falls between 0.5 and 2, suggesting that the assumption of equality of population variances is reasonable. The appropriate test statistic is

[latex]z = \dfrac{\bar{x}_1 - \bar{x}_2}{S_p\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}}[/latex]

For this two-tailed test at a 5% level of significance, we reject [latex]H_0[/latex] if Z < -1.960 or if Z > 1.960.

We now substitute the sample data into the formula for the test statistic identified in Step 2. Before substituting, we will first compute [latex]S_p[/latex], the pooled estimate of the common standard deviation. Notice that the pooled estimate of the common standard deviation, [latex]S_p[/latex], falls in between the standard deviations in the comparison groups (i.e., 17.5 and 20.1). [latex]S_p[/latex] is slightly closer in value to the standard deviation in the women (20.1) as there were slightly more women in the sample. Recall that [latex]S_p[/latex] is a weighted average of the variability in the comparison groups, weighted by the respective sample sizes.

Now the test statistic: Z = 2.66.

We reject [latex]H_0[/latex] because 2.66 > 1.960. We have statistically significant evidence at α=0.05 to show that there is a difference in mean systolic blood pressures between men and women. The p-value is p < 0.010.

Here again we find that there is a statistically significant difference in mean systolic blood pressures between men and women at p < 0.010.
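
The variance-ratio guideline and the pooled test statistic used in this example can be sketched in Python. The means (128.2, 126.5) and standard deviations (17.5, 20.1) come from the text. The function names are hypothetical, and the group sizes below are HYPOTHETICAL placeholders because the sample sizes are not stated in the passage above, so the resulting statistic is for illustration only and will not reproduce the Z value reported in the text.

```python
import math


def variance_ratio(s1, s2):
    """Ratio of sample variances, s1^2 / s2^2."""
    return s1 ** 2 / s2 ** 2


def pooled_sd(n1, s1, n2, s2):
    """Pooled estimate of the common standard deviation, Sp."""
    pooled_variance = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)
    return math.sqrt(pooled_variance)


def two_sample_statistic(n1, mean1, s1, n2, mean2, s2):
    """Pooled two-sample statistic (read as Z for large samples, t otherwise)."""
    sp = pooled_sd(n1, s1, n2, s2)
    return (mean1 - mean2) / (sp * math.sqrt(1 / n1 + 1 / n2))


# Guideline from the text: the pooled formulas are appropriate if 0.5 <= ratio <= 2.
ratio = variance_ratio(17.5, 20.1)  # men = group 1, women = group 2
equal_variance_ok = 0.5 <= ratio <= 2

# HYPOTHETICAL group sizes, chosen only to make the sketch runnable.
n_men, n_women = 1000, 1200

sp = pooled_sd(n_men, 17.5, n_women, 20.1)
z = two_sample_statistic(n_men, 128.2, 17.5, n_women, 126.5, 20.1)

print(f"variance ratio = {ratio:.2f}, pooled formulas appropriate: {equal_variance_ok}")
print(f"Sp = {sp:.1f} (lies between 17.5 and 20.1)")
```

With the study's actual group sizes substituted for the placeholders, the same functions give the pooled standard deviation and test statistic for the example.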
Notice that there is a very small difference in the sample means (128.2-126.5 = 1.7 units), but this difference is beyond what would be expected by chance. Is this a clinically meaningful difference? The large sample size in this example is driving the statistical significance. A 95% confidence interval for the difference in mean systolic blood pressures is 1.7 ± 1.26, or (0.44, 2.96). The confidence interval provides an assessment of the magnitude of the difference between means, whereas the test of hypothesis and p-value provide an assessment of the statistical significance of the difference.

Above we performed a study to evaluate a new drug designed to lower total cholesterol. The study involved one sample of patients; each patient took the new drug for 6 weeks and had their cholesterol measured. As a means of evaluating the efficacy of the new drug, the mean total cholesterol following 6 weeks of treatment was compared to the NCHS-reported mean total cholesterol level in 2002 for all adults of 203. At the end of the example, we discussed the appropriateness of the fixed comparator as well as an alternative study design to evaluate the effect of the new drug involving two treatment groups, where one group receives the new drug and the other does not. Here, we revisit the example with a concurrent or parallel control group, which is very typical in randomized controlled trials or clinical trials (refer to the EP713 module on Clinical Trials).

A new drug is proposed to lower total cholesterol. A randomized controlled trial is designed to evaluate the efficacy of the medication in lowering cholesterol. Thirty participants are enrolled in the trial and are randomly assigned to receive either the new drug or a placebo. The participants do not know which treatment they are assigned. Each participant is asked to take the assigned treatment for 6 weeks. At the end of 6 weeks, each patient's total cholesterol level is measured and the sample statistics are as follows.