The best AI tools for research papers and academic research (Literature review, grants, PDFs and more)

As our collective understanding and application of artificial intelligence (AI) continues to evolve, so too does the realm of academic research. Some people are scared by it while others are openly embracing the change.

Make no mistake, AI is here to stay!

Instead of tirelessly scrolling through hundreds of PDFs, a powerful AI tool comes to your rescue, summarizing key information in your research papers. Instead of manually combing through citations and conducting literature reviews, an AI research assistant proficiently handles these tasks.

These aren’t futuristic dreams, but today’s reality. Welcome to the transformative world of AI-powered research tools!

This blog post will dive deeper into these tools, providing a detailed review of how AI is revolutionizing academic research. We’ll look at the tools that can make your literature review process less tedious, your search for relevant papers more precise, and your overall research process more efficient and fruitful.

I certainly wish these had been around during my time in academia. It can be quite confronting trying to work out which ones you should and shouldn’t use. A new one seems to come out every day!

Here is everything you need to know about AI for academic research and the ones I have personally trialed on my YouTube channel.

My Top AI Tools for Researchers and Academics – Tested and Reviewed!

There are many different tools now available on the market, but only a handful are specifically designed with researchers and academics as their primary users.

These are my recommendations that’ll cover almost everything that you’ll want to do:

| Find literature using semantic search. I use this almost every day to answer a question that pops into my head. | |

| An increasingly powerful and useful application, especially effective for conducting literature reviews through its advanced semantic search capabilities. | |

| An AI-powered search engine specifically designed for academic research, providing a range of innovative features that make it extremely valuable for academia, PhD candidates, and anyone interested in in-depth research on various topics. | |

| A tool designed to streamline the process of academic writing and journal submission, offering features that integrate directly with Microsoft Word as well as an online web document option. | |

| A tool that allows users to easily understand complex language in peer-reviewed papers. The free tier is enough for nearly everyone. | |

| A versatile and powerful tool that acts like a personal data scientist, ideal for any research field. It simplifies data analysis and visualization, making complex tasks approachable and quick through its user-friendly interface. | |

Want to find out all of the tools that you could use?

Here they are, below:

AI literature search and mapping – best AI tools for a literature review – Elicit and more

Harnessing AI tools for literature reviews and mapping brings a new level of efficiency and precision to academic research. No longer do you have to spend hours looking in obscure research databases to find what you need!

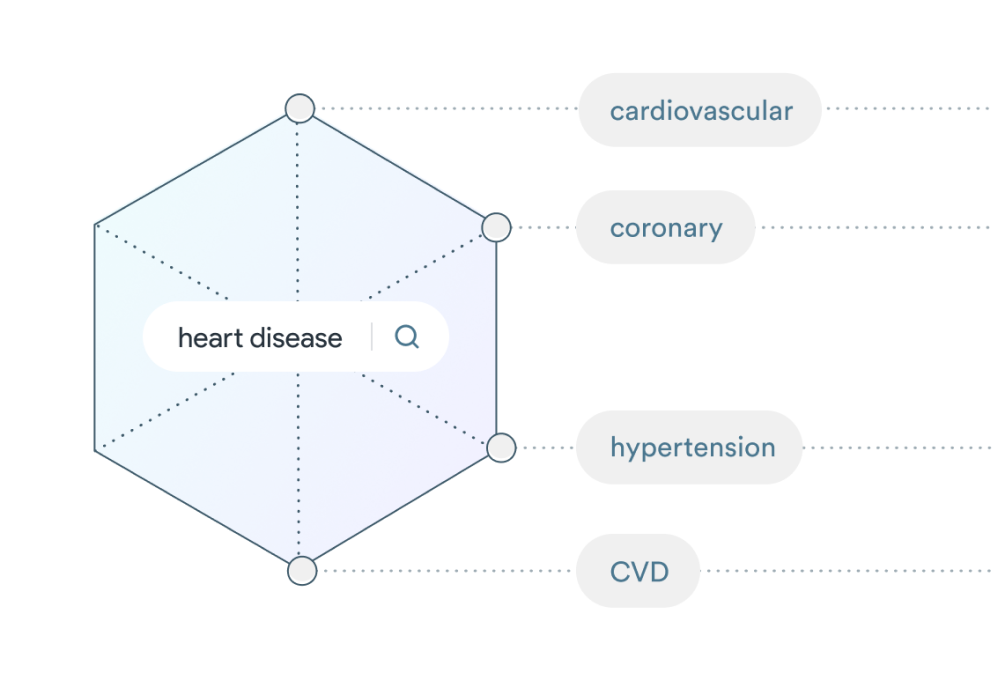

AI-powered tools like Semantic Scholar and elicit.org use sophisticated search engines to quickly identify relevant papers.

They can mine key information from countless PDFs, drastically reducing research time. You can even search with natural-language questions rather than having to wrangle keywords.
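Searching with questions instead of keywords usually relies on embeddings. The question and each paper are mapped to vectors, and papers are ranked by how closely their vectors point in the same direction (cosine similarity). The sketch below is a generic illustration of that technique, not how Elicit or Semantic Scholar actually work. The three-dimensional vectors are made-up stand-ins for what a real embedding model would produce, and the paper titles are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Toy, hand-made "embeddings" (hypothetical values); real tools use trained
# embedding models that produce vectors with hundreds of dimensions.
PAPERS: Dict[str, List[float]] = {
    "Sleep deprivation and working memory": [0.9, 0.1, 0.0],
    "Caffeine intake in shift workers": [0.6, 0.7, 0.1],
    "Graphene synthesis by chemical vapour deposition": [0.0, 0.1, 0.95],
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank(query_vec: List[float], papers: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Return (title, score) pairs sorted from most to least similar."""
    scored = [(title, cosine(query_vec, vec)) for title, vec in papers.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical embedding of the question "Does losing sleep hurt memory?"
query = [0.85, 0.2, 0.05]
for title, score in rank(query, PAPERS):
    print(f"{score:.3f}  {title}")
```

In a real tool, a trained model produces the vectors. That is what lets a question match a paper that shares few or none of its exact words.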

With AI as your research assistant, you can navigate the vast sea of scientific research with ease, uncovering citations and focusing on academic writing. It’s a revolutionary way to take on literature reviews.

- Elicit – https://elicit.org

- Litmaps – https://www.litmaps.com

- Research Rabbit – https://www.researchrabbit.ai/

- Connected Papers – https://www.connectedpapers.com/

- Supersymmetry.ai – https://www.supersymmetry.ai

- Semantic Scholar – https://www.semanticscholar.org

- Laser AI – https://laser.ai/

- Inciteful – https://inciteful.xyz/

- Scite – https://scite.ai/

- System – https://www.system.com

If you like AI tools, you may want to check out this article:

- How to get ChatGPT to write an essay [The prompts you need]

AI-powered research tools and AI for academic research

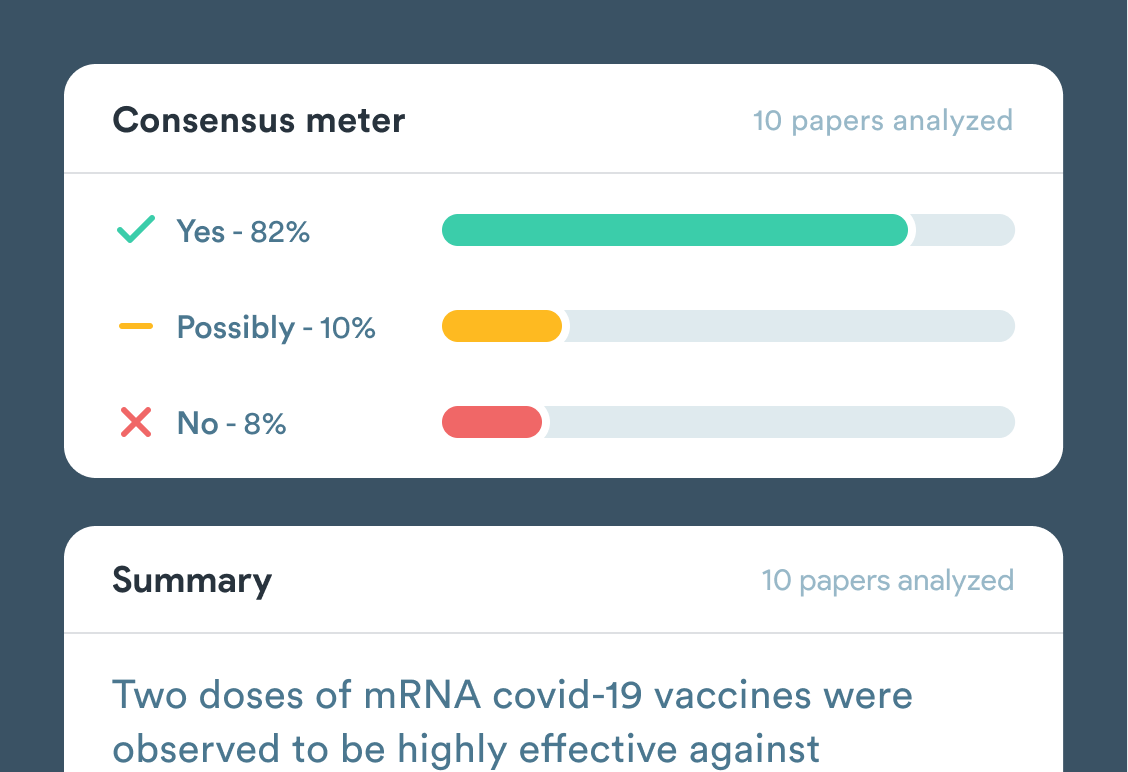

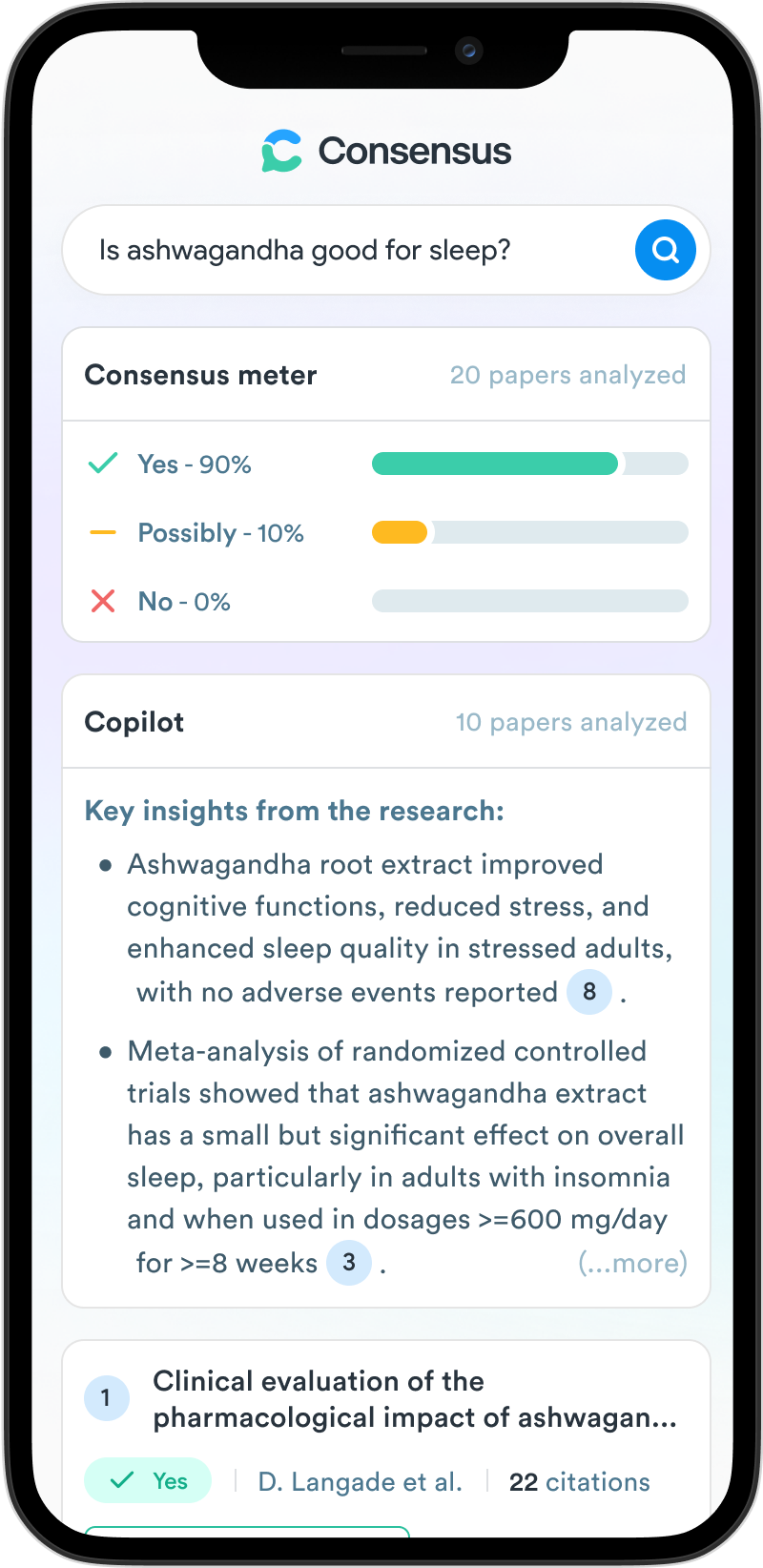

AI research tools, like Consensus, offer immense benefits in scientific research. Here are the general AI-powered tools for academic research.

These AI-powered tools can efficiently summarize PDFs, extract key information, perform AI-powered searches, and much more. Some are even working towards letting you add your own database of files to ask questions of.

Tools like scite even analyze citations in depth, while AI models like ChatGPT elicit new perspectives.

The result? The research process, previously a grueling endeavor, becomes significantly streamlined, offering you time for deeper exploration and understanding. Say goodbye to traditional struggles, and hello to your new AI research assistant!

- Consensus – https://consensus.app/

- Iris AI – https://iris.ai/

- Research Buddy – https://researchbuddy.app/

- Mirror Think – https://mirrorthink.ai

AI for reading peer-reviewed papers easily

Using AI tools like Explain Paper and Humata can significantly enhance your engagement with peer-reviewed papers. I always used to skip over the details of papers because I had reached saturation point with the information coming in.

These AI-powered research tools provide succinct summaries, saving you from sifting through extensive PDFs – no more boring nights trying to figure out which papers are the most important ones for you to read!

They not only facilitate efficient literature reviews by presenting key information, but also find overlooked insights.

With AI, deciphering complex citations and accelerating research has never been easier.

- Aetherbrain – https://aetherbrain.ai

- Explain Paper – https://www.explainpaper.com

- Chat PDF – https://www.chatpdf.com

- Humata – https://www.humata.ai/

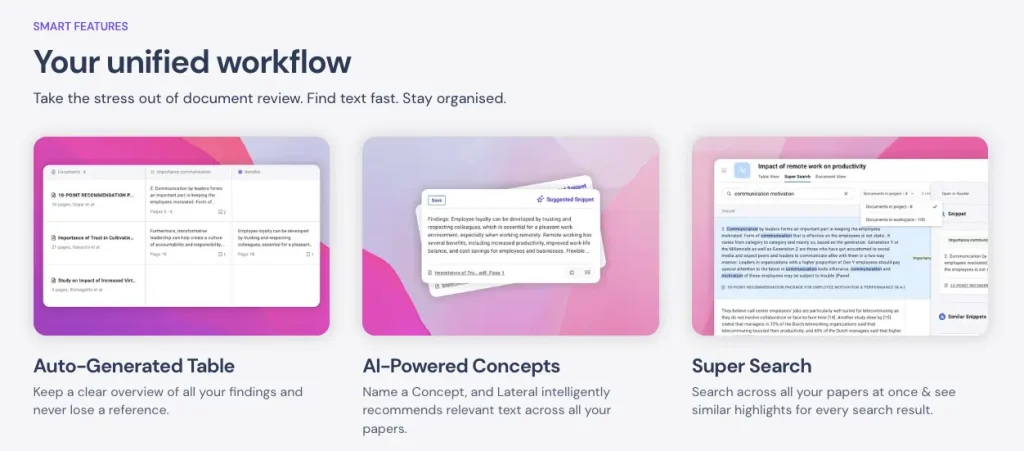

- Lateral AI – https://www.lateral.io/

- Paper Brain – https://www.paperbrain.study/

- Scholarcy – https://www.scholarcy.com/

- SciSpace Copilot – https://typeset.io/

- Unriddle – https://www.unriddle.ai/

- Sharly.ai – https://www.sharly.ai/

- Open Read – https://www.openread.academy

AI for scientific writing and research papers

In the ever-evolving realm of academic research, AI tools are increasingly taking center stage.

Enter Paper Wizard, Jenni AI, and Wisio – these groundbreaking platforms are set to revolutionize the way we approach scientific writing.

Together, these AI tools are pioneering a new era of efficient, streamlined scientific writing.

- Jenni AI – https://jenni.ai/ (20% off with code ANDY20)

- Yomu – https://www.yomu.ai

- Wisio – https://www.wisio.app

AI academic editing tools

In the realm of scientific writing and editing, artificial intelligence (AI) tools are making a world of difference, offering precision and efficiency like never before. Consider tools such as PaperPal, Writefull, and Trinka.

Together, these tools usher in a new era of scientific writing, where AI is your dedicated partner in the quest for impeccable composition.

- PaperPal – https://paperpal.com/

- Writefull – https://www.writefull.com/

- Trinka – https://www.trinka.ai/

AI tools for grant writing

In the challenging realm of science grant writing, two innovative AI tools are making waves: Granted AI and Grantable.

These platforms are game-changers, leveraging the power of artificial intelligence to streamline and enhance the grant application process.

Granted AI uses AI algorithms to simplify the process of finding, applying for, and managing grants. Meanwhile, Grantable offers a platform that automates and organizes grant application processes, making it easier than ever to secure funding.

Together, these tools are transforming the way we approach grant writing, using the power of AI to turn a complex, often arduous task into a more manageable, efficient, and successful endeavor.

- Granted AI – https://grantedai.com/

- Grantable – https://grantable.co/

Best free AI research tools

There are many different tools emerging online that help researchers streamline their research processes. Convenience doesn’t need to come at a massive cost and break the bank.

The best free ones at time of writing are:

- Elicit – https://elicit.org

- Connected Papers – https://www.connectedpapers.com/

- Litmaps – https://www.litmaps.com (10% off Pro subscription using the code “STAPLETON”)

- Consensus – https://consensus.app/

Wrapping up

The integration of artificial intelligence in the world of academic research is nothing short of revolutionary.

With the array of AI tools we’ve explored today – from literature search and mapping, literature review and reading peer-reviewed papers to scientific writing, academic editing and grant writing – the research landscape has been significantly transformed.

The advantages that AI-powered research tools bring to the table – efficiency, precision, time saving, and a more streamlined process – cannot be overstated.

These AI research tools aren’t just about convenience; they are transforming the way we conduct and comprehend research.

They liberate researchers from the clutches of tedium and overwhelm, leaving more room for deep exploration, innovative thinking, and in-depth comprehension.

Whether you’re an experienced academic researcher or a student just starting out, these tools provide indispensable aid in your research journey.

And with a suite of free AI tools also available, there is no reason not to explore and embrace this AI revolution in academic research.

We are on the precipice of a new era of academic research, one where AI and human ingenuity work in tandem for richer, more profound scientific exploration. The future of research is here, and it is smart, efficient, and AI-powered.

Before we get too excited however, let us remember that AI tools are meant to be our assistants, not our masters. As we engage with these advanced technologies, let’s not lose sight of the human intellect, intuition, and imagination that form the heart of all meaningful research. Happy researching!

Thank you to Ivan Aguilar – Ph.D. Student at SFU (Simon Fraser University), for starting this list for me!

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of universities. Although he had secured funding for his own research, he left academia to help others through his YouTube channel, which is all about the inner workings of academia and how to make it work for you.

Thank you for visiting Academia Insider.

We are here to help you navigate academia as painlessly as possible. We are supported by our readers, and by visiting you are helping us earn a small amount through ads and affiliate revenue - Thank you!

2024 © Academia Insider

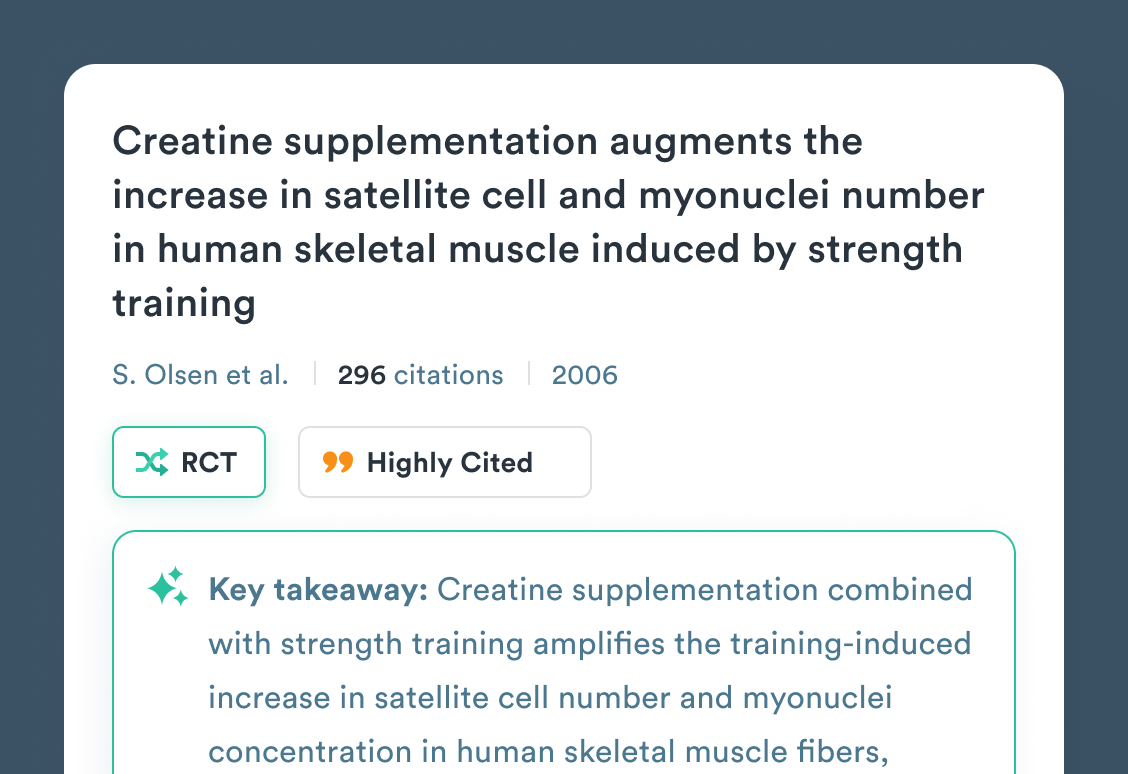

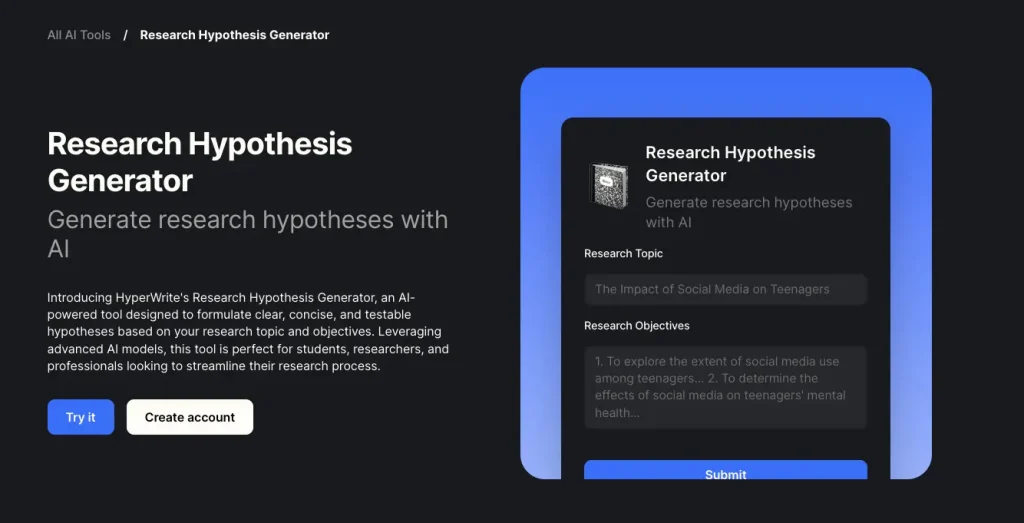

Analyze research papers at superhuman speed

Search for research papers, get one sentence abstract summaries, select relevant papers and search for more like them, extract details from papers into an organized table.

Find themes and concepts across many papers


Tons of features to speed up your research

- Upload your own PDFs

- Orient yourself with a quick summary

- View the sources for every answer

- Ask questions of your papers

Common questions. Great answers.

How do researchers use Elicit?

Over 2 million researchers have used Elicit. Researchers commonly use Elicit to:

- Speed up literature review

- Find papers they couldn’t find elsewhere

- Automate systematic reviews and meta-analyses

- Learn about a new domain

Elicit tends to work best for empirical domains that involve experiments and concrete results. This type of research is common in biomedicine and machine learning.

What is Elicit not a good fit for?

Elicit does not currently answer questions or surface information that is not written about in an academic paper. It tends to work less well for identifying facts (e.g. "How many cars were sold in Malaysia last year?") and in theoretical or non-empirical domains.

What types of data can Elicit search over?

Elicit searches across 125 million academic papers from the Semantic Scholar corpus, which covers all academic disciplines. When you extract data from papers in Elicit, Elicit will use the full text if available or the abstract if not.

How accurate are the answers in Elicit?

A good rule of thumb is to assume that around 90% of the information you see in Elicit is accurate. While we do our best to increase accuracy without skyrocketing costs, it’s very important for you to check the work in Elicit closely. We try to make this easier for you by identifying all of the sources for information generated with language models.
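The arithmetic shows why checking matters even at roughly 90% accuracy. The sketch below makes a simplifying assumption that is not from Elicit: the 90% figure applies independently to each extracted cell. The table size is hypothetical.

```python
def expected_errors(per_item_accuracy: float, n_items: int) -> float:
    """Expected number of incorrect items among n independent items."""
    return (1 - per_item_accuracy) * n_items


def prob_all_correct(per_item_accuracy: float, n_items: int) -> float:
    """Chance that every one of n independent items is correct."""
    return per_item_accuracy ** n_items


# Hypothetical extraction table: 20 papers x 5 columns = 100 cells
cells = 20 * 5
print(f"Expected wrong cells: {expected_errors(0.9, cells):.0f}")
print(f"Chance a 10-cell row is fully correct: {prob_all_correct(0.9, 10):.2f}")
```

Under that assumption, a 100-cell extraction table would contain about 10 wrong cells. A single 10-cell row would have only about a 35% chance of being entirely correct.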

How can you get in contact with the team?

You can email us or post in our Slack community! We log and incorporate all user comments, and will do our best to reply to every inquiry as soon as possible.

What happens to papers uploaded to Elicit?

When you upload papers to analyze in Elicit, those papers will remain private to you and will not be shared with anyone else.

How accurate is Elicit?

We work toward accuracy by:

- Training our models on specific tasks

- Searching over academic papers

- Making it easy to double-check answers

A free, AI-powered research tool for scientific literature


New & Improved API for Developers

Introducing Semantic Reader in beta.

What is Semantic Scholar?

Semantic Scholar is a free, AI-powered research tool for scientific literature, based at Ai2.
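The page mentions an API for developers. The snippet below is a hedged sketch that builds a request to the Semantic Scholar Graph API paper-search endpoint (`/graph/v1/paper/search`, with `query`, `fields` and `limit` parameters). It then parses the JSON response, assuming a `data` list of paper objects. Check both the endpoint and the response shape against the official documentation before relying on them. The helper names and the sample response used in testing are hypothetical.

```python
import json
from typing import List, Optional, Tuple
from urllib.parse import urlencode

# Paper-search endpoint of the Semantic Scholar Graph API (verify against the official docs)
BASE_URL = "https://api.semanticscholar.org/graph/v1/paper/search"


def build_search_url(query: str, fields: List[str], limit: int = 10) -> str:
    """Build a search URL; `fields` selects which paper attributes are returned."""
    params = {"query": query, "fields": ",".join(fields), "limit": limit}
    return f"{BASE_URL}?{urlencode(params)}"


def parse_titles(response_body: str) -> List[Tuple[str, Optional[int]]]:
    """Extract (title, year) pairs from the `data` list of a search response."""
    payload = json.loads(response_body)
    return [(paper.get("title", ""), paper.get("year")) for paper in payload.get("data", [])]


url = build_search_url("retrieval augmented generation", ["title", "year"], limit=5)
print(url)

# Sending the request needs network access, e.g.:
#   from urllib.request import urlopen
#   with urlopen(url) as resp:
#       print(parse_titles(resp.read().decode("utf-8")))
```

Keeping URL building and parsing separate from the network call means both can be tested offline.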


Top AI Tools for Literature Review

As researchers, one of the most important steps in conducting a comprehensive study is to perform a literature review. Some of the common challenges researchers face include the overwhelming volume of literature, difficulty in identifying relevant studies, synthesizing findings, and keeping up with the latest research. Fortunately, AI tools for literature reviews are helping researchers overcome these challenges by providing efficient and effective ways to search, filter, and analyze literature.

In this blog post, we’ll explore some of the top AI tools for literature review and how they can help streamline the research process.


Top five AI tools for literature review

Conducting a literature review is a long and arduous task in which researchers have to go closely through vast information sources. However, with these five AI literature review tools, sifting through large volumes of information becomes easy.

R Discovery

R Discovery is one of the largest scholarly content repositories, giving you access to 250M+ research papers. R Discovery accelerates your research discovery journey with the latest and most relevant content in your area of interest. Below are a few benefits of R Discovery tailored to students and researchers to enhance their research finding and reading experience:

- Personalized Research Reading Feeds – R Discovery curates an academic reading library based on the user’s interests. It provides personalized reading recommendations with daily alerts on top papers, ensuring that users are always updated with the latest and most relevant research in their fields.

- Multiple reading lists – You can create and manage separate reading lists for different literature reviews you’re conducting, keeping your research organized.

- Multilingual & Full-Text Audio Features – R Discovery offers audio versions of research abstracts or full-text articles in 30+ languages. This feature is particularly beneficial for non-native English speakers, allowing them to listen to or read research papers in their preferred language, thus enhancing accessibility and comprehension.

- Smart Research Alerts – The app sends targeted research alerts and notifications to users based on their reading habits and preferences. Users receive no more than three research paper notifications per day to avoid information overload. The notifications include curated lists of top research papers, updates from preferred journals, and alerts on full-text articles available for reading.

- Integration with Reference Managers – The platform offers auto-sync capabilities with reference managers like Zotero and Mendeley, ensuring that users’ libraries stay up to date with the papers they save or remove.

Mendeley

Mendeley is a comprehensive reference management software that serves as an essential tool for researchers, academics, and students engaged in literature review and research management. Founded in 2007 by PhD students and acquired by Elsevier in 2013, Mendeley has evolved into a multifaceted platform that facilitates the organization, sharing, and discovery of research papers.

Below are the detailed features of this AI tool for literature reviews:

- Reference Management – Mendeley allows users to store, organize, and search all their references from a single library. This feature simplifies the process of managing a vast amount of literature, making it easier to access and cite these references in future research.

- Viewing and Annotation – Users can open PDFs directly within Mendeley’s PDF viewer, where they can add highlights and notes to the document. These annotations are stored in the user’s Mendeley account, and a new PDF file containing all annotations can be exported, facilitating collaborative review and personal notetaking.

- Collaboration and Sharing – Mendeley supports collaboration through the creation of private groups, allowing users to share references and annotated documents with co-workers or research team members. This feature enhances the collaborative aspect of literature review by enabling shared access to key resources.

- Literature Search and Discovery – While a dedicated literature search feature was removed from the latest version of Mendeley, the platform still offers capabilities for discovering relevant research. Users can import references from other sources and utilize Mendeley’s academic social network to find and share literature.

- Citation and Bibliography Generation – Mendeley simplifies the citation process with Mendeley Cite, an add-in for Microsoft Word that automates the generation of citations and bibliographies in various citation styles. This feature significantly reduces the time and effort required to accurately cite sources during a literature review.

Zotero

Zotero is a free, open-source, easy-to-use reference management tool designed to assist with the collection, organization, citation, and sharing of research sources. It serves as a personal research assistant for students, researchers, and academics, helping them manage their literature review process efficiently.

- Reference Management – Zotero allows users to collect citations from various sources, including books, articles, media, webpages, and more. It provides a centralized library where users can organize these references into collections, tag them with keywords, and create saved searches that automatically populate with relevant materials.

- PDF Viewing and Annotation – Zotero has a built-in PDF viewer that enables users to highlight text, add sticky notes, and take snapshots of images or charts within PDFs. Annotations made in the PDF viewer are saved within Zotero and can be extracted as notes, making them fully searchable and easier to organize.

- Collaboration and Sharing – Zotero’s Groups feature allows users to collaborate with other Zotero users through shared libraries. Users can create private, public closed, or public open groups to work on collaborative research projects, distribute course materials, or build collaborative bibliographies.

- Citation and Bibliography Generation – Zotero integrates with word processors like Microsoft Word, LibreOffice, and Google Docs to insert citations and bibliographies directly into documents. It supports over 10,000 citation styles, allowing users to format their work to match any style guide or publication.

Scholarcy

Scholarcy is an innovative tool designed to assist researchers, students, and academics in managing the often-overwhelming task of conducting literature reviews. It leverages artificial intelligence to automate the extraction of key information from academic papers, creating structured summaries that make it easier to evaluate and understand research articles. Below are the detailed features of Scholarcy that are particularly useful for conducting literature reviews:

- Flashcard Summaries – Scholarcy generates interactive summary flashcards from research papers, book chapters, and other documents. These flashcards highlight key information, providing a compact and easy-to-read overview of the text. This feature is perfect for skim reading or getting to the key points of an article before reading it in full.

- Smart Highlighting and Analysis – The tool guides users to important sections of text and helps interpret them through smart highlighting and analysis. Scholarcy identifies factual statements and findings, highlighting them in different colors (blue for factual statements and orange for findings) to facilitate quick understanding of the content.

- Access to Full Text and Cited Papers – Scholarcy provides convenient access to the full text of articles and cited papers. It uses icons to link directly to the full text and to services like LibKey, which provides access via institutional subscriptions. Additionally, clicking on the Scholarcy icon shows the key findings from cited articles, offering a quick overview of the citation context.

- Literature Discovery and Screening – Scholarcy aids in the discovery and screening of new literature. It can source, screen, and store academic literature more efficiently than traditional methods. The Scholarcy browser extension provides a detailed Synopsis and Highlights for a more comprehensive screening than an abstract alone can offer. This process can take as little as five minutes, allowing readers to absorb the underlying points of the literature quickly.

- Reference Management Integration – Scholarcy allows for the export of flashcards to reference management software like Zotero. This integration enables users to see not only the reference of the article in their Zotero library but also the key highlights and structured summaries generated by Scholarcy.

Unpaywall

Offering open access to scholarly articles, Unpaywall is a free database built from over 50,000 publishers and repositories globally. It also leverages open data sources like PubMed Central, DOAJ, Crossref, and DataCite. Major databases including Dimensions, Scopus, and Web of Science have incorporated Unpaywall. For users with Digital Object Identifiers (DOIs), Unpaywall provides various tools to find open access and full-text articles. Data access is available through the REST API, the R API Wrapper, the Simple Query Tool, or by downloading the entire dataset. Below are the features of this AI tool for literature review:

- Simple Query – The Simple Query Tool offered by Unpaywall helps users determine whether open access (OA) versions exist for a list of articles they are interested in. It is particularly useful for users who have a list of Digital Object Identifiers (DOIs) and want to know whether free, full-text versions of those articles are available through Unpaywall’s extensive database.

- Browser Extension – The Unpaywall browser extension automatically searches for legally available, free versions of scholarly articles as you browse. When you come across a research article online, the extension checks Unpaywall’s database for an open access version. If such a version is available, a green tab appears on the side of your browser, which you can click to access the full text of the article directly. This feature is powered by an index of over 20 million free, legal full-text PDFs, making it a powerful ally in the quest for open access literature.
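Users with a list of DOIs can also script the same kind of check against the Unpaywall REST API, which returns one JSON record per DOI. The sketch assumes the v2 endpoint (`https://api.unpaywall.org/v2/{doi}?email=...`) and the `is_oa` and `best_oa_location` fields of its record. The helper names, the email address and the canned records used for the offline demo are hypothetical.

```python
import json
from typing import Callable, Dict, List, Optional
from urllib.parse import quote

# Unpaywall REST API v2 root; requests identify the caller with an email parameter
API_ROOT = "https://api.unpaywall.org/v2/"


def build_lookup_url(doi: str, email: str) -> str:
    """URL for looking up a single DOI."""
    return f"{API_ROOT}{quote(doi, safe='/')}?email={quote(email)}"


def best_oa_link(record: dict) -> Optional[str]:
    """Return a free full-text link if the record says one exists, else None."""
    if not record.get("is_oa"):
        return None
    location = record.get("best_oa_location") or {}
    return location.get("url_for_pdf") or location.get("url")


def check_dois(dois: List[str], email: str, fetch: Callable[[str], str]) -> Dict[str, Optional[str]]:
    """Batch check in the spirit of the Simple Query Tool.

    `fetch` takes a URL and returns the response body as text, so the
    network call can be swapped out (urllib, requests, or a test stub).
    """
    return {doi: best_oa_link(json.loads(fetch(build_lookup_url(doi, email)))) for doi in dois}


# Offline demo with made-up records (not real Unpaywall data)
FAKE_RECORDS = {
    "10.1234/open": {"is_oa": True, "best_oa_location": {
        "url_for_pdf": "https://example.org/paper.pdf", "url": "https://example.org/paper"}},
    "10.1234/closed": {"is_oa": False, "best_oa_location": None},
}


def fake_fetch(url: str) -> str:
    """Stub fetcher: recover the DOI from the URL and return its canned record."""
    doi = url[len(API_ROOT):].split("?")[0]
    return json.dumps(FAKE_RECORDS[doi])


print(check_dois(["10.1234/open", "10.1234/closed"], "you@example.org", fake_fetch))
```

Preferring `url_for_pdf` over `url` is one design choice. It favours direct PDF links and falls back to the landing page when no PDF link is given.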

There are many AI tools for literature review available for your assistance. However, the best tool for you depends on your specific needs. Do you prioritize comprehensive search and full-text access? Easy organization and citation management? Or perhaps AI-powered summaries to grasp key findings quickly?

Evaluate the functionalities of each tool and choose the one that best complements your research workflow. With the right AI tool, your literature review can become a breeze.

R Discovery is a literature search and research reading platform that accelerates your research discovery journey by keeping you updated on the latest, most relevant scholarly content. With 250M+ research articles sourced from trusted aggregators like Crossref, Unpaywall, PubMed, PubMed Central, OpenAlex and top publishing houses like Springer Nature, JAMA, IOP, Taylor & Francis, NEJM, BMJ, Karger, SAGE, Emerald Publishing and more, R Discovery puts a world of research at your fingertips.

Try R Discovery Prime FREE for 1 week or upgrade at just US$72 a year to access premium features that let you listen to research on the go, read in your language, collaborate with peers, auto sync with reference managers, and much more. Choose a simpler, smarter way to find and read research – download the app and start your free 7-day trial today!


RAxter is now Enago Read! Enjoy the same licensing and pricing with enhanced capabilities. No action required for existing customers.

Your all in one AI-powered Reading Assistant

A Reading Space to Ideate, Create Knowledge, and Collaborate on Your Research

- Smartly organize your research

- Receive recommendations that cannot be ignored

- Collaborate with your team to read, discuss, and share knowledge

From Surface-Level Exploration to Critical Reading - All in one Place!

Fine-tune your literature search.

Our AI-powered reading assistant saves time spent on the exploration of relevant resources and allows you to focus more on reading.

Select phrases or specific sections and explore more research papers related to the core aspects of your selections. Pin the useful ones for future references.

Our platform brings you the latest research related to your research and project work.

Speed up your literature review

Quickly generate a summary of key sections of any paper with our summarizer.

Make informed decisions about which papers are relevant, and where to invest your time in further reading.

Get key insights from the paper, quickly comprehend the paper’s unique approach, and recall the key points.

Bring order to your research projects

Organize your reading lists into different projects and maintain the context of your research.

Quickly sort items into collections and tag or filter them according to keywords and color codes.

Experience the power of sharing by finding all the shared literature in one place.

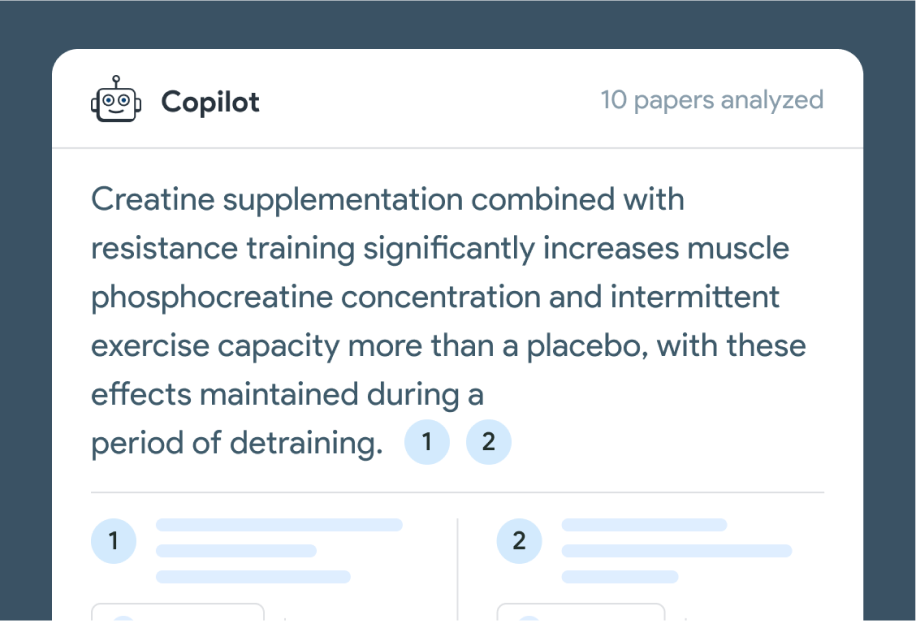

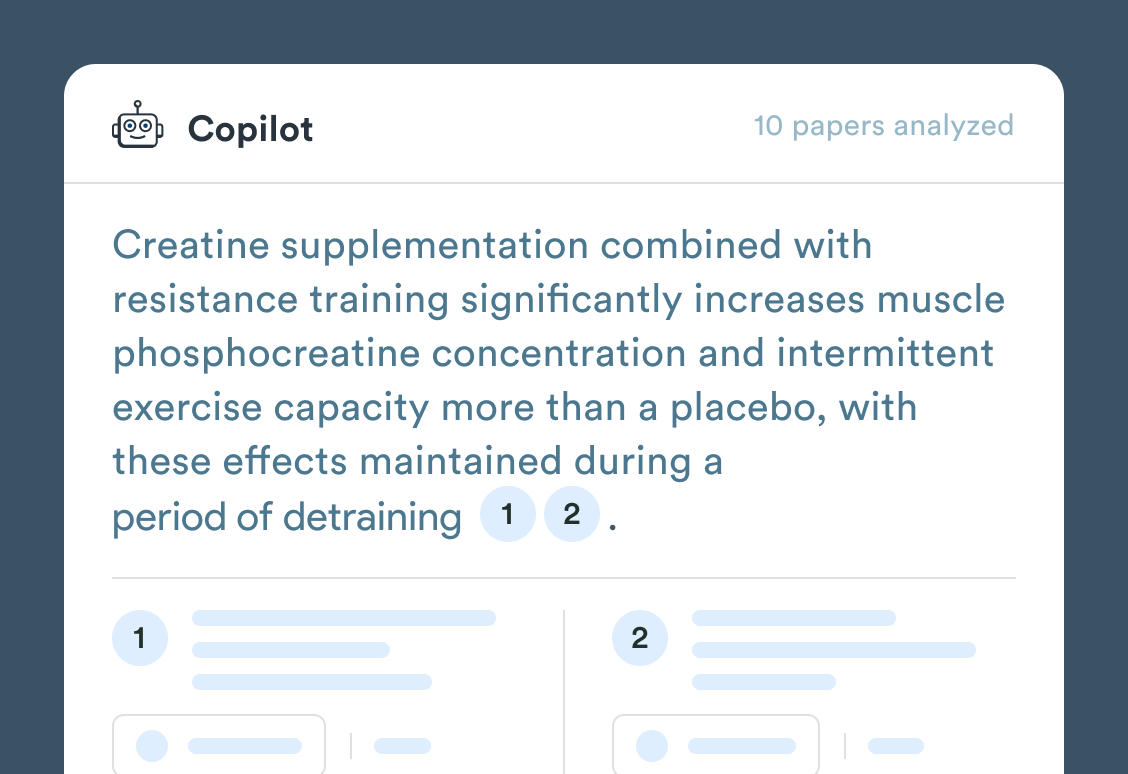

Decode papers effortlessly for faster comprehension

Highlight what is important so that you can retrieve it faster next time.

Select any text in the paper and ask Copilot to explain it to help you get a deeper understanding.

Ask questions and follow-ups from AI-powered Copilot.

Collaborate to read with your team, professors, or students

Share and discuss literature and drafts with your study group, colleagues, experts, and advisors. Recommend valuable resources and help each other for better understanding.

Work in shared projects efficiently and improve visibility within your study group or lab members.

Keep track of your team's progress by staying constantly connected, and engage in active knowledge transfer by requesting full access to relevant papers and drafts.

Find papers from across the world's largest repositories


Privacy and security of your research data are integral to our mission.

Everything you add or create on Enago Read is private by default. It is visible if and when you share it with other users.

You can put a Creative Commons license on original drafts to protect your IP. For shared files, Enago Read always maintains a copy in case of deletion by collaborators or revoked access.

We use state-of-the-art security protocols and algorithms, including MD5 hashing, SSL, and HTTPS, to secure your data.


LitLLM: A Toolkit for Scientific Literature Review

Conducting literature reviews for scientific papers is essential for understanding research, its limitations, and building on existing work. It is a tedious task which makes an automatic literature review generator appealing. Unfortunately, many existing works that generate such reviews using Large Language Models (LLMs) have significant limitations. They tend to hallucinate—generate non-factual information—and ignore the latest research they have not been trained on. To address these limitations, we propose a toolkit that operates on Retrieval Augmented Generation (RAG) principles, specialized prompting and instructing techniques with the help of LLMs. Our system first initiates a web search to retrieve relevant papers by summarizing user-provided abstracts into keywords using an off-the-shelf LLM. Authors can enhance the search by supplementing it with relevant papers or keywords, contributing to a tailored retrieval process. Second, the system re-ranks the retrieved papers based on the user-provided abstract. Finally, the related work section is generated based on the re-ranked results and the abstract. There is a substantial reduction in time and effort for literature review compared to traditional methods, establishing our toolkit as an efficient alternative. Our open-source toolkit is accessible at https://github.com/shubhamagarwal92/LitLLM and Huggingface space ( https://huggingface.co/spaces/shubhamagarwal92/LitLLM ) with the video demo at https://youtu.be/E2ggOZBAFw0
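The abstract describes a staged pipeline: summarize the abstract into keywords, search, re-rank the results against the abstract, then generate. A toy version can illustrate it. This is not LitLLM's code, and each component is a plainly swapped-in stand-in: word-frequency counting replaces the LLM keyword step, and filtering a small in-memory corpus replaces web search. Jaccard word overlap replaces the re-ranker, and the final LLM call is left out, so the last function only builds the prompt that would be sent. All function names and the sample corpus are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List

STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "for", "on", "with",
             "we", "our", "is", "are", "that", "this", "by"}


def tokens(text: str) -> List[str]:
    """Lowercase word tokens with common stopwords removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def extract_keywords(abstract: str, k: int = 3) -> List[str]:
    """Stand-in for the LLM keyword step: the k most frequent content words."""
    return [word for word, _ in Counter(tokens(abstract)).most_common(k)]


def search(keywords: List[str], corpus: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Stand-in for web search: keep papers whose abstract shares a keyword."""
    wanted = set(keywords)
    return [p for p in corpus if wanted & set(tokens(p["abstract"]))]


def rerank(abstract: str, papers: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Stand-in re-ranker: Jaccard word overlap with the user's abstract."""
    query = set(tokens(abstract))

    def score(paper: Dict[str, str]) -> float:
        words = set(tokens(paper["abstract"]))
        return len(query & words) / len(query | words)

    return sorted(papers, key=score, reverse=True)


def build_prompt(abstract: str, ranked: List[Dict[str, str]], top_n: int = 2) -> str:
    """Input for the final generation step: the abstract plus top-ranked papers."""
    refs = "\n".join(f"[{i}] {p['title']}: {p['abstract']}"
                     for i, p in enumerate(ranked[:top_n], start=1))
    return f"Write a related work section for this abstract:\n{abstract}\n\nCite only these papers:\n{refs}"


corpus = [
    {"title": "Paper A", "abstract": "Retrieval augmented generation reduces hallucination in language models."},
    {"title": "Paper B", "abstract": "A survey of protein folding methods."},
    {"title": "Paper C", "abstract": "Language models for literature review generation."},
]
abstract = ("We use retrieval augmented generation with language models to write "
            "literature review sections. Language models hallucinate without retrieval.")

keywords = extract_keywords(abstract)
ranked = rerank(abstract, search(keywords, corpus))
print(keywords)
print([p["title"] for p in ranked])
print(build_prompt(abstract, ranked))
```

Each stage is a separate function, so any stand-in can be replaced by an LLM call or a real search API without touching the others.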

Shubham Agarwal (1,2,3), Issam H. Laradji (1,4), Laurent Charlin (2,3,5), Christopher Pal (1,2,5)

1 ServiceNow Research; 2 Mila - Quebec AI Institute; 3 HEC Montreal, Canada; 4 UBC, Vancouver, Canada; 5 Canada CIFAR AI Chair

1 Introduction

Scientists have long used NLP systems like search engines to find and retrieve relevant papers. Scholarly engines, including Google Scholar, Microsoft Academic Graph, and Semantic Scholar, provide additional tools and structure to help researchers further. Following recent advances in large language models (LLMs), a new set of systems provides even more advanced features. For example, Explainpaper (https://www.explainpaper.com/) helps explain the contents of papers, and Writefull (https://x.writefull.com/) helps with several writing tasks, including abstract and title generation. There are, of course, many other tasks where similar technologies could be helpful.

Systems that help researchers with literature reviews hold promising prospects. The literature review is a difficult task that can be decomposed into several sub-tasks, including retrieving relevant papers and generating a related works section that contextualizes the proposed work compared to the existing literature. It is also a task where factual correctness is essential. In that sense, it is a challenging task for current LLMs, which are known to hallucinate. Overall, creating tools to help researchers more rapidly identify, summarize and contextualize relevant prior work could significantly help the research community.

Recent works explore the task of literature review in parts or in full. For example, Lu et al. (2020) propose generating the related work section of a paper using its abstract and a list of (relevant) references. Researchers have also looked at the whole task and built systems using LLMs like ChatGPT for literature review (Haman and Školník, 2023; Huang and Tan, 2023). While these LLMs tend to generate high-quality text, they are prone to hallucinations (Athaluri et al., 2023). For example, the Galactica system was developed to reason about scientific knowledge (Taylor et al., 2022). While it outperforms contemporary models on various scientific tasks, it generates made-up content such as inaccurate citations and imaginary papers (see, e.g., "What Meta Learned from Galactica").

As a step forward, we explore retrieval-augmented generation (RAG) to improve factual correctness (Lewis et al., 2020). The idea is to use a retrieval mechanism to obtain a list of relevant existing papers to be cited, which provides contextual knowledge for LLM-based generation.

LitLLM is an interactive tool to help scientists write the literature review or related work section of a scientific paper starting from a user-provided abstract (see Figure 1). The specific objective of this work is to create a system that helps users navigate research papers and write a literature review for a given paper or project. Our main contributions are:

- We provide a system based on a modular pipeline that conducts a literature review based on a user-provided abstract.

- We use Retrieval Augmented Generation (RAG) techniques, with multiple search strategies, to condition the generated related work on factual content and avoid hallucinations.

- We incorporate sentence-based planning to promote controllable generation.

2 Related Work

LLMs have demonstrated significant capabilities in storing factual knowledge and achieving state-of-the-art results when fine-tuned on downstream Natural Language Processing (NLP) tasks (Lewis et al., 2020).

However, they also face challenges such as hallucination, outdated knowledge, and non-transparent, untraceable reasoning processes (Huang et al., 2023; Gao et al., 2023; Li et al., 2024). These limitations have motivated the development of Retrieval Augmented Generation (RAG), which incorporates knowledge from external databases to enhance the accuracy and credibility of the models, particularly for knowledge-intensive tasks (Gao et al., 2023). RAG has emerged as a promising solution to the challenges faced by LLMs: it combines the intrinsic knowledge of LLMs with large, dynamic external repositories (Gao et al., 2023). This approach allows for continuous knowledge updates and the integration of domain-specific information, limiting the effect of outdated knowledge. The proposed work builds on these advances in RAG to provide a more efficient solution for academic writing.

In parallel, there has been notable interest in using Large Language Models (LLMs) for information retrieval and ranking (Zhu et al., 2023). Sun et al. (2023) use generative LLMs such as ChatGPT and GPT-4 for relevance ranking in information retrieval, showing that these models can deliver results competitive with state-of-the-art supervised methods. Pradeep et al. (2023a, b) introduce open-source LLMs for listwise zero-shot reranking, further motivating our use of LLMs for reranking.

The exploration of large language models (LLMs) and their zero-shot abilities has been a significant focus in recent research. For instance, one study investigated using LLMs in recommender systems, demonstrating their promising zero-shot ranking abilities, although they struggled with the order of historical interactions and position bias (Hou et al., 2023). Another study improved the zero-shot learning abilities of LLMs through instruction tuning, which led to substantial improvements in performance on unseen tasks (Wei et al., 2021). A similar approach was taken to enhance the zero-shot reasoning abilities of LLMs, introducing an autonomous agent to instruct the reasoning process and yielding significant performance boosts (Crispino et al., 2023). LLMs have also been applied to natural language generation (NLG) assessment, where comparative assessment was found to be superior to prompt scoring (Liusie et al., 2023). In Open-Domain Question Answering (ODQA), a Self-Prompting framework was proposed to exploit the knowledge stored in LLMs, leading to significant improvements over previous methods (Li et al., 2022). Prompt engineering has been identified as a key technique for enhancing the abilities of LLMs, with various strategies being explored (Shi et al., 2023). (Footnote: This paragraph was generated using our platform, with some minor modifications, based on a slightly different version of our abstract.)

Figure 2 provides an overview of the pipeline. The user provides a draft of the abstract or a research idea. We first use an LLM to summarize the abstract into keywords that can be used as a query for search engines. Optionally, users can provide relevant keywords to improve search results. This query is passed to the search engine, which retrieves relevant papers along with information such as their abstracts and open-access PDF URLs. The retrieved abstracts, together with the original query abstract, are passed to a second LLM, the re-ranker, which produces a listwise ranking of the papers by relevance to the query abstract. The re-ranked abstracts and the original query are finally passed to the LLM generator, which generates the related work section of the paper. Recently, Agarwal et al. (2024) showed that prompting LLMs with sentence plans reduces hallucinations in the generated outputs. These plans specify the number of sentences and which citations appear on each line, giving authors control over the output. We include this sentence-based planning in the LLM generator. In the following, we describe each module in more detail.
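The four-stage flow above (summarize, search, re-rank, generate) can be sketched as a function that takes each stage as a pluggable callable. This is a minimal sketch, not the LitLLM code: `Paper`, `run_pipeline`, and the stage signatures are hypothetical names chosen for illustration. Any real LLM or search client would be passed in for the toy lambdas.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Paper:
    """Hypothetical minimal record for a retrieved paper."""
    title: str
    abstract: str


def run_pipeline(
    abstract: str,
    summarize: Callable[[str], str],
    search: Callable[[str], List[Paper]],
    rerank: Callable[[str, List[Paper]], List[Paper]],
    generate: Callable[[str, List[Paper], Optional[str]], str],
    user_keywords: Optional[str] = None,
    plan: Optional[str] = None,
) -> str:
    """Chain the four stages: summarize -> search -> re-rank -> generate."""
    # 1. An LLM condenses the user's abstract into a keyword query.
    query = summarize(abstract)
    # Optional user keywords are appended to the LLM-generated query.
    if user_keywords:
        query = f"{query} {user_keywords}"
    # 2. The search engine returns candidate papers for the query.
    candidates = search(query)
    # 3. A second LLM re-orders the candidates by relevance to the abstract.
    ranked = rerank(abstract, candidates)
    # 4. The generator writes the related work, optionally following a sentence plan.
    return generate(abstract, ranked, plan)


if __name__ == "__main__":
    # Toy stand-ins for the LLM and search calls, only to show the data flow.
    output = run_pipeline(
        abstract="We study whether captioning and text-to-image models understand each other.",
        summarize=lambda a: "image-text model interaction",
        search=lambda q: [Paper("Paper A", "..."), Paper("Paper B", "...")],
        rerank=lambda a, papers: list(reversed(papers)),
        generate=lambda a, papers, plan: " ".join(p.title for p in papers),
    )
    print(output)  # Paper B Paper A
```

Passing the stages as functions reflects the modularity the authors describe: swapping GPT-4 for an open-source model, or Semantic Scholar for another search engine, only changes the callable you pass in.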

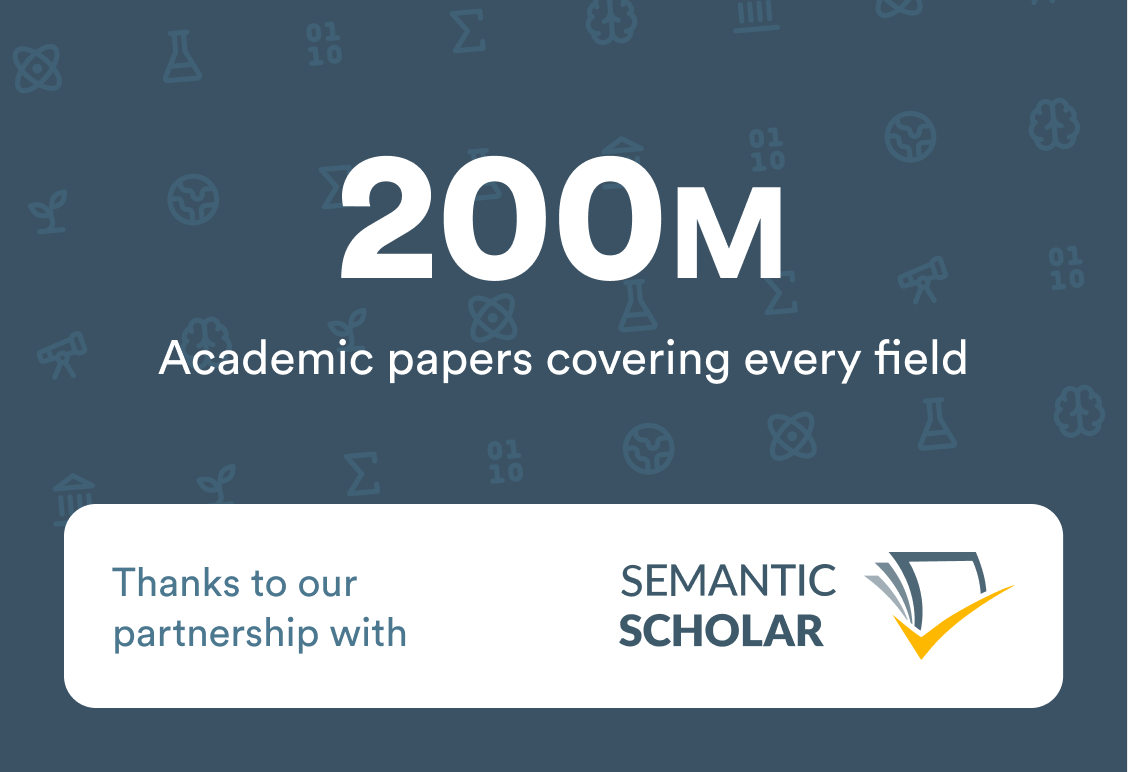

3.1 Paper Retrieval Module

In our toolkit, we retrieve relevant papers using the Semantic Scholar (S2) API. Other platforms could be used, but the S2 Platform is well adapted to this use case. It is a large-scale academic corpus of 200M+ metadata records across multiple research areas, providing information about papers' metadata, authors, paper embeddings, etc. Its Recommendations API also returns papers similar to any seed paper. Figure 3 shows our system's different strategies. We use the following three settings to search for references:

- The user provides an abstract or a research idea (roughly the length of an abstract). We prompt an LLM (see Figure 4) to summarize this abstract into keywords that can be used as a search query with most APIs.

- Users can optionally also provide keywords to improve search results. This is similar in spirit to how researchers search for related work with a search engine. It is particularly useful in interdisciplinary research, when authors want to include the latest research from a particular domain that the abstract does not capture well.

- Lastly, any seed paper the user finds relevant to their idea can be passed to a search engine's Recommendations API to retrieve other closely related papers.
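The keyword-search and seed-paper settings map onto two public Semantic Scholar endpoints: `/graph/v1/paper/search` and `/recommendations/v1/papers/forpaper/{paper_id}`. Below is a stdlib-only sketch of how requests to them might be built. It is not the toolkit's own code. The helper names and the choice of `fields` are assumptions, and the S2 API documentation should be checked for current parameters and rate limits.

```python
import json
import urllib.parse
import urllib.request
from typing import Iterable, Optional

GRAPH_BASE = "https://api.semanticscholar.org/graph/v1"
RECS_BASE = "https://api.semanticscholar.org/recommendations/v1"
# Fields requested for each paper; this particular selection is an assumption.
DEFAULT_FIELDS = ("title", "abstract", "year", "citationCount", "openAccessPdf")


def search_url(query: str, limit: int = 20, fields: Iterable[str] = DEFAULT_FIELDS) -> str:
    """Build a keyword-search URL for the Academic Graph paper-search endpoint."""
    params = urllib.parse.urlencode(
        {"query": query, "limit": limit, "fields": ",".join(fields)}
    )
    return f"{GRAPH_BASE}/paper/search?{params}"


def recommendations_url(
    seed_paper_id: str, limit: int = 20, fields: Iterable[str] = DEFAULT_FIELDS
) -> str:
    """Build a URL asking the Recommendations API for papers similar to one seed paper."""
    params = urllib.parse.urlencode({"limit": limit, "fields": ",".join(fields)})
    # Percent-encode the ID for use in a URL path, leaving ':' intact.
    paper_id = urllib.parse.quote(seed_paper_id, safe=":")
    return f"{RECS_BASE}/papers/forpaper/{paper_id}?{params}"


def fetch_json(url: str, api_key: Optional[str] = None) -> dict:
    """GET a URL and decode its JSON body; the S2 API key header is optional."""
    headers = {"x-api-key": api_key} if api_key else {}
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

For example, `fetch_json(search_url("Multimodal Research: Image-Text Model Interaction", limit=4))` would issue a query like the one in Table 1. The URL builders are kept separate from the network call so they can be checked without hitting the API.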

3.2 Paper Re-Ranking Module

Recent efforts have explored proprietary LLMs for ranking (Sun et al., 2023; Ma et al., 2023) as well as open-source models (Pradeep et al., 2023a, b). These approaches feed a combined list of passages directly to the model and obtain a re-ordered ranking list (Zhang et al., 2023). Typically, a retriever first filters the top-k candidates, which are then re-ranked by an LLM to produce the final list. In our work, we use the instructional permutation generation approach (Sun et al., 2023), in which the model is prompted to generate a permutation of the papers in descending order of relevance to the user-provided abstract. This produces an ordered list of preferences rather than intermediate scores. Figure 5 shows the prompt we use for LLM-based re-ranking.
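Permutation-based re-ranking involves two steps around the LLM call: label each candidate with an identifier, then parse the returned order. Below is a minimal sketch of those steps. The prompt wording is hypothetical (the actual prompt is in Figure 5). The repair policy in `parse_permutation` is an assumption that mirrors a common safeguard: drop repeated or out-of-range identifiers and append any candidate the model omitted.

```python
import re
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def build_rerank_prompt(query_abstract: str, candidates: Sequence[str]) -> str:
    """List candidates as [1], [2], ... and ask for a relevance-ordered permutation."""
    lines = [
        "Rank the following papers by their relevance to the query abstract.",
        f"Query abstract: {query_abstract}",
    ]
    lines += [f"[{i}] {text}" for i, text in enumerate(candidates, start=1)]
    lines.append("Answer only with identifiers in descending order of relevance, e.g. [2] > [1] > [3].")
    return "\n".join(lines)


def parse_permutation(llm_output: str, num_candidates: int) -> List[int]:
    """Convert output such as "[2] > [1] > [3]" into 0-based indices.

    Out-of-range and repeated identifiers are ignored; any candidate the model
    left out is appended in its original order so no paper is lost.
    """
    order: List[int] = []
    for token in re.findall(r"\[(\d+)\]", llm_output):
        idx = int(token) - 1
        if 0 <= idx < num_candidates and idx not in order:
            order.append(idx)
    seen = set(order)
    order += [i for i in range(num_candidates) if i not in seen]
    return order


def apply_permutation(items: Sequence[T], order: List[int]) -> List[T]:
    """Reorder items according to the parsed permutation."""
    return [items[i] for i in order]
```

For instance, `parse_permutation("[3] > [1] > [3] > [7]", 4)` returns `[2, 0, 1, 3]`: the duplicate `[3]` and the nonexistent `[7]` are discarded, and candidates 2 and 4 are appended at the end.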

3.3 Summary Generation Module

We explore two strategies for generation: (1) zero-shot generation and (2) plan-based generation, which relies on sentence plans for controllable generation. Both are described below.

3.3.1 Zero-shot generation

While LLMs can potentially recall relevant papers from their parametric memory and training data, they are prone to hallucinating and generating non-factual content. Retrieval augmented generation, first introduced by Lewis et al. (2020) for knowledge-intensive tasks, addresses this by augmenting the generation model with an information retrieval module. RAG principles have subsequently been used for dialogue generation in task-oriented settings (Thulke et al., 2021), code generation (Liu et al., 2020; Parvez et al., 2021), and product review generation (Kim et al., 2020). RAG has been shown to substantially reduce hallucinations in generated output (Gao et al., 2023; Tonmoy et al., 2024).

Our work builds on RAG principles: we retrieve the relevant papers based on the query and add them as context for generating the literature review. This grounds the system in the retrieved information and lets it draw on the latest research, beyond the parametric knowledge the LLM acquired from its training data. Figure 6 shows our system's prompt for Retrieval Augmented Generation (RAG).
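In RAG for related work generation, the retrieved papers are placed in the prompt under numbered identifiers that the model can cite. Below is a minimal sketch of that assembly, plus a simple check that flags citation numbers with no corresponding retrieved paper, which is one cheap signal of hallucinated references. The prompt text and both function names are hypothetical and do not reproduce the prompt in Figure 6.

```python
import re
from typing import Sequence, Set, Tuple


def build_rag_prompt(query_abstract: str, papers: Sequence[Tuple[str, str]]) -> str:
    """Number the re-ranked (title, abstract) pairs so the model can cite them as [k]."""
    context = "\n\n".join(
        f"[{i}] {title}\n{abstract}" for i, (title, abstract) in enumerate(papers, start=1)
    )
    return (
        "Write the related work section for the main paper below. Use only the "
        "numbered reference papers and cite them as [1], [2], ...\n\n"
        f"Main paper abstract:\n{query_abstract}\n\n"
        f"Reference papers:\n{context}"
    )


def unknown_citations(generated: str, num_papers: int) -> Set[int]:
    """Return citation numbers in the output that match no retrieved paper."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", generated)}
    return {n for n in cited if not 1 <= n <= num_papers}
```

An empty result from `unknown_citations` only means every citation points at a retrieved paper. It does not show that the attributed claims are correct, so a manual check is still needed.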

3.3.2 Plan based generation

To get the best results from LLMs, recent research has focused on designing better prompts (prompt engineering). These techniques include zero-shot chain-of-thought prompting (Kojima et al., 2022; Zhou et al., 2022), few-shot prompting (Brown et al., 2020), few-shot chain-of-thought prompting (Wei et al., 2022), and in-context prompting (Li and Liang, 2021; Qin and Eisner, 2021). However, the long context of our problem (the query paper plus multiple relevant papers) hinders the application of these techniques for response generation.

We use sentence plan-based prompting, drawing on traditional modular Natural Language Generation (NLG) pipelines with intermediate steps of sentence planning and surface realization (Reiter and Dale, 1997; Stent et al., 2004). These plans provide a sentence structure for the expected output, which guides the LLM to generate the literature review in a controllable fashion, as demonstrated in concurrent work (Agarwal et al., 2024). Figure 7 (in the Appendix) shows the prompt for plan-based generation, with an example template:

Please generate {num_sentences} sentences in {num_words} words. Cite {cite_x} at line {line_x}. Cite {cite_y} at line {line_y}.
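Filling this template is mechanical once you know the sentence count and which references go on which line. Below is a small sketch that renders it from a line-to-citations mapping. The function name and the validation rule (a citation line must fall within the plan) are illustrative assumptions. The example mapping reproduces the plan used in Table 1 (5 sentences; [1] on line 2; [2] and [3] on line 3; [4] on line 5).

```python
from typing import Dict, List, Optional


def format_plan(
    num_sentences: int,
    citations_by_line: Dict[int, List[int]],
    num_words: Optional[int] = None,
) -> str:
    """Fill the sentence-plan template: sentence count, optional word budget, citations per line."""
    head = f"Please generate {num_sentences} sentences"
    head += f" in {num_words} words." if num_words else "."
    parts = [head]
    for line in sorted(citations_by_line):
        # A citation can only be placed on a line that the plan actually contains.
        if not 1 <= line <= num_sentences:
            raise ValueError(f"line {line} is outside 1..{num_sentences}")
        refs = ", ".join(f"[{c}]" for c in citations_by_line[line])
        parts.append(f"Cite {refs} at line {line}.")
    return " ".join(parts)


if __name__ == "__main__":
    print(format_plan(5, {2: [1], 3: [2, 3], 5: [4]}))
    # Please generate 5 sentences. Cite [1] at line 2. Cite [2], [3] at line 3. Cite [4] at line 5.
```

Treating the word budget as optional is a design choice here. The Table 1 plan specifies only the sentence count.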

4 Implementation Details

We build our system using Gradio (Abid et al., 2019), which makes it quick to build system demos. Our user interface is also available as a Hugging Face Space (https://huggingface.co/spaces/shubhamagarwal92/LitLLM). We query the Semantic Scholar API, available through the Semantic Scholar Open Data Platform (Lo et al., 2020; Kinney et al., 2023), to search for relevant papers. Specifically, we use the Academic Graph (https://api.semanticscholar.org/api-docs/graph) and Recommendations (https://api.semanticscholar.org/api-docs/recommendations) API endpoints. In this work, we use the OpenAI API (https://platform.openai.com/docs/guides/gpt) to generate results with the GPT-3.5-turbo and GPT-4 models. However, our modular pipeline allows using any LLM (proprietary or open-source) for the different components. We also let the end user sort the retrieved papers by relevance (the default S2 ordering), citation count, or year.
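Below is a minimal sketch of the three sort options (relevance, citation count, year), assuming "relevance" simply means keeping the order in which the search API returned the papers. The `RetrievedPaper` record and `sort_papers` function are hypothetical. Python's `sorted` is stable, so papers with equal citation counts or years keep their relevance order.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RetrievedPaper:
    """Hypothetical record holding the fields needed for sorting."""
    title: str
    year: Optional[int]
    citation_count: Optional[int]


def sort_papers(papers: List[RetrievedPaper], by: str = "relevance") -> List[RetrievedPaper]:
    """Re-order retrieved papers; 'relevance' keeps the order the search API returned."""
    if by == "relevance":
        return list(papers)
    if by == "citations":
        # Missing counts are treated as 0; ties keep their original (relevance) order.
        return sorted(papers, key=lambda p: p.citation_count or 0, reverse=True)
    if by == "year":
        # Newest first; missing years are treated as 0 and therefore come last.
        return sorted(papers, key=lambda p: p.year or 0, reverse=True)
    raise ValueError(f"unknown sort key: {by!r}")


if __name__ == "__main__":
    # The four papers retrieved in Table 1, in their retrieved order.
    retrieved = [
        RetrievedPaper("CoCa", 2022, 702),
        RetrievedPaper("MAMO", 2022, 0),
        RetrievedPaper("Dynamic Modality Interaction Modeling", 2021, 88),
        RetrievedPaper("WIT", 2021, 185),
    ]
    print([p.title for p in sort_papers(retrieved, by="citations")])
```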

5 User Experience

As a preliminary study, we gave 5 researchers access to our user interface. They worked through the demo to write literature reviews and validate the system's efficacy. We also provide an example abstract in the demo for a quick start. In particular, users found zero-shot generation more informative about the literature in general, and plan-based generation more accessible and better tailored to their research paper, as is also evident in our demo video (https://youtu.be/E2ggOZBAFw0). Table 1 (in the Appendix) shows the generated related work for a recent, randomly chosen paper (Li et al., 2023), with the number of cited papers set to 4. Our system generated an informative query, "Multimodal Research: Image-Text Model Interaction", and retrieved relevant papers. The top recommended paper was also cited in the original paper. While zero-shot generation provides valuable insights into existing literature, plan-based generation produces a more succinct and readily usable literature review.

6 Conclusion and Future Work

In this work, we introduce and describe LitLLM, a system that can generate literature reviews in a few clicks from an abstract using off-the-shelf LLMs. This LLM-powered toolkit relies on RAG with a re-ranking strategy to generate a literature review with attribution. Our auxiliary tool allows researchers to actively search for related work based on a preliminary research idea, a research proposal, or even a full abstract. We present a modular pipeline that can be easily adapted to the next generation of LLMs and to other domains, such as news, by changing the source of retrieved information.

Given the growing impact of LLM-based writing assistants, we are optimistic that our system may help researchers search for relevant papers and improve the quality of automatically generated related work sections. While our system shows promise as a research assistant, we believe its use should be disclosed to readers, and authors should take care to check for and remove any hallucinations.

In the future, we would also like to explore academic search through multiple APIs, such as Google Scholar. This work only considered the abstracts of the query paper and the retrieved papers, which creates a bottleneck for effective literature review generation. With the advent of longer-context LLMs, we envision our system ingesting whole papers (potentially using an efficient LLM-based PDF parser) to provide more relevant background on related research. We consider our approach an initial step toward intelligent research assistants that could help academics in an interactive setting (Dwivedi-Yu et al., 2022).

- Abid et al. (2019) Abubakar Abid, Ali Abdalla, Ali Abid, Dawood Khan, Abdulrahman Alfozan, and James Zou. 2019. Gradio: Hassle-free sharing and testing of ml models in the wild. arXiv preprint arXiv:1906.02569.

- Agarwal et al. (2024) Shubham Agarwal, Issam Laradji, Laurent Charlin, and Christopher Pal. 2024. LLMs for Literature Review generation: Are we there yet? Under submission.

- Athaluri et al. (2023) Sai Anirudh Athaluri, Sandeep Varma Manthena, V S R Krishna Manoj Kesapragada, Vineel Yarlagadda, Tirth Dave, and Rama Tulasi Siri Duddumpudi. 2023. Exploring the boundaries of reality: Investigating the phenomenon of artificial intelligence hallucination in scientific writing through chatgpt references. Cureus, 15.

- Brown et al. (2020) Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language models are few-shot learners.

- Crispino et al. (2023) Nicholas Crispino, Kyle Montgomery, Fankun Zeng, Dawn Song, and Chenguang Wang. 2023. Agent instructs large language models to be general zero-shot reasoners. ArXiv, abs/2310.03710.

- Dwivedi-Yu et al. (2022) Jane Dwivedi-Yu, Timo Schick, Zhengbao Jiang, Maria Lomeli, Patrick Lewis, Gautier Izacard, Edouard Grave, Sebastian Riedel, and Fabio Petroni. 2022. Editeval: An instruction-based benchmark for text improvements. arXiv preprint arXiv:2209.13331.

- Gao et al. (2023) Yunfan Gao, Yun Xiong, Xinyu Gao, Kangxiang Jia, Jinliu Pan, Yuxi Bi, Yi Dai, Jiawei Sun, and Haofen Wang. 2023. Retrieval-augmented generation for large language models: A survey. arXiv preprint arXiv:2312.10997.

- Haman and Školník (2023) Michael Haman and Milan Školník. 2023. Using chatgpt to conduct a literature review. Accountability in Research, pages 1–3.

- Hou et al. (2023) Yupeng Hou, Junjie Zhang, Zihan Lin, Hongyu Lu, Ruobing Xie, Julian McAuley, and Wayne Xin Zhao. 2023. Large language models are zero-shot rankers for recommender systems. ArXiv, abs/2305.08845.

- Huang and Tan (2023) Jingshan Huang and Ming Tan. 2023. The role of chatgpt in scientific communication: writing better scientific review articles. American Journal of Cancer Research, 13(4):1148.

- Huang et al. (2023) Lei Huang, Weijiang Yu, Weitao Ma, Weihong Zhong, Zhangyin Feng, Haotian Wang, Qianglong Chen, Weihua Peng, Xiaocheng Feng, Bing Qin, et al. 2023. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. arXiv preprint arXiv:2311.05232.

- Kim et al. (2020) Jihyeok Kim, Seungtaek Choi, Reinald Kim Amplayo, and Seung-won Hwang. 2020. Retrieval-augmented controllable review generation. In Proceedings of the 28th International Conference on Computational Linguistics, pages 2284–2295.

- Kinney et al. (2023) Rodney Kinney, Chloe Anastasiades, Russell Authur, Iz Beltagy, Jonathan Bragg, Alexandra Buraczynski, Isabel Cachola, Stefan Candra, Yoganand Chandrasekhar, Arman Cohan, et al. 2023. The semantic scholar open data platform. arXiv preprint arXiv:2301.10140.

- Kojima et al. (2022) Takeshi Kojima, Shixiang Shane Gu, Machel Reid, Yutaka Matsuo, and Yusuke Iwasawa. 2022. Large language models are zero-shot reasoners. Advances in neural information processing systems, 35:22199–22213.

- Lewis et al. (2020) Patrick Lewis, Ethan Perez, Aleksandra Piktus, Fabio Petroni, Vladimir Karpukhin, Naman Goyal, Heinrich Küttler, Mike Lewis, Wen-tau Yih, Tim Rocktäschel, et al. 2020. Retrieval-augmented generation for knowledge-intensive nlp tasks. Advances in Neural Information Processing Systems, 33:9459–9474.

- Li et al. (2023) Hang Li, Jindong Gu, Rajat Koner, Sahand Sharifzadeh, and Volker Tresp. 2023. Do dall-e and flamingo understand each other? In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1999–2010.

- Li et al. (2022) Junlong Li, Zhuosheng Zhang, and Hai Zhao. 2022. Self-prompting large language models for zero-shot open-domain qa.

- Li et al. (2024) Junyi Li, Jie Chen, Ruiyang Ren, Xiaoxue Cheng, Wayne Xin Zhao, Jian-Yun Nie, and Ji-Rong Wen. 2024. The dawn after the dark: An empirical study on factuality hallucination in large language models. arXiv preprint arXiv:2401.03205.

- Li and Liang (2021) Xiang Lisa Li and Percy Liang. 2021. Prefix-tuning: Optimizing continuous prompts for generation. arXiv preprint arXiv:2101.00190.

- Liu et al. (2020) Shangqing Liu, Yu Chen, Xiaofei Xie, Jingkai Siow, and Yang Liu. 2020. Retrieval-augmented generation for code summarization via hybrid gnn. arXiv preprint arXiv:2006.05405.

- Liusie et al. (2023) Adian Liusie, Potsawee Manakul, and Mark John Francis Gales. 2023. Llm comparative assessment: Zero-shot nlg evaluation through pairwise comparisons using large language models.

- Lo et al. (2020) Kyle Lo, Lucy Lu Wang, Mark Neumann, Rodney Kinney, and Daniel Weld. 2020. S2ORC: The semantic scholar open research corpus. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 4969–4983, Online. Association for Computational Linguistics.

- Lu et al. (2020) Yao Lu, Yue Dong, and Laurent Charlin. 2020. Multi-XScience: A large-scale dataset for extreme multi-document summarization of scientific articles. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 8068–8074. Association for Computational Linguistics.

- Ma et al. (2023) Xueguang Ma, Xinyu Zhang, Ronak Pradeep, and Jimmy Lin. 2023. Zero-shot listwise document reranking with a large language model. arXiv preprint arXiv:2305.02156.

- Parvez et al. (2021) Md Rizwan Parvez, Wasi Uddin Ahmad, Saikat Chakraborty, Baishakhi Ray, and Kai-Wei Chang. 2021. Retrieval augmented code generation and summarization. arXiv preprint arXiv:2108.11601.

- Pradeep et al. (2023a) Ronak Pradeep, Sahel Sharifymoghaddam, and Jimmy Lin. 2023a. Rankvicuna: Zero-shot listwise document reranking with open-source large language models. arXiv preprint arXiv:2309.15088.

- Pradeep et al. (2023b) Ronak Pradeep, Sahel Sharifymoghaddam, and Jimmy Lin. 2023b. Rankzephyr: Effective and robust zero-shot listwise reranking is a breeze! arXiv preprint arXiv:2312.02724.

- Qin and Eisner (2021) Guanghui Qin and Jason Eisner. 2021. Learning how to ask: Querying LMs with mixtures of soft prompts. arXiv preprint arXiv:2104.06599.

- Qu et al. (2021) Leigang Qu, Meng Liu, Jianlong Wu, Zan Gao, and Liqiang Nie. 2021. Dynamic modality interaction modeling for image-text retrieval. Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval.

- Reiter and Dale (1997) Ehud Reiter and Robert Dale. 1997. Building applied natural language generation systems. Natural Language Engineering, 3(1):57–87.

- Shi et al. (2023) Fobo Shi, Peijun Qing, D. Yang, Nan Wang, Youbo Lei, H. Lu, and Xiaodong Lin. 2023. Prompt space optimizing few-shot reasoning success with large language models. ArXiv, abs/2306.03799.

- Srinivasan et al. (2021) Krishna Srinivasan, Karthik Raman, Jiecao Chen, Michael Bendersky, and Marc Najork. 2021. Wit: Wikipedia-based image text dataset for multimodal multilingual machine learning. Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval.

- Stent et al. (2004) Amanda Stent, Rashmi Prasad, and Marilyn Walker. 2004. Trainable sentence planning for complex information presentations in spoken dialog systems. In Proceedings of the 42nd Annual Meeting of the Association for Computational Linguistics (ACL-04), pages 79–86, Barcelona, Spain.

- Sun et al. (2023) Weiwei Sun, Lingyong Yan, Xinyu Ma, Pengjie Ren, Dawei Yin, and Zhaochun Ren. 2023. Is chatgpt good at search? investigating large language models as re-ranking agent. arXiv preprint arXiv:2304.09542.

- Taylor et al. (2022) Ross Taylor, Marcin Kardas, Guillem Cucurull, Thomas Scialom, Anthony Hartshorn, Elvis Saravia, Andrew Poulton, Viktor Kerkez, and Robert Stojnic. 2022. Galactica: A large language model for science. arXiv preprint arXiv:2211.09085.

- Thulke et al. (2021) David Thulke, Nico Daheim, Christian Dugast, and Hermann Ney. 2021. Efficient retrieval augmented generation from unstructured knowledge for task-oriented dialog. arXiv preprint arXiv:2102.04643.

- Tonmoy et al. (2024) SM Tonmoy, SM Zaman, Vinija Jain, Anku Rani, Vipula Rawte, Aman Chadha, and Amitava Das. 2024. A comprehensive survey of hallucination mitigation techniques in large language models. arXiv preprint arXiv:2401.01313.

- Wei et al. (2021) Jason Wei, Maarten Bosma, Vincent Zhao, Kelvin Guu, Adams Wei Yu, Brian Lester, Nan Du, Andrew M. Dai, and Quoc V. Le. 2021. Finetuned language models are zero-shot learners. ArXiv, abs/2109.01652.

- Wei et al. (2022) Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed H Chi, Quoc V Le, Denny Zhou, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. In Advances in Neural Information Processing Systems.

- Yu et al. (2022) Jiahui Yu, Zirui Wang, Vijay Vasudevan, Legg Yeung, Mojtaba Seyedhosseini, and Yonghui Wu. 2022. Coca: Contrastive captioners are image-text foundation models. Trans. Mach. Learn. Res., 2022.

- Zhang et al. (2023) Xinyu Zhang, Sebastian Hofstätter, Patrick Lewis, Raphael Tang, and Jimmy Lin. 2023. Rank-without-gpt: Building gpt-independent listwise rerankers on open-source large language models. arXiv preprint arXiv:2312.02969.

- Zhao et al. (2022) Zijia Zhao, Longteng Guo, Xingjian He, Shuai Shao, Zehuan Yuan, and Jing Liu. 2022. Mamo: Fine-grained vision-language representations learning with masked multimodal modeling. Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval.

- Zhou et al. (2022) Yongchao Zhou, Andrei Ioan Muresanu, Ziwen Han, Keiran Paster, Silviu Pitis, Harris Chan, and Jimmy Ba. 2022. Large language models are human-level prompt engineers. arXiv preprint arXiv:2211.01910.

- Zhu et al. (2023) Yutao Zhu, Huaying Yuan, Shuting Wang, Jiongnan Liu, Wenhan Liu, Chenlong Deng, Zhicheng Dou, and Ji-Rong Wen. 2023. Large language models for information retrieval: A survey. arXiv preprint arXiv:2308.07107.

In the following, we provide snippets of code to retrieve results from the Semantic Scholar API for both recommendation and query-based search:
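As an illustrative complement (not the authors' snippets), the sketch below flattens the JSON returned by both Semantic Scholar endpoints into uniform records. It assumes the documented response shapes: paper-search results sit under `"data"`, recommendations sit under `"recommendedPapers"`, and `openAccessPdf` is either null or an object with a `"url"` key. The output record keys are hypothetical.

```python
from typing import Any, Dict, List


def papers_from_response(payload: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Normalize a paper-search or recommendations response into flat records."""
    # Search responses list papers under "data"; recommendation responses under "recommendedPapers".
    items = payload.get("data") or payload.get("recommendedPapers") or []
    records = []
    for item in items:
        # openAccessPdf may be null when no open-access copy is known.
        pdf = item.get("openAccessPdf") or {}
        records.append(
            {
                "paper_id": item.get("paperId"),
                "title": item.get("title", ""),
                "abstract": item.get("abstract") or "",
                "year": item.get("year"),
                "citation_count": item.get("citationCount", 0),
                "pdf_url": pdf.get("url"),
            }
        )
    return records
```

Normalizing both response types early means the re-ranker and generator can consume search results and recommendations interchangeably.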

| Abstract for paper: Do DALL-E and Flamingo Understand Each Other? |

|---|

| The field of multimodal research focusing on the comprehension and creation of both images and text has witnessed significant strides. This progress is exemplified by the emergence of sophisticated models dedicated to image captioning at scale, such as the notable Flamingo model and text-to-image generative models, with DALL-E serving as a prominent example. An interesting question worth exploring in this domain is whether Flamingo and DALL-E understand each other. To study this question, we propose a reconstruction task where Flamingo generates a description for a given image and DALL-E uses this description as input to synthesize a new image. We argue that these models understand each other if the generated image is similar to the given image. Specifically, we study the relationship between the quality of the image reconstruction and that of the text generation. We find that an optimal description of an image is one that gives rise to a generated image similar to the original one. The finding motivates us to propose a unified framework to finetune the text-to-image and image-to-text models. Concretely, the reconstruction part forms a regularization loss to guide the tuning of the models. Extensive experiments on multiple datasets with different image captioning and image generation models validate our findings and demonstrate the effectiveness of our proposed unified framework. As DALL-E and Flamingo are not publicly available, we use Stable Diffusion and BLIP in the remaining work. Project website: |

| LLM summarized query: Multimodal Research: Image-Text Model Interaction |

| Retrieved papers: (User Input: 4) |

| CoCa: Contrastive Captioners are Image-Text Foundation Models (2022), cited by 702 |

| MAMO: Fine-Grained Vision-Language Representations Learning with Masked Multimodal Modeling (2022), cited by 0 |

| Dynamic Modality Interaction Modeling for Image-Text Retrieval (2021), cited by 88 |

| WIT: Wikipedia-based Image Text Dataset for Multimodal Multilingual Machine Learning (2021), cited by 185 |

| Zero-shot generation (Section 3.3.1) |

| [1] presents the Contrastive Captioner (CoCa), a model that combines contrastive loss and captioning loss to pretrain an image-text encoder-decoder foundation model. This model, while efficient, does not fully address the interaction between image and text modalities at a fine-grained level. The work of [2] addresses this gap by proposing a jointly masked multimodal modeling method that focuses on fine-grained multimodal representations. This method performs joint masking on image-text input and integrates both implicit and explicit targets for the masked signals to recover. However, this approach, while effective, does not fully address the challenges of intra-modal reasoning and cross-modal alignment that are inherent in image-text retrieval. The work of [3] presents a novel modality interaction modeling network based on the routing mechanism, which is the first unified and dynamic multimodal interaction framework towards image-text retrieval. This model can dynamically learn different activated paths for different data, providing a more flexible approach to modality interaction. However, the design of interaction patterns in this model still relies heavily on expert experience and empirical feedback, which may limit its applicability in different contexts. The work of [4] introduces the Wikipedia-based Image Text (WIT) Dataset, a large-scale dataset for multimodal, multilingual learning. This dataset, while providing a rich resource for multimodal learning, does not directly address the question of how different models can understand each other and work together. In light of these previous works, our study proposes a reconstruction task where Flamingo generates a description for a given image and DALL-E uses this description as input to synthesize a new image. We argue that these models understand each other if the generated image is similar to the given image. This approach allows us to study the relationship between the quality of the image reconstruction and that of the text generation, and to propose a unified framework to finetune the text-to-image and image-to-text models. Our extensive experiments validate our findings and demonstrate the effectiveness of our proposed unified framework. |

| Plan-based generation (Section 3.3.2): Generate the output using 5 sentences. Cite [1] on line 2. Cite [2], [3] on line 3. Cite [4] on line 5. |

| The field of multimodal research has seen significant advancements in the comprehension and creation of both images and text, with models like Flamingo and DALL-E leading the way. In a similar vein, the Contrastive Captioner (CoCa) model presented in [1] pretrains an image-text encoder-decoder foundation model with contrastive loss and captioning loss, achieving state-of-the-art performance on a broad range of downstream tasks. Other works have also focused on improving the fine-grained image-text interaction, with [2] proposing a jointly masked multimodal modeling method and [3] developing a dynamic multimodal interaction framework for image-text retrieval. These models, while effective, often rely on expert experience and empirical feedback, which may limit their flexibility. The introduction of the Wikipedia-based Image Text (WIT) Dataset in [4] provides a large, multilingual dataset for pretraining multimodal models, offering a more diverse set of concepts and real-world entities for model training. This diversity and scale could potentially enhance the performance of models like Flamingo and DALL-E, further advancing the field of multimodal research. |

Literature Review Generator

AI tool for generating academic literature reviews.

- Conduct a literature review for a dissertation or thesis: Save time and ensure a comprehensive understanding of your research topic.

- Prepare for a research proposal: Demonstrate a thorough understanding of the existing literature in your field.

- Write a research paper or article: Use the tool to generate a literature review section for your academic paper or article.

- Develop course materials: As an educator, you can use the tool to prepare literature reviews for course materials or to provide examples to students.


AI-Based Literature Review Tools


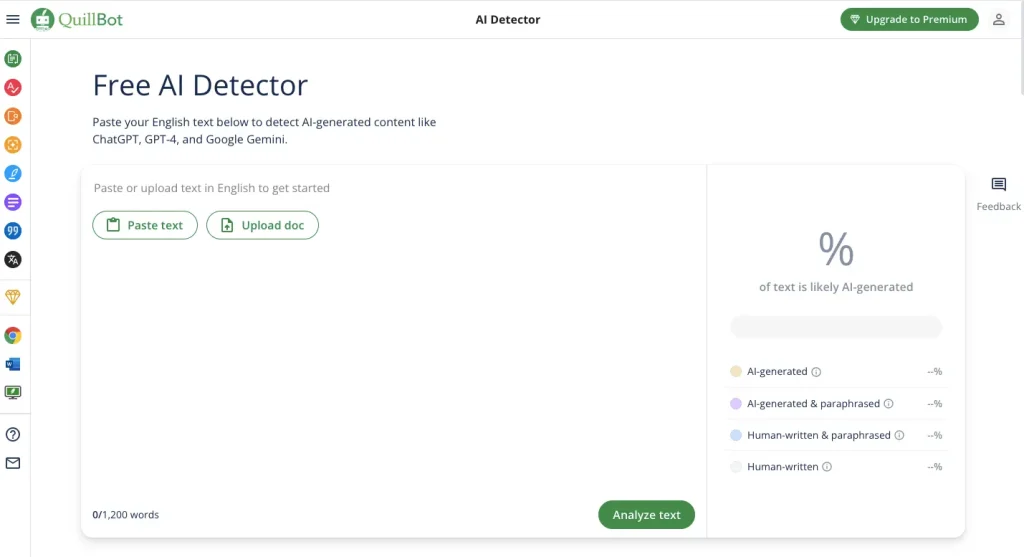


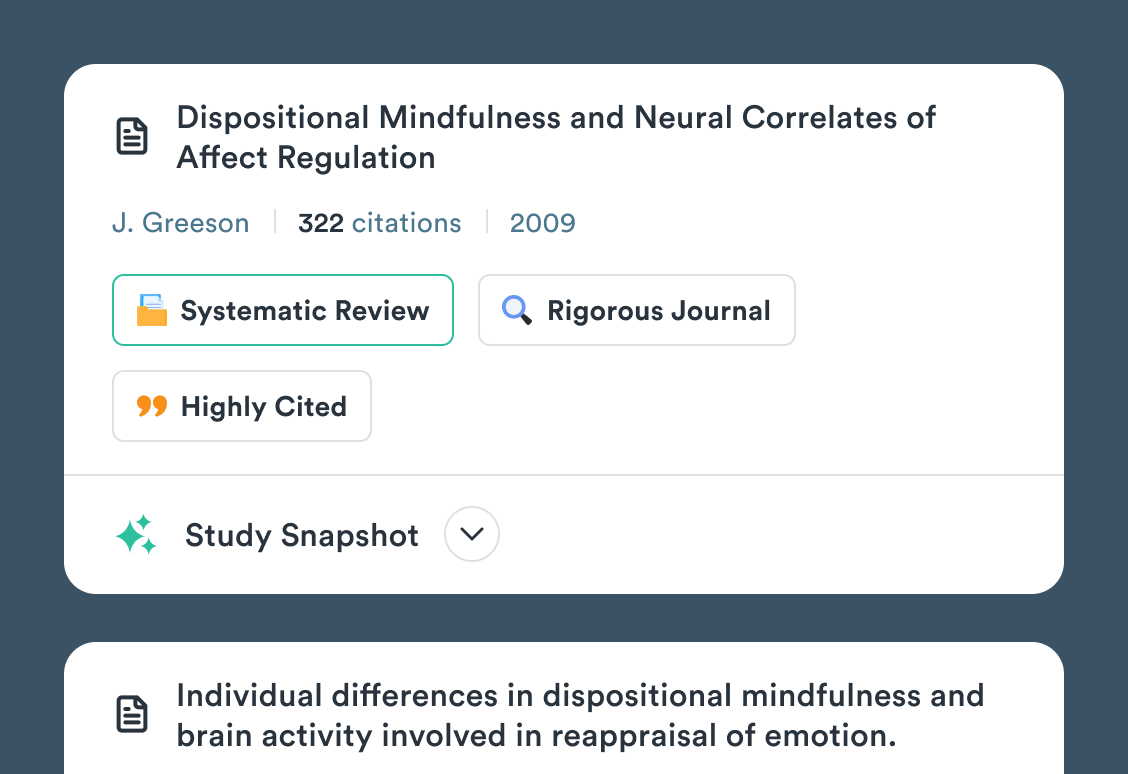

Selected AI-Based Literature Review Tools

Disclaimer:

- The guide is intended for informational purposes only. Evaluate these tools and their methods of use independently before relying on them.

- Research AI Assistant is available in Dimensions Analytics (TAMU) and Statista (TAMU).

- See news about their AI Assistant (Beta): Web of Science, Scopus, Ebsco, ProQuest, OVID, Dimensions, JStor, Westlaw, and LexisNexis.

Suggestions:

- Please keep these differences in mind when exploring AI-powered academic search engines.

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

- https://www.openread.academy/

- Accessed institutionally by Harvard, MIT, University of Oxford, Johns Hopkins, Stanford, and more.

- AI-powered Academic Searching + Web Searching - Over 300 million papers and real-time web content.

- Trending and Topics - Browse these to find the latest trending papers. Use Topics to select a specific field and then see what is trending in it.

- Each keyword search or AI query generates a synthesis report with citations. To adjust the results, click the Re-Generate button to refresh the report and its accompanying citations. Then click Follow-Up Questions to go deeper into a specific area or subject.

- Use Paper Q&A to interact with a text directly. Examples: "What does this paper say about machine translation?"; "What is C-1 in Fig. 1?"

- When you read a paper, select any of the following tools under Basic Information to get more information: Basic Information > Related Paper Graph > Paper Espresso > Paper Q&A > Notes. The Related Paper Graph presents related studies in a visual map, with relevance shown as a percentage.

- Click Translation to translate a text or the search results into another language.

- Read or upload a document and let Paper Espresso analyze it for you. It organizes the content into a standard academic report format for easy reference: Background and Context > Research Objectives and Hypotheses > Methodology > Results and Findings > Discussion and Interpretation > Contributions to the field > Structure and Flow > Achievements and Significance > Limitations and Future Work.

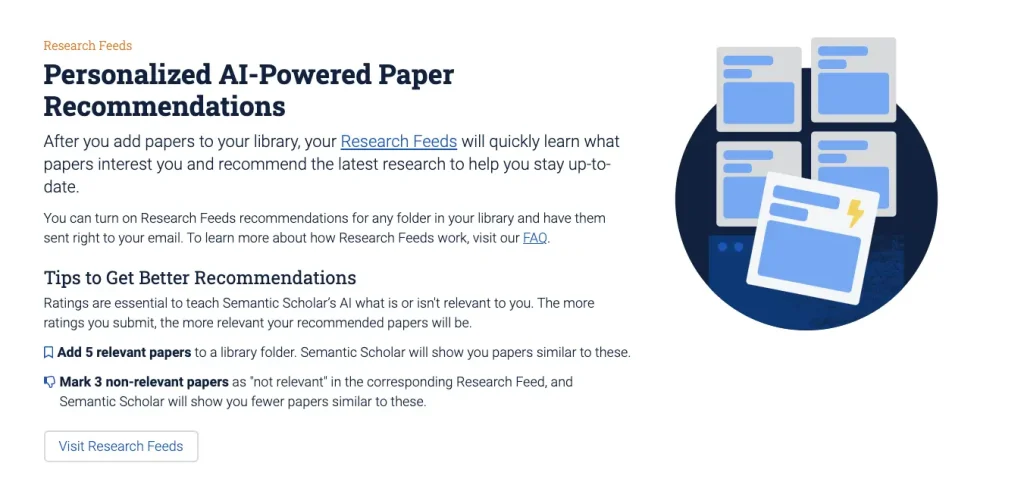

SEMANTIC SCHOLAR

- SCIENTIFIC LITERATURE SEARCH ENGINE - finding semantically similar research papers.

- "A free, AI-powered research tool for scientific literature." <https://www.semanticscholar.org/>. However, you must log in to use all functions.

- Over 200 million papers from all fields of science. Its data has also served as a foundation for the development of other AI-driven tools.

The 4,000+ results can be filtered by Fields of Study, Date Range, Author, and Journals & Conferences.

Save papers to your Library folder. Research Feeds will then recommend similar papers based on the saved items.

Example - SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality [Note: these numbers were gathered when this guide was created]
  - Total Citations: 22,438
  - Highly Influential Citations: 2,001
  - Background Citations: 6,109
  - Methods Citations: 3,273
  - Results Citations: 385
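Semantic Scholar also offers a public Academic Graph API, which is one way to pull citation counts like those in the SERVQUAL example programmatically. The following is a minimal sketch, assuming the `/graph/v1/paper/search` endpoint and its `title`, `year`, `citationCount` and `influentialCitationCount` fields; the helper names are hypothetical, unauthenticated requests are rate-limited, and live counts will differ from the figures recorded in this guide.

```python
import json
import urllib.parse
import urllib.request

# Semantic Scholar Academic Graph API keyword-search endpoint.
SEARCH_URL = "https://api.semanticscholar.org/graph/v1/paper/search"


def build_search_url(query: str, limit: int = 10) -> str:
    """Build a search URL asking for titles, years and citation counts."""
    params = {
        "query": query,
        "fields": "title,year,citationCount,influentialCitationCount",
        "limit": limit,
    }
    return f"{SEARCH_URL}?{urllib.parse.urlencode(params)}"


def parse_results(payload: str) -> list[tuple[str, int, int]]:
    """Turn the JSON response into (title, citations, influential citations) rows."""
    papers = json.loads(payload).get("data", [])
    rows = [
        (
            p.get("title") or "",
            p.get("citationCount") or 0,
            p.get("influentialCitationCount") or 0,
        )
        for p in papers
    ]
    # Most-cited papers first.
    return sorted(rows, key=lambda row: row[1], reverse=True)


def search(query: str, limit: int = 10) -> list[tuple[str, int, int]]:
    """Run a live search (requires network access)."""
    with urllib.request.urlopen(build_search_url(query, limit), timeout=30) as resp:
        return parse_results(resp.read().decode("utf-8"))
```

With network access, `search("SERVQUAL service quality", limit=5)` returns up to five matching papers, most-cited first. Keeping the parsing separate from the HTTP call makes it easy to check against a saved response.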

Semantic Reader "Semantic Reader is an augmented reader with the potential to revolutionize scientific reading by making it more accessible and richly contextual." It "uses artificial intelligence to understand a document’s structure and merge it with the Semantic Scholar’s academic corpus, providing detailed information in context via tooltips and other overlays." <https://www.semanticscholar.org/product/semantic-reader>.

Skim Papers Faster "Find key points of this paper using automatically highlighted overlays. Available in beta on limited papers for desktop devices only." <https://www.semanticscholar.org/product/semantic-reader>. Press the pen icon to activate the highlights.

TLDRs (Too Long; Didn't Read) Try this example. Press the pen icon to reveal the highlighted key points. TLDRs "are super-short summaries of the main objective and results of a scientific paper generated using expert background knowledge and the latest GPT-3 style NLP techniques. This new feature is available in beta for nearly 60 million papers in computer science, biology, and medicine..." <https://www.semanticscholar.org/product/tldr>

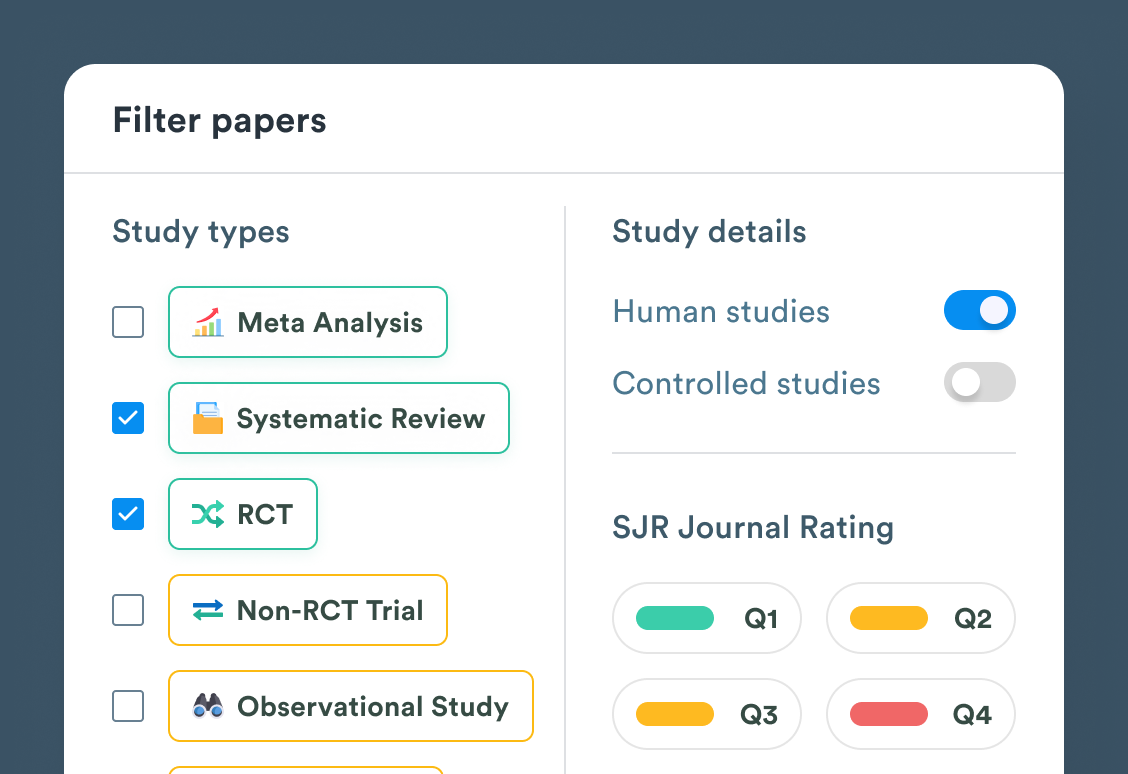

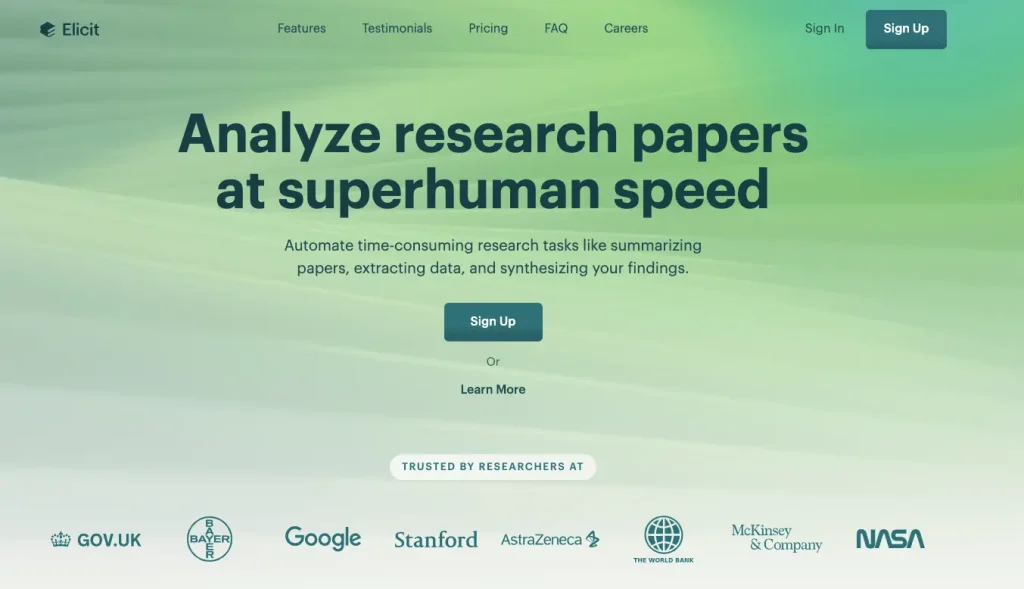

- AI-POWERED RESEARCH ASSISTANT - finding papers, filtering study types, automating research flow, brainstorming, summarizing and more.

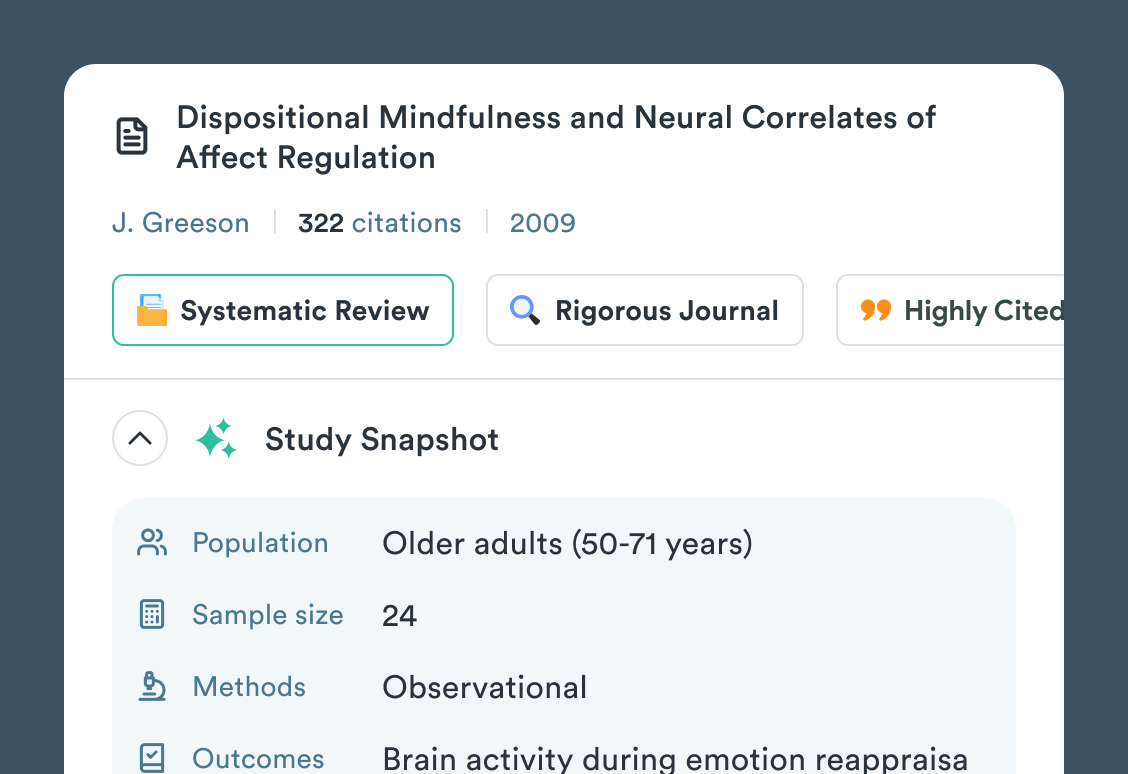

- "Elicit is a research assistant using language models like GPT-3 to automate parts of researchers’ workflows. Currently, the main workflow in Elicit is Literature Review. If you ask a question, Elicit will show relevant papers and summaries of key information about those papers in an easy-to-use table." <https://elicit.org/faq#what-is-elicit>. Find answers from 175 million papers. See the FAQs.

- Example - How do mental health interventions vary by age group? / Fish oil and depression
  Results [login required]:
  (1) Summary of the top 4 papers > Papers #1-#4 with title, abstract, citations, DOI, and PDF
  (2) Table view: Abstract / Interventions / Outcomes measured / Number of participants
  (3) Relevant studies and citations
  (4) Click Search for Paper Information to find: Metadata about Sources (SJR, etc.) > Population (age, etc.) > Intervention (duration, etc.) > Results (outcome, limitations, etc.) > Methodology (detailed study design, etc.)
  (5) Export as BIB or CSV

- How to Search / Extract Data / List of Concepts Search
  - Search: enter a research question. Workflow: Searching > Summarizing 8 papers > A summary of the top 4 papers > Final answer. Each result shows its citation count, DOI, and a full-text link to the Semantic Scholar website for more information, such as background citations, methods citations, and related papers.
  - List of Concepts search: e.g., adult learning motivation. The results present a list of related concepts.
  - Extract data from a PDF file: upload a paper and let Elicit extract data for you.

- Export Results - Various ways to export results.

- How to Cite - Include the elicit.org URL in the citation, for example: Ought; Elicit: The AI Research Assistant; https://elicit.org; accessed xxxx/xx/xx
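Once Elicit results are exported as CSV, a few lines of Python can narrow them down further. The following is a minimal sketch, assuming hypothetical column names `Title`, `Year` and `Citations`. Check the header row of your own export, since the actual columns depend on what you extracted in Elicit.

```python
import csv
import io


def top_papers(csv_text: str, min_year: int, title_col: str = "Title",
               year_col: str = "Year", cites_col: str = "Citations") -> list[str]:
    """Return titles published in or after min_year, most-cited first.

    The default column names are hypothetical placeholders; pass the names
    that appear in the header row of your own export.
    """
    kept: list[tuple[int, str]] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        year = (row.get(year_col) or "").strip()
        if not year.isdigit() or int(year) < min_year:
            continue  # skip rows with a missing, non-numeric or older year
        cites = (row.get(cites_col) or "").strip()
        kept.append((int(cites) if cites.isdigit() else 0, row.get(title_col) or ""))
    kept.sort(key=lambda item: item[0], reverse=True)  # stable sort: ties keep file order
    return [title for _, title in kept]


sample = "Title,Year,Citations\nA,2019,10\nB,2015,99\nC,2021,40\nD,,5\n"
print(top_papers(sample, 2018))  # ['C', 'A']
```

For a real export, read the file with `open(path, newline="", encoding="utf-8")` and pass its contents to `top_papers`. The path and column names are yours to supply.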

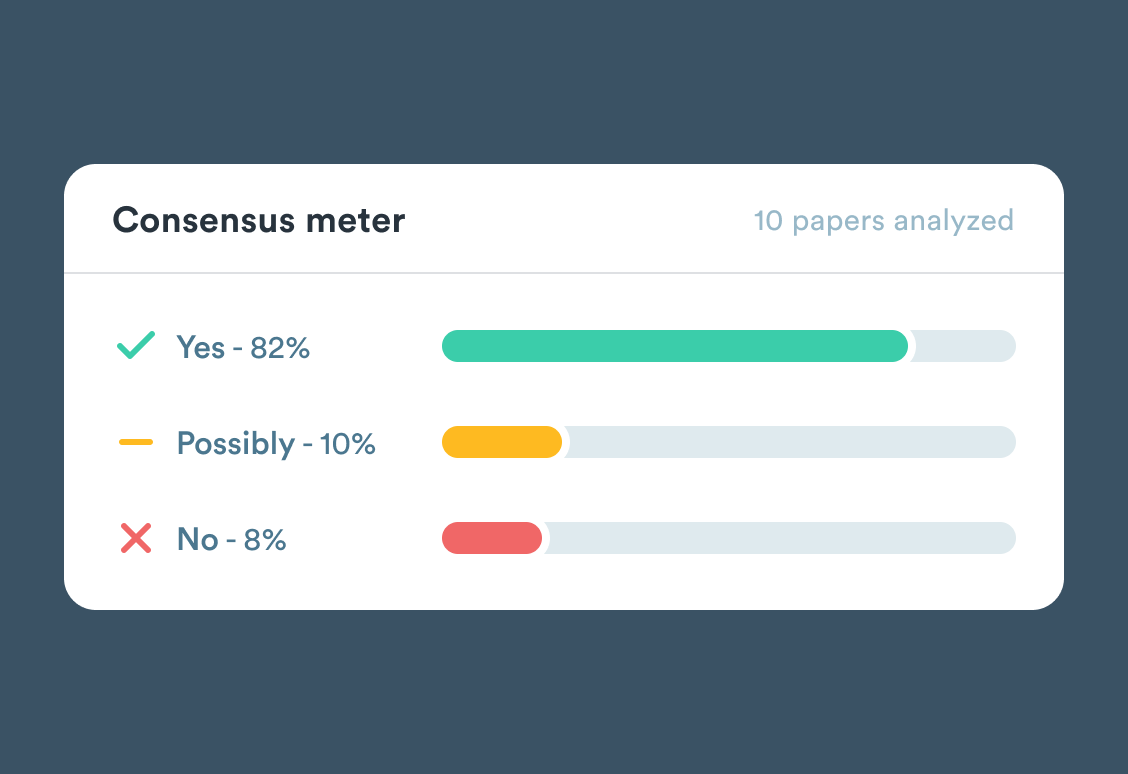

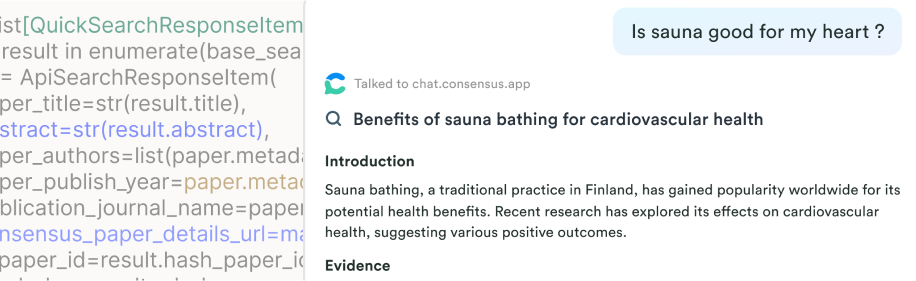

CONSENSUS.APP

ACADEMIC SEARCH ENGINE - using AI to find insights in research papers.

"We are a search engine that is designed to accept research questions, find relevant answers within research papers, and synthesize the results using the same language model technology." <https://consensus.app/home/blog/maximize-your-consensus-experience-with-these-best-practices/>

- Example - Does the death penalty reduce the crime? / Fish oil and depression
  (1) Extracted and aggregated findings from relevant papers
  (2) Results may include AIMS, DESIGN, PARTICIPANTS, FINDINGS, or other methodological or report components
  (3) Summaries and full text

- How to Search
  - Direct questions: Does the death penalty reduce the crime?
  - Relationship between two concepts: Fish oil and depression / Does X cause Y?
  - Open-ended concepts: effects of immigration on local economics
  - Tips and search examples: Consensus' Best Practice

- Synthesize (beta) / Consensus Meter - When the AI recognizes certain types of research questions, this feature may be activated. It examines a selection of studies and provides a summary along with a Consensus Meter illustrating their collective agreement. Try this search: Is white rice linked to diabetes? After analyzing 10 papers, the Consensus Meter shows the following: 70% indicate a positive association, 20% suggest a possible connection, and 10% indicate no link.
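The percentages in the white-rice example are simple shares of the papers analyzed. A hypothetical sketch of that arithmetic follows. It is not Consensus's actual method, which the guide does not describe. It assumes each of the 10 papers carries exactly one answer label, which puts 7, 2 and 1 papers behind the three answers.

```python
from collections import Counter


def consensus_meter(labels: list[str]) -> dict[str, int]:
    """Return the whole-number percentage of papers behind each answer label.

    Hypothetical sketch: each analyzed paper is assumed to carry exactly one
    label such as "yes", "possibly" or "no". Rounded shares may not sum to
    exactly 100 for every input.
    """
    if not labels:
        return {}
    counts = Counter(labels)
    total = len(labels)
    # most_common() lists the most frequent answer first.
    return {label: round(100 * n / total) for label, n in counts.most_common()}


# Seven "yes", two "possibly" and one "no" out of ten papers.
meter = consensus_meter(["yes"] * 7 + ["possibly"] * 2 + ["no"])
print(meter)  # {'yes': 70, 'possibly': 20, 'no': 10}
```

A meter based on only 10 papers moves 10 percentage points for every paper, so a single reclassified study noticeably changes the picture.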

Prompt: “write me a paragraph about the impact of climate change on GDP with citations”

CITATIONS IN CONTEXT

Integrated with Research Solutions.

Over 1.2 billion citation statements and metadata from over 181 million papers.

How does it work? - "scite uses access to full-text articles and its deep learning model to tell you, for a given publication: - how many times it was cited by others - how it was cited by others by displaying the text where the citation happened from each citing paper - whether each citation offers supporting or contrasting evidence of the cited claims in the publication of interest, or simply mention it." <https://help.scite.ai/en-us/article/what-is-scite-1widqmr/>
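The three things scite reports for a publication can be modeled as a simple data structure: how many papers cite it, the citing sentences, and a supporting, contrasting or mentioning label for each. The following is a minimal sketch using a hypothetical `CitationStatement` type. It assumes the labels have already been assigned. scite assigns them with its deep learning model, but here they are typed in by hand.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CitationStatement:
    citing_paper: str  # hypothetical identifier of the citing article
    snippet: str       # sentence in which the citation appears
    label: str         # "supporting", "contrasting" or "mentioning" (hand-assigned here)


def summarize(statements: list[CitationStatement]) -> tuple[int, dict[str, list[str]]]:
    """Count distinct citing papers and group citation snippets by label."""
    citing_papers = {s.citing_paper for s in statements}
    groups: dict[str, list[str]] = defaultdict(list)
    for s in statements:
        groups[s.label].append(f"{s.citing_paper}: {s.snippet}")
    return len(citing_papers), dict(groups)


statements = [
    CitationStatement("P1", "Our results confirm this effect [3].", "supporting"),
    CitationStatement("P2", "Unlike [3], we find no effect.", "contrasting"),
    CitationStatement("P1", "We followed the protocol of [3].", "mentioning"),
]
cited_by, groups = summarize(statements)
print(cited_by)        # 2
print(sorted(groups))  # ['contrasting', 'mentioning', 'supporting']
```

One citing paper can contribute several statements with different labels, which is why the count of citing papers and the count of statements are kept separate.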

EXAMPLE of seeing all citations and citation statements in one place

More information: Scite: A smart citation index that displays the context of citations and classifies their intent using deep learning

Scholar GPT - By awesomegpts.ai

- "Enhance research with 200M+ resources and built-in critical reading skills. Access Google Scholar, PubMed, JSTOR, Arxiv, and more, effortlessly."

- Dialogue prompts suggested on the page:
  - Find the latest research about AI.
  - I'll provide a research paper link; please analyze it.
  - I will upload a PDF paper; use critical skills to read it.
  - Type "LS" to list my built-in critical reading list.

- To access it, go to your ChatGPT account and select Explore GPTs > Scholar GPT.

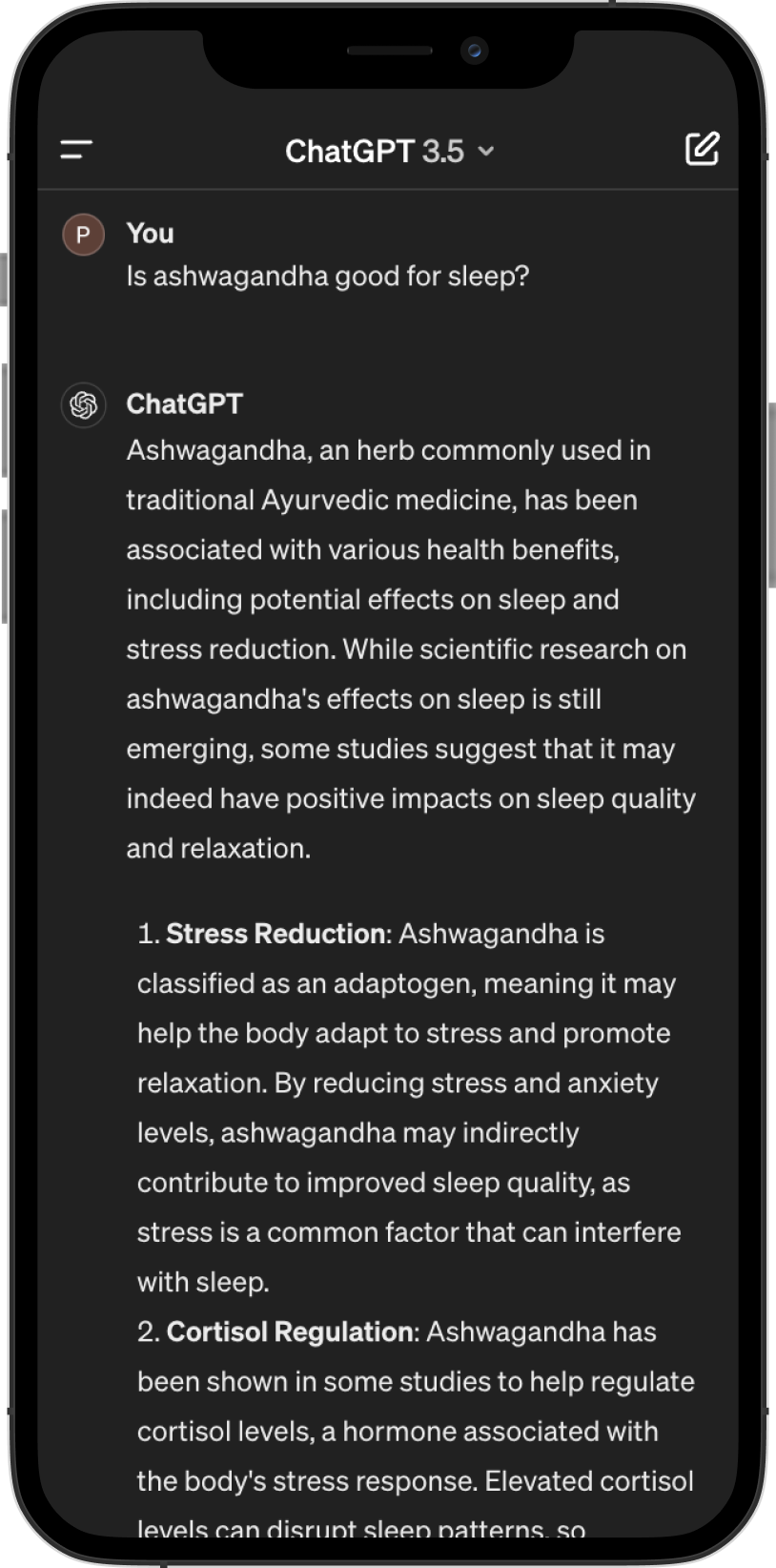

- GPT-3.5 by OpenAI. Its knowledge cutoff date is September 2021.

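- Input/output length - GPT-3.5 in ChatGPT allows a maximum of 4,096 tokens, covering both the prompt and the response. According to ChatGPT, "On average, a token in English is roughly equivalent to 4 bytes or characters. English words are typically around 5 characters long. This means that, very roughly, you could fit around 800 to 1000 English words within 4096 tokens." The word estimate in this quote is too low. By the same 4-characters-per-token figure, 4,096 tokens is about 16,000 characters, or roughly 3,000 English words. This matches OpenAI's rule of thumb of about 0.75 words per token.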

- According to ChatGPT, its responses are non-deterministic by default. If you run the same search again and get slightly or very different results, this is the likely reason.

- ChatGPT may generate non-existent references.

- According to this study <https://arxiv.org/ftp/arxiv/papers/2304/2304.06794.pdf>, "ChatGPT cites the most-cited articles and journals, relying solely on Google Scholar's citation counts" within the field of environmental science.

- See a case of using ChatGPT-4o to extract information from a PDF file below.

- Example - "INTERVIEW WITH CHATGPT" as a Research Method & Teaching Tool. Some researchers have begun using this approach to obtain research data. Try this Google Scholar search link "interview with ChatGPT" or see these two articles:
  (1) Chatting about ChatGPT: how may AI and GPT impact academia and libraries? BD Lund, T Wang - Library Hi Tech News, 2023
  (2) An interview with ChatGPT: discussing artificial intelligence in teaching, research, and practice, G Scaringi, M Loche - 2023

  - Increased risk of sudden infant death syndrome (SIDS)
  - Increased risk of premature birth
  - Increased risk of low birth weight
  - Increased risk of respiratory problems in newborns
  - Increased risk of respiratory problems in infants exposed to secondhand smoke
  - Increased risk of developing asthma and other respiratory illnesses later in life for infants exposed to secondhand smoke

[Note: ChatGPT may generate non-existent references or false knowledge. To find out why Large Language Models hallucinate, check out this Wiki article: Hallucination (artificial intelligence) and this blog post: A Gentle Introduction to Hallucinations in Large Language Models by Adrian Tam]

  - Infant death
  - Neonatal mortality (referring specifically to deaths within the first 28 days of life)
  - Perinatal mortality (referring to deaths occurring during the period from 22 completed weeks of gestation to 7 completed days after birth)
  - Early childhood mortality (referring to deaths occurring within the first five years of life)
  - Child mortality (referring to deaths occurring before the age of 18)

[Press the Regenerate button to get more.]

- Example - RELATED WORDS What are the related words of infant mortality? Neonatal mortality, Post-neonatal mortality, Stillbirths, Low birth weight, Malnutrition, Infectious diseases, Vaccination, Maternal health, Access to healthcare, Poverty, Social inequality, Sanitation, Hygiene, Water quality, Childbirth complications, Congenital abnormalities, Birth defects, Maternal age, Under-five mortality, Child mortality, Perinatal mortality, Preterm birth, Low birth weight, Sudden infant death syndrome (SIDS), Maternal mortality, Postnatal care, Malnutrition, Immunization, Access to healthcare, Clean water and sanitation, Congenital anomalies, Infant health disparities, Infant mortality rate (IMR), Infant survival.
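The ChatGPT notes in this guide quote a 4,096-token limit and a 4-characters-per-token rule of thumb, and a quick calculation checks what that limit means in words. The following is a back-of-the-envelope sketch under stated assumptions. It assumes about 5 letters plus one space per English word, uses OpenAI's published rule of thumb of roughly 0.75 words per token, and assumes the prompt and reply share one context window. All of these are approximations, and exact counts require the model's own tokenizer.

```python
def words_from_chars(tokens: int, chars_per_token: float = 4.0,
                     chars_per_word: float = 6.0) -> int:
    """Estimate words via characters: ~4 chars per token, ~5 letters + 1 space per word."""
    return round(tokens * chars_per_token / chars_per_word)


def words_from_ratio(tokens: int, words_per_token: float = 0.75) -> int:
    """Estimate words with the rough 0.75-words-per-token rule of thumb."""
    return round(tokens * words_per_token)


def remaining_tokens(prompt_tokens: int, context_limit: int = 4096) -> int:
    """Tokens left for the reply, assuming prompt and reply share one context window."""
    return max(context_limit - prompt_tokens, 0)


print(words_from_chars(4096))  # 2731
print(words_from_ratio(4096))  # 3072
print(remaining_tokens(1000))  # 3096
```

Both estimates land at roughly 2,700 to 3,100 words, several times the 800 to 1,000 words quoted from ChatGPT. A long pasted article also leaves correspondingly fewer tokens for the answer.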